From the Burrow

TaleSpire Dev Log 351

Hey again folks,

With AOEs shipped, I’ve returned to the lasso tool for the group-movement feature.

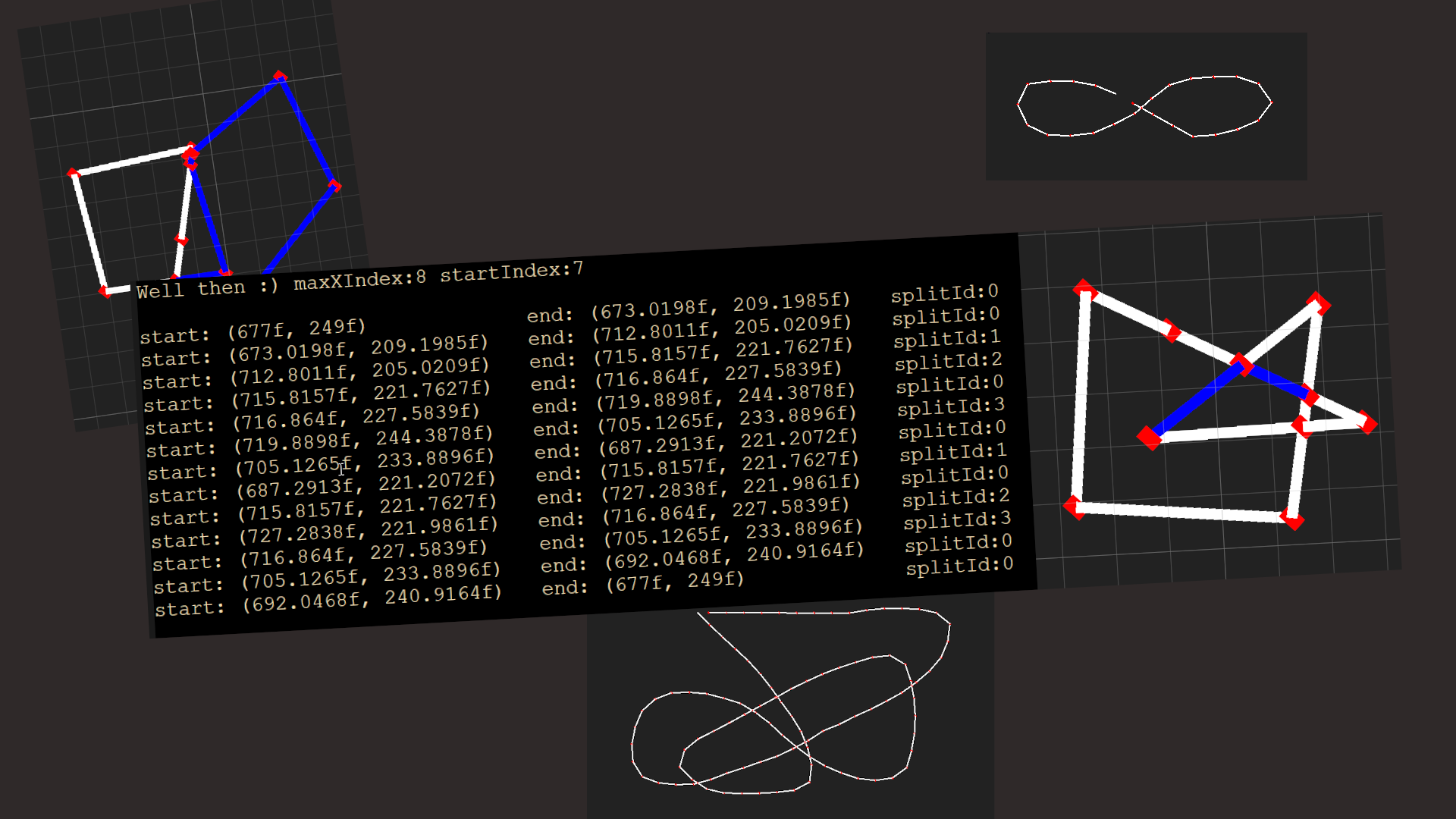

Previously I worked on decomposing the lasso path into simple polygons[0]. This was required for the next step, which is using a technique called “ear clipping” to make a mesh of the selected area.

If you are interested in how it works, then this video is an excellent place to start. It’s very simple, but I still managed to make it take a day :P
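For anyone who prefers reading code to watching a video, here is a minimal ear-clipping sketch in Python. It is not the TaleSpire implementation, which is being written with a Burst port in mind. It assumes a simple polygon given as a list of (x, y) tuples with no three consecutive vertices collinear, and the function names are made up for illustration. The loop repeatedly finds a convex corner whose triangle contains no other remaining vertex, emits that triangle, and removes the corner.

```python
def cross(o, a, b):
    """Z component of (a - o) x (b - o); positive when o -> a -> b turns left."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def signed_area(poly):
    """Shoelace formula; positive for counter-clockwise winding."""
    n = len(poly)
    return 0.5 * sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
                     for i in range(n))


def point_in_triangle(p, a, b, c):
    """True if p lies inside or on an edge of the counter-clockwise triangle abc."""
    return cross(a, b, p) >= 0 and cross(b, c, p) >= 0 and cross(c, a, p) >= 0


def ear_clip(poly):
    """Triangulate a simple polygon (no holes, no self-intersections, no three
    consecutive collinear vertices). Returns triangles as index triples into poly."""
    if len(poly) < 3:
        return []
    idx = list(range(len(poly)))
    if signed_area(poly) < 0:
        idx.reverse()  # work counter-clockwise so "convex" means a left turn
    triangles = []
    while len(idx) > 3:
        m = len(idx)
        for k in range(m):
            i_prev, i_cur, i_next = idx[k - 1], idx[k], idx[(k + 1) % m]
            a, b, c = poly[i_prev], poly[i_cur], poly[i_next]
            if cross(a, b, c) <= 0:
                continue  # reflex or degenerate corner: not an ear
            if any(point_in_triangle(poly[j], a, b, c)
                   for j in idx if j not in (i_prev, i_cur, i_next)):
                continue  # another vertex sits in the triangle: cutting would overlap
            triangles.append((i_prev, i_cur, i_next))
            del idx[k]  # clip the ear off the remaining polygon
            break
        else:
            raise ValueError("no ear found; is the polygon simple?")
    triangles.append(tuple(idx))
    return triangles


l_shape = [(0, 0), (2, 0), (2, 1), (1, 1), (1, 2), (0, 2)]
print(ear_clip(l_shape))  # [(0, 1, 2), (0, 2, 3), (5, 0, 3), (3, 4, 5)]
```

This naive version rescans from the start after every clip, so it is roughly cubic in the worst case. A common refinement is to test only reflex vertices in the containment check, since only they can lie inside an ear.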

I made a separate project for the experiment to speed up compile times. This video shows the test code in action:

An additional requirement for ear clipping to work is that no three consecutive vertices are collinear. It’s a bit faint, but this clip shows that when the verts are in a line, the result is one triangle rather than two.
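One way to meet that requirement is a cleanup pass before meshing. The sketch below is an assumption about how such a pass could look, not TaleSpire code: `remove_collinear` is a hypothetical helper working on (x, y) tuples, and `eps` is an assumed absolute tolerance that would need tuning to the scale of real lasso coordinates. It drops any vertex whose neighbours form a (near) zero cross product with it, wrapping around the end of the list, until no such vertex remains.

```python
def cross(o, a, b):
    """Z component of (a - o) x (b - o); zero when o, a, b are collinear."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def remove_collinear(poly, eps=1e-9):
    """Return a copy of poly with vertices removed until no three consecutive
    vertices (wrapping around) are collinear within eps."""
    pts = list(poly)
    changed = True
    while changed and len(pts) > 3:
        changed = False
        for i in range(len(pts)):
            prev, cur, nxt = pts[i - 1], pts[i], pts[(i + 1) % len(pts)]
            if abs(cross(prev, cur, nxt)) <= eps:
                del pts[i]  # cur lies on the line through its neighbours
                changed = True
                break
    return pts


square_with_extras = [(0, 0), (1, 0), (2, 0), (2, 2), (0, 2)]
print(remove_collinear(square_with_extras))  # [(0, 0), (2, 0), (2, 2), (0, 2)]
```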

Now that I have this working, I will clean up the code so it is ready to combine with the previous lasso work. I’ve been careful to write the code in a fashion that lends itself to porting to Burst. As a single lasso path can result in multiple polygons, I will use Unity’s job system to mesh them concurrently.

That’s the lot for today. It’s been wonderful seeing the response to AOEs. Hopefully, it won’t be too long until I can get this into Beta.

Ciao.

[0] I am not yet satisfied with my approach, but it’s good enough to allow me to write the rest of the lasso implementation.

Disclaimer: This DevLog is from the perspective of one developer. It doesn’t reflect everything going on with the team

TaleSpire Dev Log 350

Heya folks!

It’s been a good week for feature work, so let’s get into it.

AOEs

With Ree back from vacation, he has been let loose on making AOEs. He’s made a more low-key, tintable variant of the ruler visual for the AOEs. So yes, we will have color options for AOEs at launch (this is landing in Beta in an hour or so). As well as using the keybinding, you can now right-click on a ruler and click a menu option to turn it into an AOE marker. He’s also made it so that the distance/angle indicators only appear when hovering the cursor over the AOE, which keeps the visual noise to a minimum.

You can see a little of it in action here (although the visuals are not finished yet).

The latest round of playtesting has revealed a couple of things we don’t like:

- The pixel picking for the AOE handles works great, but it’s too easy to get handles stuck inside tiles. So we are looking at augmenting the AOE picking with something that feels more forgiving. I’m currently prototyping this and will be continuing tomorrow.

- For rulers, it feels right to see the handles through walls. For AOEs, it rarely does. We need to change that behavior before release.

Group movement

As for me, I spent most of the last week staring at squiggly-line diagrams like these:

My goal has been to find a good way to divide them into simple polygons (see my last dev log for an overview of what we need this for).

I have an approach that is quick and works in many cases…

…but not all of them

The above gif shows two holes between the two polygons. Now, this isn’t uncommon. You are probably very familiar with what happens in graphics applications when you use lasso-select and self-intersect a bunch.

This behavior sucks for TaleSpire, though. Actions like this should have 100% predictable behavior, and you shouldn’t need to anticipate what self-intersection will do.

This means I need to have another go at the polygon decomposition. However, what we have is plenty good enough for continuing with our experiments, so I’m going to leave it for later.

The next step is to write the ear clipping code that creates the mesh from the simple polygon. I’m back working on AOEs for now, but I think I’ll be done there soon.

Wrapping up

Well, that is probably the best place to leave it for now.

Hope you are having a great week. Hope to see you in the next dev-log.

Peace.

Disclaimer: This DevLog is from the perspective of one developer. It doesn’t reflect everything going on with the team

TaleSpire Dev Log 349

Heya folks!

With AOEs in Beta, and Ree working on the visuals, I have turned to the next feature we want to ship: group-movement.

A good while back, Ree got this feature pretty much to the finish line. We were just missing a selection method that felt good. For our tests, we used the same rectangle selection tool from the building tools.

As much as you lovely folks tried to tell us the selection would be fine, we are still very stubborn about the feel of TaleSpire, and so you’ve had to wait. More recently, however, we think we’ve figured out how to make a pixel-perfect lasso tool that updates in real-time. So we are currently working to find out if it’s correct. The general idea is that we can render the on-screen creatures to the picking buffer, reusing the depth buffer from the scene render. By setting the depth test to “equals” and turning depth-write off, we early out of all hidden fragments (which helps with performance).
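The per-fragment rule can be modeled on the CPU in a few lines. This is a Python sketch of the logic only, with buffers as dictionaries and a hypothetical `render_pick_ids` function; on the GPU the same effect comes from the depth test state (equal) with depth writes disabled, and the rejection can happen before the fragment shader runs. It assumes the creature pass reproduces exactly the depth values the scene pass wrote, which is what makes an exact equality test usable, and that smaller depth means nearer the camera.

```python
def render_pick_ids(scene_depth, fragments):
    """Model of a picking pass drawn with depth test = equal and depth write off.

    scene_depth: {pixel: depth} left in the depth buffer by the main scene render.
    fragments:   iterable of (pixel, depth, creature_id) from drawing the creatures again.
    Returns {pixel: creature_id} for the fragments that survive the test.
    """
    pick_buffer = {}
    for pixel, depth, creature_id in fragments:
        # Equal test: only the surface the scene pass actually kept at this pixel
        # passes; a creature hidden behind nearer geometry is rejected.
        if scene_depth.get(pixel) == depth:
            pick_buffer[pixel] = creature_id
        # Depth write off: scene_depth is never modified, so the reused buffer stays valid.
    return pick_buffer


scene = {(0, 0): 0.25, (1, 0): 0.10}      # at pixel (1, 0) a wall is nearer the camera
creatures = [((0, 0), 0.25, 7), ((1, 0), 0.60, 9)]
print(render_pick_ids(scene, creatures))  # {(0, 0): 7}
```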

I’ve tested the rendering portion, and it’s doing what I expect, so I’ve turned to the lasso itself. We need to make a mesh that is like this…

…but filled in. This means triangulating an arbitrary polygon. Luckily, there are techniques for this, such as ear clipping (the linked video is ace), but many only work on “simple” polygons. Simple, in this case, means no “holes” and no self-intersection. There are algorithms out there that can handle these complex cases, but after drawing a lot of squiggles in my notebook, I think I can make something more efficient[0]. That is my goal for the next few days. After that, it should be mostly copying and modifying existing code to get the results back from the GPU.

That’s all from me for now. I’ll be back when there is more to show :)

Ciao.

Disclaimer: This DevLog is from the perspective of one developer. It doesn’t reflect everything going on with the team

[0] In the game, we are building the polygon incrementally over many frames and don’t have to handle “holes.” So I think a decomposition to simple polygons can also be spread over many frames. After that, the ear clipping approach can be applied.
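Here is one way a decomposition could be spread over frames, sketched in Python under my own assumptions; it is not necessarily the approach TaleSpire uses, and `LassoBuilder` and `seg_intersection` are hypothetical names. Whenever the newest edge crosses an earlier edge, the stretch of path between the crossing and the newest point is cut off as a closed loop, so the live path never self-intersects and each emitted loop is a simple polygon that ear clipping can consume (assuming points in general position; collinear overlaps are ignored).

```python
def seg_intersection(p1, p2, p3, p4):
    """If segment p1-p2 properly crosses p3-p4, return (t, point) where
    point = p1 + t * (p2 - p1); otherwise None. Parallel or collinear
    segments and touches at endpoints count as no crossing."""
    d1 = (p2[0] - p1[0], p2[1] - p1[1])
    d2 = (p4[0] - p3[0], p4[1] - p3[1])
    denom = d1[0] * d2[1] - d1[1] * d2[0]
    if denom == 0:
        return None
    w = (p3[0] - p1[0], p3[1] - p1[1])
    t = (w[0] * d2[1] - w[1] * d2[0]) / denom
    u = (w[0] * d1[1] - w[1] * d1[0]) / denom
    if 0 < t < 1 and 0 < u < 1:
        return t, (p1[0] + t * d1[0], p1[1] + t * d1[1])
    return None


class LassoBuilder:
    """Accepts lasso points one at a time (e.g. one per frame) and cuts off a
    closed loop whenever the newest edge crosses an earlier one."""

    def __init__(self):
        self.path = []   # open polyline still being drawn; never self-intersects
        self.loops = []  # finished simple polygons

    def add_point(self, p):
        while self.path:
            a = self.path[-1]
            best = None  # crossing nearest to a along the new edge a -> p
            # Skip the last edge: it shares endpoint a with the new edge.
            for i in range(len(self.path) - 2):
                hit = seg_intersection(a, p, self.path[i], self.path[i + 1])
                if hit is not None and (best is None or hit[0] < best[0]):
                    best = (hit[0], i, hit[1])
            if best is None:
                break
            _, i, x = best
            # Crossing point x, the points after edge i, then back to x form a loop.
            self.loops.append([x] + self.path[i + 1:])
            # Keep the path up to the crossing and keep testing the edge x -> p.
            self.path = self.path[:i + 1] + [x]
        self.path.append(p)

    def close(self):
        """Join the last point back to the first and return all loops."""
        if len(self.path) >= 3:
            self.add_point(self.path[0])  # the closing edge may cross the path too
            self.path.pop()               # drop the duplicated start point
            if len(self.path) >= 3:
                self.loops.append(self.path)
        self.path = []
        return self.loops


lasso = LassoBuilder()
for pt in [(0, 0), (2, 2), (2, 0), (0, 2)]:  # a bow-tie
    lasso.add_point(pt)
print(lasso.close())  # [[(1.0, 1.0), (2, 2), (2, 0)], [(0, 0), (1.0, 1.0), (0, 2)]]
```

Loops produced this way can still end up nested or overlapping, so this sketch does not settle how overlapping regions should combine.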

TaleSpire Dev Log 348

Heya folks,

Work on the AOE[0] feature has been going well. In fact, if all goes to plan, we should have a Beta in your hands by the weekend.

The goal for the Beta is to test the basic functionality. What we have is as follows:

- While using a ruler, a GM can press a key[1] to turn that ruler into an AOE

- Players and GMs can use the Tab key to view the names of the AOEs

- GMs can right-click on the handles of an AOE to bring up a menu that lets them delete or edit the AOE

- AOEs are saved in boards.

- They are also included in published boards.

For now, the visuals of the AOE are exactly the same as the rulers. This will probably change before the feature leaves Beta.

Yesterday was spent making a fast way to place and update the names above the AOEs. The result is a slightly generalized version of the code I use for bookmarks and creatures. Now that I have this, I’d like to go back and try replacing the creature and bookmark implementation with this new version, but that’s a task for another day.

As you can see, the names in the above videos are just dummy values. Today I’ll be adding the code for setting and changing names. I expect that to be a quick job, so getting the Beta out by Saturday seems feasible.

I guess we’ll know soon enough!

Until next time,

Peace.

Disclaimer: This DevLog is from the perspective of one developer. It doesn’t reflect everything going on with the team

[0] AOE = area of effect

[1] The keybinding is configurable in settings

TaleSpire Dev Log 346

Hey again folks,

As planned, today I focussed on saving/loading and getting the cone AOE to work correctly.

That worked.

Next up will be getting pixel picking working with the AOE handles. This will take a bit of work to do efficiently. I can’t just reuse the ruler code as that only needed to handle up to sixteen rulers at a time, whereas this should be made to handle thousands efficiently. I know what tools should allow me to do this, so I just need to find the form I like.

I’ll keep you posted :)

Disclaimer: This DevLog is from the perspective of one developer. So it doesn’t reflect everything going on with the team

TaleSpire Dev Log 345

Hey folks,

With things calming down after the releases, I’m back working on area-of-effect markers again.

Today has gone well. I’ve been playing around getting the basics working and then focused on the serialization code later in the afternoon.

This is a bit early to show, but you can see something coming together:

Tomorrow I’ll be testing the serialization code and looking at a bug in the cone AOE.

After that, I’ll start on the radial menu stuff. The big task there is hooking it up to the pixel-picking system in an efficient way. I expect that to take a day or so.

Alright, that’s all for tonight.

Ciao

Disclaimer: This DevLog is from the perspective of one developer. So it doesn’t reflect everything going on with the team

TaleSpire Dev Log 344

Heya folks. Today I’ve been working on the feature to allow region changing. The basics now work:

I’m still working on the code that handles what happens for players who are currently in the campaign. It technically works, but it’s ugly right now.

Seeya!

Disclaimer: This DevLog is from the perspective of one developer. So it doesn’t reflect everything going on with the team

TaleSpire Dev Log 343

Polymorph beta and Dev stream coming this week

Hey folks,

This week polymorph is going to become available for testing. We are rolling this feature out differently than usual, so I thought it would be good to explain the process and why we are doing it.

Also, now that I have moved house, I finally have decent internet speeds again, so it’s time to bring back developer streams. Details are towards the end of this log.

Polymorph Beta

The short version is that we will be making polymorph available on a beta branch of TaleSpire so that the brave and reckless can try it out and report bugs before we push it out to everyone else.

We’ve wanted to start doing this as it allows us to catch critical bugs without doing damage to existing creations.

-AND-

We also know that even though they are unsupported, there are folks out there hacking the game to make mods. We’d like to give them a bit of time to update their work to fix anything that isn’t compatible with changes to the game.

To this end, the polymorph beta will be opt-in and take place on an entirely different backend from the one you are currently playing on. And that backend will only last for the duration of the testing. That means that when we release the feature to everyone, the test backend will be shut down, and everything that was on that branch will be cleared.

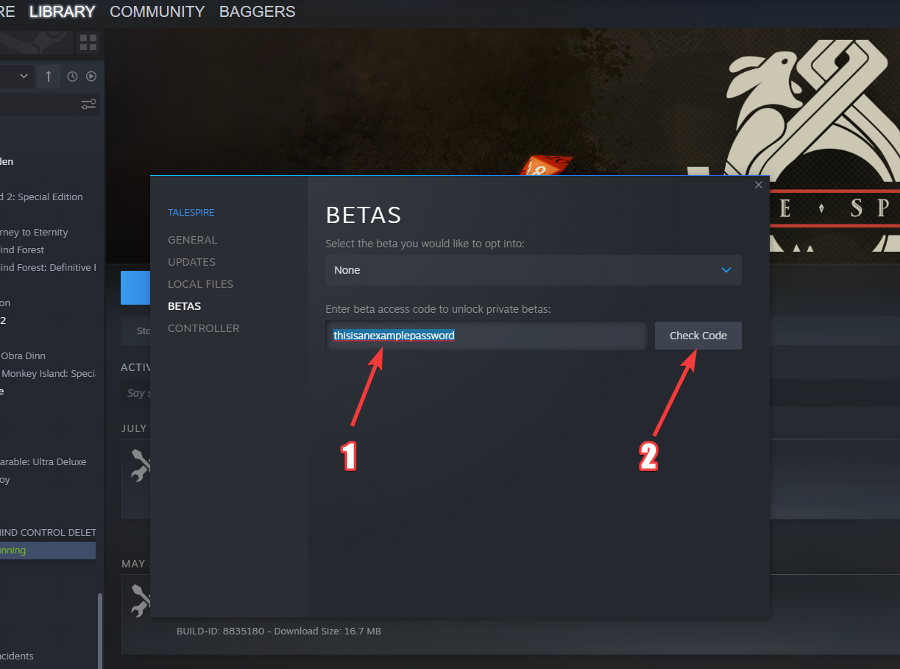

The process to opt-in will be something like this:

- You will open the Steam beta properties for TaleSpire

- You’ll enter a password we give out later this week

- You’ll be given the option to opt into the beta

- If you do, the game will update, and then you will be on our testing build and backend

Opting out is as simple as setting the beta back to “None.”

We’ll have full instructions and warnings out on the day.

Dev Stream

Yup, we are finally doing a dev stream again. We will be live on twitch.tv/bouncyrock this Thursday at 11pm Norway time (click the link above to see the time in your timezone).

This will be a general catchup. As usual, you’ll have the opportunity to fire questions at us too.

That’s all for today

That’s all the news for today. There has been plenty of code and art work going on, but we can save that for another dev-log.

Ciao!

Disclaimer: This DevLog is from the perspective of one developer. So it doesn’t reflect everything going on with the team

TaleSpire Dev Log 342

Heya folks,

Last night I pushed a server update containing code needed for polymorph, HeroForge pack grouping, and tracking Photon errors. Aside from one hiccup, it all went well.

The last few days have been a mix. My Sunday and Monday were mainly working on moving house stuff. I’ve got a little cabin thing I’m using as an office, so I dug a trench and laid conduit to get internet out there. That is working great, so I’ll need to work out my streaming setup again so we can do some catchups.

I’ve just merged a pile of polymorph work into master. It’s slowly getting more stable, although I still have some pressing bugs to fix before we can push it out.

I’ve got to head out now to get a vaccine for tick-borne encephalitis, as the area I’m in is pretty foresty. When I get back, I’ll be working on HeroForge. I need to focus on the biggest pain points there so we can get it out of beta status. I’d like to get file hash validation done, and then I’ll see how far I can get on texture rescaling today[0].

The next week or so will still be a bit hectic for me (due to life stuff), but everything is going in a great direction.

See you around.

Disclaimer: This DevLog is from the perspective of one developer. So it doesn’t reflect everything going on with the team

[0] Currently, our asset file sizes are way bigger than they need to be due to working around a Unity bug where their texture quality setting breaks DXT textures. I’m hoping I can rescale the textures so that sizes at each mip level will be a multiple of four (required for DXT) regardless of the texture scaling their quality setting uses.
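The arithmetic behind that rescale can be sketched as follows. This assumes the quality setting can drop up to three mip levels (Unity’s full, half, quarter and eighth resolution options) and uses a hypothetical `dxt_safe_size` helper applied to each texture dimension. If a size and all of its first k halvings must be multiples of four, every halving has to be exact, so the size must be a multiple of 4·2^k; the helper rounds up to the next such multiple.

```python
def dxt_safe_size(size, dropped_levels=3):
    """Smallest value >= size such that size, size/2, ..., size/2**dropped_levels
    are all whole multiples of four (the DXT block size)."""
    step = 4 * (2 ** dropped_levels)
    return -(-size // step) * step  # integer ceiling division, then scale back up


s = dxt_safe_size(600)
print(s)                               # 608
print([s >> j for j in range(4)])      # [608, 304, 152, 76]
```

Rounding up rather than down keeps the extra resampling from throwing away detail, at the cost of a slightly larger top mip.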

TaleSpire Dev Log 341

Heya folks.

I’ve now packed up my computer, ready for the move, but I did get some stuff done before then.

I’ve made the first pass at including groups in the HeroForge browser.

I’ve merged the polymorph UI branch into my dev branch and am currently working on bugs. Currently, I’m updating the code to push scale along with the morph data so that players get the correct result even if the asset hasn’t finished loading yet.

The scale work required back-end updates to make unique-creatures handle this correctly. That is done and is looking good so far in testing.

I think that’s the interesting stuff for now. I should be set up for work by Monday, so I’ll see you then.

Ciao

Disclaimer: This DevLog is from the perspective of one developer. It doesn’t reflect everything going on with the team