From the Burrow

TaleSpire Dev Log 253

Evening all.

It’s been a reasonably productive day for me today:

- I fixed two bugs in the light layout code

- Fixed pasting of slabs from the beta [0]

- Fixed a bug in the shader setup code

- Enabled use of the low poly meshes in line-of-sight (LoS) and fog-of-war (FoW)

- Started writing the server-side portion of the text chat

I have not made any UI for chat in TaleSpire yet. My focus has been on being able to send messages to different groups of players. Currently supported are:

- all players in the campaign

- all players in the board

- specific player/s

- all GMs
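
As a rough illustration of the four targeting modes listed above, here is a minimal Python sketch. It is not the actual server code: the `Target` enum, the `Player` record, and the `recipients` function are all hypothetical names made up for this example.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Target(Enum):
    CAMPAIGN = auto()  # all players in the campaign
    BOARD = auto()     # all players in the sender's board
    PLAYERS = auto()   # specific player/s
    GMS = auto()       # all GMs


@dataclass(frozen=True)
class Player:
    name: str
    board: str
    is_gm: bool = False


def recipients(players, target, sender, names=()):
    """Return the names of the players who should receive a message."""
    if target is Target.CAMPAIGN:
        chosen = players
    elif target is Target.BOARD:
        chosen = [p for p in players if p.board == sender.board]
    elif target is Target.PLAYERS:
        chosen = [p for p in players if p.name in names]
    else:  # Target.GMS
        chosen = [p for p in players if p.is_gm]
    return [p.name for p in chosen]


campaign = [
    Player("ann", "crypt", is_gm=True),
    Player("bob", "crypt"),
    Player("cat", "tavern"),
]
print(recipients(campaign, Target.BOARD, sender=campaign[1]))
```

Keeping the target as a small enum means adding another group later only needs one more branch.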

Next in chat, I need to look at attachments. These will let you send some information along with the text. Currently, planned attachment kinds are:

- a specific position in the board

- a particular creature in the board

- a dice roll

We will be expanding these, but they feel like a nice place to start and should help with the flow of play in play sessions.

We’ll show you more of the UI as it happens.

One cute thing I added for debugging is the ability to render using the low-poly occluder meshes instead of the high-poly ones. Here is an example of that in action. You can see that, in this example, the decimator has removed too much detail from the tiles. This is handy for debugging issues in FoW and LoS.

Alright, that’s all for today,

Seeya

[0] The new format is a little different, and I’ll publish it a bit closer to shipping this branch

TaleSpire Dev Log 252

Heya folks! Yesterday I got back from working over at Ree’s place, which was, as always, super productive.

Between us, we have:

- props merged in

- copy/paste working correctly with combinations of props/tiles together

- Fixed parts of the UI for configuring keybindings

- More work on the internationalization integration

- Progress on the tool hint UI and video tutorial system

- Updated all shaders to play nice with the new batching system

- Fixed some bugs in the math behind animations and layout of static colliders

- Rewritten the cut-volume rendering

- Fixed a bug that was stopping us from spinning up staging servers

- Generated low-poly meshes for use in shadows, line-of-sight, and fog-of-war

That last one is especially fun. Ree did a lot of excellent work in TaleWeaver hooking up Unity’s LOD mesh generator (IIRC, it was AutoLod). The goal is to allow creators to generate or provide the low poly mesh used for occlusion. This allows TaleSpire to reduce the number of polygons processed when updating line-of-sight, fog-of-war, or when rendering shadows.

Most of the above still need work, but they are in a good place. For example, the cut shader has a visual glitch where the cut region’s position lags behind the tile position during camera movement. However, that shouldn’t be too hard to wrangle into working.

Today I moved the rest of my dev setup to the new house. Lady luck decided it was going too well and put a crack in my windscreen, so I’m gonna have to get that replaced asap.

The next bug for me to look at is probably one resulting in lights being positioned incorrectly.

Anyhoo that’s the lot for tonight.

Seeya!

TaleSpire Dev Log 251

Heya peoples! Today was my first day back working after the Christmas break, and my goal was to add prop support for copy/paste.

Previously I was lazy when implementing copy/paste and stored the bounding box for every tile. This data was readily available in the board’s data, so copying it out was trivial, as was writing it back on paste. However, this is no good when artists need to change an existing asset (for example, to fix a mistake). Fixing this means looking up the dimensions and rotating them on paste, but that is perfectly reasonable.
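
To make "looking up the dimensions and rotating them on paste" a little more concrete, here is a minimal Python sketch. It assumes rotation happens only around the vertical (y) axis, and the `ASSET_SIZES` table and asset names are hypothetical stand-ins for whatever the asset database really provides.

```python
import math

# Hypothetical lookup table: asset id -> (width, height, depth) of the unrotated asset.
ASSET_SIZES = {
    "stone_floor": (2.0, 0.5, 2.0),
    "long_wall": (4.0, 2.0, 0.5),
}


def pasted_bounds_size(asset_id, degrees):
    """Size of the axis-aligned box around an asset rotated about the y axis."""
    w, h, d = ASSET_SIZES[asset_id]
    c = abs(math.cos(math.radians(degrees)))
    s = abs(math.sin(math.radians(degrees)))
    # Width and depth mix as the asset turns; height is unaffected.
    return (w * c + d * s, h, w * s + d * c)


print(pasted_bounds_size("long_wall", 0))
print(pasted_bounds_size("long_wall", 90))
```

Because the size is looked up at paste time, a later fix to the asset changes the pasted bounds automatically, which is the point of no longer storing the box in the slab.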

Looking at this issue reminded me that we have the exact same problem with boards. As we are looking at this, we should probably fix this for board serialization too. This does make saving a board much more CPU intensive, however. The beauty of the old approach was that we could just blit large chunks of data straight into the save data; now, we have to transform some data on save. We will almost certainly need to jobify the serialize code soon[1].

The good news is that, in both the board and slab formats, we will be removing 12 bytes of data per tile[0]. In fact, as we have to transform data when serializing the board, why not make the positions zone-local too? That means we can change the position from a float3 to a short3 and save an additional 6 bytes per tile[2].

A chunk of today was spent umm’ing and ah’ing over the above details and different options. I then got stuck into updating the board serialize code. Tomorrow will be a late start as I have an engineer installing internet at my new apartment at the beginning of the day. After that, I hope to get cracking on the rest of this.

Back soon with more updates.

Ciao

[0] sizeof(float3) => 12

[1] Or perhaps burst compile it and run it from the main thread.

[2] technically we only really need ceil(log((zoneSize * positionResolution), 2)) => ceil(log(16 * 100, 2)) => 11 bits for each position component, which would mean 33 bits instead of 48 for the position. However short3 is easier to work with so will be fine for now.
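
The arithmetic in footnote [2] and the float3 to short3 saving can be checked with a short Python sketch. The zone size of 16 and resolution of 100 come from the footnote; the function names and the use of `struct` to stand in for the packed data are assumptions made for illustration only.

```python
import math
import struct

ZONE_SIZE = 16             # zone side length, from footnote [2]
POSITION_RESOLUTION = 100  # position steps per unit, from footnote [2]


def bits_per_component():
    """Minimum bits needed to address every position step inside a zone."""
    return math.ceil(math.log2(ZONE_SIZE * POSITION_RESOLUTION))


def pack_zone_local(position, zone_origin):
    """Quantize a world position relative to its zone into three 16-bit ints."""
    local = [round((p - o) * POSITION_RESOLUTION)
             for p, o in zip(position, zone_origin)]
    return struct.pack("<3h", *local)  # 3 x int16 = 6 bytes


world = (17.25, 3.5, 40.0)
packed = pack_zone_local(world, zone_origin=(16.0, 0.0, 32.0))
print(bits_per_component(), len(packed), len(struct.pack("<3f", *world)))
```

The largest zone-local value is 16 * 100 = 1600, which fits comfortably in a signed 16-bit integer.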

TaleSpire Dev Log 250

Heya folks, I’ve got a beer in hand, and the kitchen is full of the smell of pinnekjøtt, so now feels like an excellent time to write up work from the days before Christmas.

This last week my focus has been on moving (still) and props.

As mentioned before, I’ve been slowly moving things to my new place, but things like the sofa and bed wouldn’t fit in our little car, so the 18th was the day to move those. I rented a van, and we hoofed all the big stuff to the new place. The old flat is still where I’m coding from as we don’t have our own internet connection at the new place yet, so I’m traveling back and forth a bunch.

Anyhoo you are here for news on the game, and the props stuff has been going well. Ree merged all his experiments onto the main dev branch, and I’ve been hooking it into the board format. The experiments showed that the only big change to the per-placeable[0] data is how we handle rotation. For tiles, we only needed four points of rotation, but props need 24. We tested free rotation, but it didn’t feel as good as rotating in 15-degree steps.

The good news is that we had been using a byte for the rotation even before, so we had plenty of room for the new approach. We use 5 bits for rotation and have the other 3 bits available for flags.
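
As a sketch of how 24 rotation steps and 3 flag bits can share one byte, here is a minimal Python example. Which bits hold the rotation (the low five here) is an assumption, as are the function names; the post only says that 5 bits are used for rotation and 3 remain for flags.

```python
ROTATION_BITS = 5
ROTATION_MASK = (1 << ROTATION_BITS) - 1  # 0b00011111
STEP_DEGREES = 15
STEPS = 360 // STEP_DEGREES               # 24 steps, which fits in 5 bits (max 32)


def pack(rotation_step, flags):
    """Pack a rotation step (0..23) and 3 flag bits into a single byte."""
    if not 0 <= rotation_step < STEPS:
        raise ValueError("rotation step out of range")
    if not 0 <= flags < 8:
        raise ValueError("only 3 flag bits are available")
    return (flags << ROTATION_BITS) | rotation_step


def unpack(byte):
    """Return (rotation_step, flags) from a packed byte."""
    return byte & ROTATION_MASK, byte >> ROTATION_BITS


packed = pack(rotation_step=6, flags=0b101)
step, flags = unpack(packed)
print(packed, step * STEP_DEGREES, flags)
```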

I also wanted to store whether the placeable was a tile or prop in the board data as we need this when batching. Looking up the asset each time seemed wasteful. We don’t need this per tile, so we added it to the layouts. We again use part of a byte field and leave the remaining bits for flags. [1]

There are a few cases we need to take care of:

- Tiles and Props having different origins

- Pasting slabs that contain placeables which the user does not have

- Changes to the size of the tiles or props

Let’s take these in order.

1 - Tiles and Props having different origins

All tiles use the bottom left corner as their origin. Props use their center of rotation. The board representation stores the AABB for the placeable, and so, when batching, we need to transform Tiles and Props differently.

2 - Pasting slabs that contain placeables which the user does not have

This is more likely to happen in the future when modding is prevalent, but we want to be able to handle this case somewhat gracefully. As the AABB depends on the kind of placeable, we need to fix up the AABBs once we have the correct asset pack. We do this in a job when the board loads.

3 - Changes to the size of the tiles or props

This is a similar problem to #2. We need to handle changes to the tiles/props and do something reasonable when loading the boards. This one is something I’m still musing over.

Progress

With a couple of days of work, I started being able to place props and batch them correctly.

I spotted a bug in the colliders of some static placeables and tracked the mistake down to TaleWeaver.

With static props looking like they were going in a great direction, I moved over to set up all doors, chests, hatches, etc., with the new Spaghet scripts. This took a while as the TaleWeaver script editor is in a shockingly buggy state right now. However, I was able to get them fixed up and back into TaleSpire. I saw that some of the items have some layout issues, so I guess I have more cases where I’m miscalculating the orientation. I’ll look at that after Christmas.

In all, this is going very well. It was a real joy to see how quickly all of Ree’s prop work could be hooked up to the existing system.

After the break, I will start by getting copy/paste to work with props, and then hopefully, we’ll just need to clear up some bugs and UI before it’s ready to be tested.

Hope you all have a lovely break.

God Jul, Merry Christmas, and peace to the lot of ya!

[0] A ‘placeable’ is a tile or a prop

[1] I’m going to review this later as it may be that we need to look up the placeable’s data during batching anyway and so storing this doesn’t speed up anything.

TaleSpire Dev Log 249

Good evening folks,

I’m happy to report that the new lighting system for tiles and props is working! We have now finally moved away from GameObjects for the board representation.

As it looks identical to the current lights, I’m not posting a clip, but I’m still happy that that bit is done[0]. In time, we will want to revisit this code as there is still more room for performance improvements. However, there are bigger fish to fry for now.

After finishing that, I squashed a simple bug in the physics API where I was normalizing a zero-length vector (woops :P).

Next, I’ll probably be looking at the data representation for props. I will be a bit distracted though, as I’m moving house and in the next few days, I need to get a lot done.

I think that’s all I have to report for today.

Seeya around :)

[0] Technically I still haven’t worked out Unity’s approach to tell if a camera is inside the volume of a spot light. This means my implementation is a little incorrect, but it’s not going to be an issue for a while.

TaleSpire Dev Log 248

Today I’ve been working on the new light system.

The basics of this are that I am porting our previous experiments allowing lights without GameObjects to our new branch and hooking it into the batching code. There are, of course, lots of details to work out when trying to make something shippable.

As usual, we need to think a little about performance. There are often many lights on screen, and each light in Unity takes one draw call (when not casting shadows, which these don’t). Each frame, we need to write each light into a CommandBuffer[0]. With Unity’s approach to deferred lights, a light may be rendered with one of two shaders based on whether the camera is inside the light’s volume or not. Two matrices need to be provided, and the light color seems to need to be gamma corrected[1]. As CommandBuffers can only be updated on the main thread, I want to do as little of the calculation there as possible. Instead, we will calculate these values in the jobs we have handling batching, and then the only work on the main thread is to read these arrays and make the calls to the CommandBuffer. This fits well as the batching jobs already calculate the lights’ positions and have access to all the data needed to do the rest.

If all goes well, I hope to see some lights working tomorrow.

Seeya then.

[0] we need to use a command buffer as the light mesh has to be rendered at a specific point in the pipeline, specifically CameraEvent.AfterLighting

[1] This one was kind of interesting. When setting the intensity parameter of the light, the color passed to the shader by Unity changed. In my test, the original color was white, so the uploaded values were float4(1, 1, 1, 1), where the w component was the intensity. However, when I changed the intensity to 1.234, the value was float4(1.588157, 1.588157, 1.588157, 1.234). It’s not uncommon for color values to be premultiplied before upload (see premultiplied alpha), so I just made a guess that it might be gamma correction. To do this, we raise to the power of 2.2. One (white) raised to the power of anything will be 1. So instead, we try multiplying by the intensity and raising that to the power of 2.2. Doing that gives us 1.58815670083..etc bingo!
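
The guess in footnote [1] is easy to reproduce. The Python sketch below only restates that guess (multiply each color channel by the intensity, raise to the power of 2.2, keep the intensity in w); the function name is hypothetical and this is not a statement about what Unity does internally.

```python
GAMMA = 2.2  # exponent guessed in footnote [1]


def uploaded_light_color(color, intensity):
    """Return (r, g, b, w) as the footnote's guess predicts the shader sees it."""
    r, g, b = color
    scaled = tuple((channel * intensity) ** GAMMA for channel in (r, g, b))
    return scaled + (intensity,)


print(uploaded_light_color((1.0, 1.0, 1.0), 1.0))
print(uploaded_light_color((1.0, 1.0, 1.0), 1.234))
```

With white at intensity 1 every component stays at 1, and at intensity 1.234 the color channels come out at roughly 1.588157, matching the values observed in the footnote.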

TaleSpire Dev Log 247

‘Allo all!

I’m on a high right now as I just fixed a bug that’s been worrying us for a while.

TLDR: On our new tech branch of TaleSpire, physics is now stable at low framerates

Now for the director’s cut!

We have been working hard for a while on a big rewrite of the codebase, which gives significant performance improvements. Amongst the changes was a switch to Unity’s new physics engine (DotsPhysics). For the dynamic objects in the game, we still wanted to use GameObjects, so I made a wrapper around DotsPhysics, which made this feel very similar to the old system.

It’s been working well enough since the last batch of fixes; however, we had noticed that it got very unstable at low framerates. I was nervous about this as, if it wasn’t my fault, I’d have no idea how to fix it (Spoiler: It was totally my fault :P)

It couldn’t be avoided for much longer, though, so for the last couple of days, I’ve been looking into it. Yesterday was exceedingly painful. I read and re-read the integration code Unity use for their ECS, and simply couldn’t find anywhere where we should have been messing up. I tried a slew of things with no results. That day ended on a low note for sure.

Today however, I started fresh. For the sake of the explanation, let’s assume the code looks roughly like this:

    Update()
    {
        RunPhysics();
    }

    RunPhysics()
    {
        LoadDataIntoPhysicsEngine();
        while(haveMoreStepsToRun)
        {
            RunPhysicsStep();
        }
        ReadDataBackOutOfPhysicsEngine();
    }

I had noticed yesterday that the first physics step of every frame worked fine. So I knew it had to be related to how I was handling the fixed timestep.

So I changed the code to look like this.

    Update()
    {
        RunPhysics();
        RunPhysics();
    }

    RunPhysics()
    {
        LoadDataIntoPhysicsEngine();
        // while(haveMoreStepsToRun)
        {
            RunPhysicsStep();
        }
        ReadDataBackOutOfPhysicsEngine();
    }

And lo and behold, it was still stable. The speed was totally incorrect, of course, but it was clear that RunPhysicsStep was missing something that was handled in the setup or teardown code.

I made small change after small change and finally was able to isolate the issue. One big difference between my code and how Unity was doing things was that they read out the data from the physics engine at the end of each step and then read it back in at the start of each step. This is desirable for them, but it was not something I was doing as for our use-case, it was just overhead. However, what I had forgotten was that when we load the data into the physics engine’s data structures, there are two places you have to put the transform for the bodies being simulated. After each physics step, only one has the updated transform[0], so I needed to make sure that the transform was written back to the other.
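
The shape of that bug can be shown with a toy model in Python. This is only an illustration under loud assumptions: `ToyEngine` is not DotsPhysics, its two position fields merely stand in for the "two places" the transform lives, and the 60Hz step size is made up.

```python
from dataclasses import dataclass

FIXED_DT = 1.0 / 60.0  # assumed fixed timestep


@dataclass
class ToyEngine:
    """Toy stand-in that keeps a body's position in two places."""
    input_pos: float = 0.0   # read at the start of every step
    output_pos: float = 0.0  # the only copy updated by a step
    velocity: float = 1.0

    def load(self, pos):
        self.input_pos = pos
        self.output_pos = pos

    def step(self, dt):
        self.output_pos = self.input_pos + self.velocity * dt

    def sync(self):
        # The fix: write the updated transform back to the other copy.
        self.input_pos = self.output_pos


def run_physics(engine, pos, frame_dt, sync_between_steps=True):
    """Run as many fixed steps as fit in frame_dt and return the new position."""
    remaining = frame_dt
    engine.load(pos)
    while remaining >= FIXED_DT:
        engine.step(FIXED_DT)
        if sync_between_steps:
            engine.sync()
        remaining -= FIXED_DT
    return engine.output_pos


slow_frame = 0.055  # a slow frame needing three fixed steps
print(run_physics(ToyEngine(), 0.0, slow_frame))
print(run_physics(ToyEngine(), 0.0, slow_frame, sync_between_steps=False))
```

Without the sync, every step after the first starts from stale data, so only the first step of a frame has any effect. That matches the observation that the first physics step of each frame was fine.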

And that was it! Suddenly the simulation is solid as a rock at 20fps, and I could breathe a sigh of relief.

I’m now going to do some performance tests again, tweak my fixed-timestep implementation, and then move on to rewriting our board representation to use our new light implementation.

Hope you have a great day, Seeya!

[0] This makes sense when you look at it and is not an issue with how Unity made this.

TaleSpire Dev Log 246

Hey again folks.

Progress has been good on the new mesher for the fog of war. The new mesh is much more regular, which should help if we use vertex animation on the mesh.

In the clip below, you will spot two significant issues (Ignoring the shader as we haven’t started on that yet):

- The seams in the fog at the edges of zones (16x16x16 world-space chunks)

- The lighting seems weird

The lighting oddness is just that, for now, all the normals are set to straight up. I’m going to make them per-face after this. I could compute the normal per-vertex, but as that is slightly more work, I’m holding off until we have some ideas about the visuals.

The seams are an artifact of marching cubes and keeping zones separate from each other. As much as possible, we want to keep zones independent from other zones, and in this case, it means we don’t know if the neighboring zones have fog or not. This, in turn, means we assume there is none so that marching-cubes generates a face. However, marching-cubes can’t do sharp corners, so you get this chamfer.

I’m not sure if we can fix this by modifying the geometry generated on the edges. We’ll have to see.

This morning I was fighting with Unity, trying to get it not to look for things to cull in cases when we are handling the culling. Without moving to their new “scriptable rendering pipelines” (SRP), there doesn’t seem to be a way to do it.

I will also look at the shadow culling jobs as I think the overhead from dispatching them might be larger than the time the job is taking [0]. In that case, I could Burst compile the culling methods and call them from the main thread instead. It’s a trade-off, but it might work.

That’s all for now. Hopefully, I’ll be back tomorrow with some new data :)

Peace.

[0] The profiler seems to suggest this is happening, but I’m not sure how much of the overhead is avoidable and how much is just part of the BatchRendererGroup’s own code.

TaleSpire Dev Log 245

Hi folks. I’ve been a bit quiet recently for a couple of reasons, but I doubt they are that intriguing, so do feel free to skip this next paragraph.

The first reason is easy to explain, I’m moving house, which has been stealing my time. The second reason is more personal. I go through slow swings between needing to produce and needing to absorb content. Recently I switched over to the ‘absorb’ phase, but stupidly I didn’t notice, which meant I was getting very frustrated with my productivity. I don’t know why this blindsided me as it’s happened a fair few times before, but that’s for me to muse over, I guess. The upshot of this was I didn’t want to hang out much as I was not happy with what I was producing. However, this last week, I’ve been coming back out of that funk, and work has been going very well.

Three days ago, I finally got the new cubemap capture system working. This had a lot of false starts as I explored implementing it with CommandBuffers. We needed a custom approach for this because we handle all the culling and rendering ourselves. At first, I thought we could use a replacement shader and that the BatchRendererGroups would render to the camera, but of course, we had set those up in ShadowsOnly mode, so that didn’t work. A while back, Ree and I experimented with shadow-only shaders, which could be overridden with replacement shaders; however, the performance hit was significant.

All this meant I needed to write a culling compute shader to fill per-cube-face batches and then to dispatch them. It took a couple of tries to get something I was happy with. What I have should be adequate for rendering the limited regions we need for the character vision.

With that working, I merged my old fog-of-war (FoW) experiments and got them working with the new system. It’s not network synced yet, but that is on the todo list soon.

Over the last couple of days, I’ve been rewriting the line-of-sight (LoS) system to use the cubemap capture system results. The cubemaps now only store the distance to the nearest occluder, and creatures are not occluders. This means you can now see a gnome behind a giant, for example. It also means creatures don’t block FoW reveal, which was a bit annoying in my experience.

The way our LoS works is to render all creatures to a cubemap using a shader that discards all the fragments. This sounds pointless, but what we do instead is to compute the direction from the camera to the fragment and use that direction to look up the distance to the nearest occluder from the cubemap made by the capture system. We can then see if the creature fragment is closer to the camera than the occluder and if so, we record that creature’s id in a buffer. We use a compute shader to collate that buffer’s contents, and we read it back to the CPU-side asynchronously. What we end up with is a buffer of visible creature ids.
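
The depth comparison at the heart of this can be sketched on the CPU in Python. This is a heavily simplified stand-in, not the shader: the "cubemap" here has a single distance value per face, creatures are reduced to sample points, and every name is hypothetical.

```python
import math


def face_of(direction):
    """Pick the cube face a direction points at (its dominant axis)."""
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        return "+x" if x > 0 else "-x"
    if ay >= az:
        return "+y" if y > 0 else "-y"
    return "+z" if z > 0 else "-z"


def visible_creatures(viewer, occluder_distance, creature_points):
    """Collect ids of creatures with a sample point nearer than the occluder."""
    visible = set()
    for creature_id, point in creature_points:
        offset = tuple(p - v for p, v in zip(point, viewer))
        distance = math.sqrt(sum(c * c for c in offset))
        # Look up the nearest occluder in the direction of this point.
        if distance < occluder_distance[face_of(offset)]:
            visible.add(creature_id)
    return sorted(visible)


# One-texel-per-face "cubemap": a wall 5 units away along +x, nothing elsewhere.
depth = {face: math.inf for face in ("+x", "-x", "+y", "-y", "+z", "-z")}
depth["+x"] = 5.0
points = [(1, (3.0, 0.0, 0.0)), (2, (8.0, 0.0, 0.0)), (3, (0.0, 0.0, 4.0))]
print(visible_creatures((0.0, 0.0, 0.0), depth, points))
```

Because the occluder distances are only read here, the same captured data can be reused for any number of visibility checks while the viewer stays put.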

The cool thing with the new system is that we have separated the LoS step from the view cubemap capture. This lets us recompute the LoS multiple times without having to update the view. This happens a lot when the GM or other players are moving their creatures, but yours is stationary.

As a 512x512 cubemap is not an insignificant amount of GPU-memory, I experimented with different schemes of when to free the cubemaps. There are a few tradeoffs:

- Capturing the view is relatively expensive, so you want to do it as infrequently as possible. Ideally, only when the board changes or when the creature, whose view it is, moves.

- If a GM right clicks to check a specific creature’s LoS, it’s highly likely they will do it again. So we should keep the data around.

- However, it’s also likely that they will check the LoS of a few different creatures. So we have a risk of making too many cubemaps.

The approach I have now will hand out the cubemaps but, assuming they don’t become invalid for other reasons, they will expire after some time. Also, if we have more than sixteen cubemaps alive, the manager will mark them for disposal. The amount of time given is less for creatures who are not members of a party[0].
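
A policy like this could be sketched as follows in Python. The limit of sixteen comes from the paragraph above; the two timeout values, the class and method names, and the choice to dispose of the entries closest to expiring when over the limit are all assumptions for the sake of the example.

```python
MAX_ALIVE = 16        # limit mentioned above
PARTY_TTL = 30.0      # seconds; hypothetical value
NON_PARTY_TTL = 10.0  # seconds; hypothetical, shorter for non-party creatures


class CubemapCache:
    def __init__(self):
        self._expires_at = {}  # creature id -> time at which its cubemap expires

    def request(self, creature_id, now, in_party):
        """Hand out (or refresh) the cubemap for a creature."""
        ttl = PARTY_TTL if in_party else NON_PARTY_TTL
        self._expires_at[creature_id] = now + ttl

    def collect(self, now):
        """Return the creature ids whose cubemaps should be disposed of."""
        expired = [c for c, t in self._expires_at.items() if t <= now]
        for c in expired:
            del self._expires_at[c]
        # Assumption: when over the limit, drop the ones closest to expiring.
        by_expiry = sorted(self._expires_at, key=self._expires_at.get)
        overflow = by_expiry[:max(0, len(by_expiry) - MAX_ALIVE)]
        for c in overflow:
            del self._expires_at[c]
        return expired + overflow


cache = CubemapCache()
for creature in range(17):
    cache.request(creature, now=float(creature), in_party=True)
print(cache.collect(now=17.0))
```

Refreshing the expiry on every request keeps the cubemap alive for a GM who checks the same creature repeatedly, while the cap bounds GPU memory when they check many different ones.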

There are a few other details, but that was the meat of it :)

I am now writing a new mesher for the FoW. The Minecraft style mesher we have been using was fine, but it almost does too good a job at reducing the polycount. To experiment with the visuals, we want a finer mesh. As marching-cubes is easy to implement, I’m going to use that for now[1].

I should have that done tomorrow.

Seeya then!

[0] Parties are a concept that will be getting a lot of attention before the Early Access release. LoS and FoW are both party-wide, so you will see what other party members see. I’ll talk more about this as we implement it.

[1] Surface-nets would probably be another good option.

TaleSpire Dev Log 243

Hi!

On Sunday, I was reading about Unity CommandBuffers as I need to rewrite the code that handles the cutaway effect. At the bottom of the page was a link to this blog post where something caught my eye. They were talking about custom deferred lights. This was exciting as lights are the one place where tiles still need to use Unity GameObjects.

In our quest to improve TaleSpire’s performance, GameObjects have long been our nemesis. They are (relatively) slow to spawn, use managed memory, and are only interactable from the main thread. To avoid lag, we have to spread light spawning over multiple frames and work pretty hard to apply updated transforms to the GameObjects from the Spaghet scripts that can modify them.

If we had a way to draw lights without GameObjects, we could significantly simplify a lot of code. The temptation was too great, so I’ve spent the whole day working out how to do this.

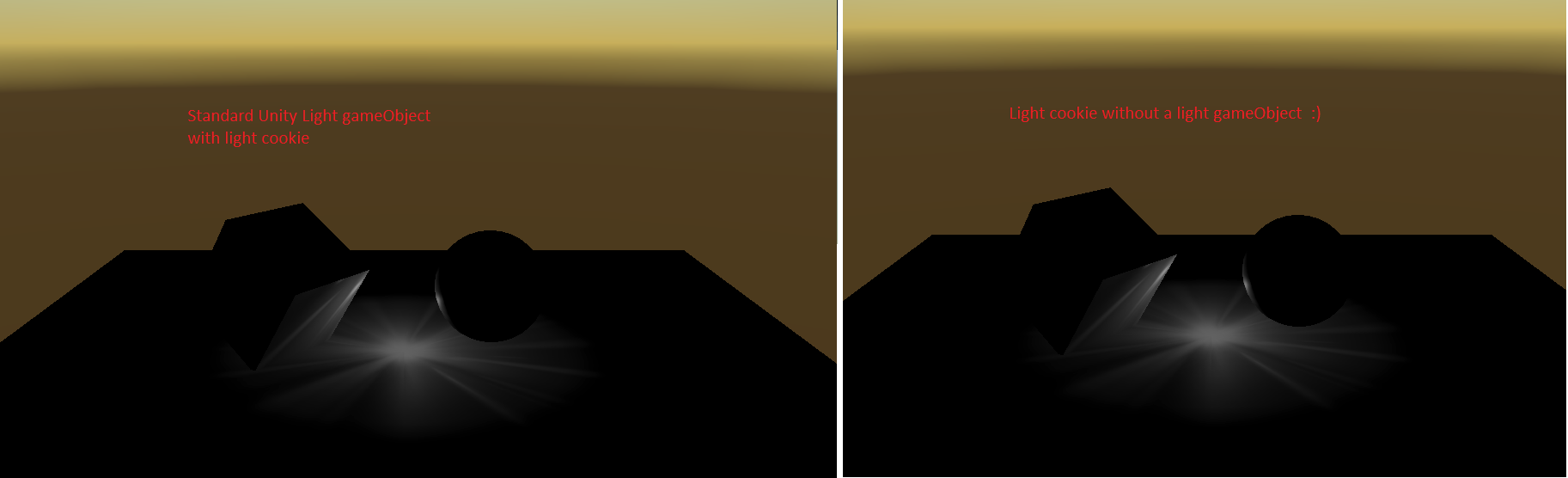

The good news is that it worked. Here is an ugly test.

We use a standard Unity light on the left, and on the right, the new approach. They should look the same. The reason for the pattern in the light is that I knew that we needed to support light cookies, and so I included that in the test. The non-cookie case should be more straightforward.

Ok, back to the blog. The author has included a fantastic example project, which was the first concrete proof that this should be possible. I had one extra complicating factor, however: I didn’t want our lights to look any different from the Unity ones. To me, this meant trying to drive the built-in Unity shaders somehow. Due to my inexperience with this, I had a bunch of false starts, but I eventually understood the general flow of the rendering process. There is a good, though terse, summary of the approach here.

Things were looking promising but, try as I might, I could never get the built-in shaders to have the ZWrite or Stencil settings I needed. After many failed experiments, I simply copied the bits I needed into a separate shader to apply the flags myself. This process was easier said than done, and a lot of time was spent in the frame-debugger.

It’s all looking very promising, but there is still time for it to all go wrong :P

The next step is to make sure this works with multiple lights. Then I can extend this to support spot-lights and more of the standard point light settings. Once those are done, we will change how TaleWeaver packs light data, and then finally, we can refactor the light batch in TaleSpire to use this new system. I wonder if we can get some idea of performance without doing all of this… we shall see.

Alright, that’s all for today. Seeya around!