From the Burrow

TaleSpire Dev Log 289

Well, naturally, the biggest excitement in my day has been seeing the Dimension20 trailer go public, but code is also progressing, so I should talk about that.

Buuuuut I could watch it one more time :D

RIGHT! Now to business.

I started by looking into markers. Oh, actually, one little detail: soon you will be able to give markers names, at which point they are known as bookmarks. I’m gonna use those names below, so I thought I should mention that first.

Currently, markers are pulled when you join a specific board, and we only pull the markers for that board. To support campaign-wide bookmark search, we want to pull all of them when you join the campaign and then keep them up to date. This is similar to what we do for unique creatures, so I started reading that code to see how it worked.

What I found was that the unique creature sync code had some legacy cruft and was pulling far more than it needed to. As I was revisiting this code, it felt like time for a bit of a cleanup, so I got busy doing that.

As I was doing that, it gave me a good opportunity to add the backend data for links, which soon will allow you to associate a URL with creatures and markers. So I got stuck in with that too.

Because I was looking at links, it just felt right to think about the upcoming talespire://goto/ links, which will allow you to add a hyperlink to a web page that will open TaleSpire and take you to a specific marker (switching to the correct campaign and board in the process). After thinking about what the first version should be, I added this into the mix.
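To make the idea concrete, here is a minimal sketch of how a client might pick such a link apart. The real link format has not been described here, so the `campaign/board/marker` path layout and the `parse_goto` helper are purely hypothetical.

```python
from urllib.parse import urlparse


def parse_goto(url: str) -> dict:
    """Split a talespire://goto link into its parts.

    ASSUMPTION: the path is campaign/board/marker. The actual layout
    of these links is not specified in this post.
    """
    parts = urlparse(url)
    if parts.scheme != "talespire" or parts.netloc != "goto":
        raise ValueError("not a talespire://goto link")
    segments = [s for s in parts.path.split("/") if s]
    if len(segments) != 3:
        raise ValueError("expected campaign/board/marker")
    campaign, board, marker = segments
    return {"campaign": campaign, "board": board, "marker": marker}
```

With a layout like this, the receiving side would know which campaign and board to switch to before moving the camera to the marker.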

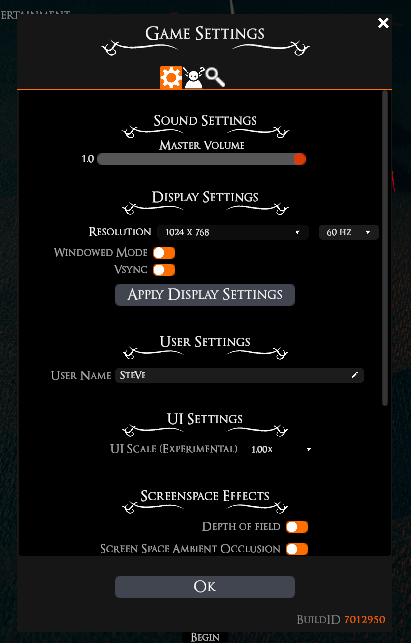

So now things are getting exciting. I’ve got a first iteration of the board-panel made for GMs…

NOTE: I’ll be adding bookmark search soon

We can add names to markers to turn them into bookmarks. And you can get a talespire://goto link from their right-click menu.

I’ve got switching between campaigns working, but I need to do some cleanup on the “login screen to campaign” transition before I can wire everything up.

TLDR:

It would be great to ship all this late next week, but I’m not sure if that’s overly optimistic. We’ll see how the rest of it (and the testing) goes.

Until next time, Peace.

TaleSpire Dev Log 287

Hellooooo!

It’s time for another dev log, and things are moving along well.

Since the last patch, I’ve touched a few things.

Links in and out of boards

We are working on two new and complementary features. The first is a talespire:// URL that takes you to a specific position within a board, and the second allows you to attach a URL to creatures and markers.

Between these, you could do things like include links into boards from campaign management software like WorldAnvil, or link directly from your creature to their D&DBeyond page.

I’m currently working on the database, backend, and TaleSpire patches to make this work.

Download validation

We have hashes of all board and creature data, but we hadn’t been using them until now. We now hash the data of downloads and use that to validate the download result. This should reduce the cases we’ve seen of invalid cache files.
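A rough sketch of the validation step, not the game's actual code: the hash algorithm is not named in this post, so SHA-256 is an assumption, and the dictionary cache and function names are made up for illustration.

```python
import hashlib


def validate_download(data: bytes, expected_hex: str) -> bool:
    """Return True when the downloaded bytes match the recorded hash.

    ASSUMPTION: SHA-256 hex digests. The real algorithm is not stated.
    """
    actual = hashlib.sha256(data).hexdigest()
    return actual == expected_hex.lower()


def store_if_valid(cache: dict, key: str, data: bytes, expected_hex: str) -> bool:
    """Write to the (illustrative, in-memory) cache only if validation passes."""
    if not validate_download(data, expected_hex):
        return False  # the caller can retry instead of caching bad data
    cache[key] = data
    return True
```

The point of checking before writing is that a truncated or corrupted download never reaches the cache in the first place.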

Pixel-perfect camera focus

Moving the camera using double right-click is incredibly common; however, it used to only use physics ray-casts to work out where to move to. This didn’t work well in cases like the portcullis, where the collider definitely should be a single cuboid, but that doesn’t let you pick through the gaps.

I tried using the depth information from the pixel picker to get the position, but the accuracy was too low.

The new approach is a hybrid. We use the pixel picker to identify the thing under the cursor, and then we cast a ray only against the colliders in that object. This gives us the expected result and will be in the next patch.
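The hybrid can be sketched roughly like this. This is a simplified stand-in that assumes axis-aligned box colliders and a picker that returns an object id; `Box`, `ray_box`, and `focus_point` are hypothetical names, and the real version works with Unity's physics instead.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Box:
    lo: Vec3  # minimum corner
    hi: Vec3  # maximum corner


def ray_box(origin: Vec3, direction: Vec3, box: Box) -> Optional[float]:
    """Slab test: distance along the ray to the box, or None on a miss."""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box.lo, box.hi):
        if abs(d) < 1e-12:
            # Ray is parallel to this pair of planes.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return None
    return t_near


def focus_point(picked_id: str, colliders: Dict[str, List[Box]],
                origin: Vec3, direction: Vec3) -> Optional[Vec3]:
    """Ray-cast only against the colliders of the object the picker reported."""
    best = None
    for box in colliders.get(picked_id, []):
        t = ray_box(origin, direction, box)
        if t is not None and (best is None or t < best):
            best = t
    if best is None:
        return None
    return tuple(o + d * best for o, d in zip(origin, direction))
```

In the portcullis case, the picker reports whatever is visible through the gap, so the ray skips the portcullis collider entirely and lands on the object behind it.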

Network Tester

We have some users with issues connecting to the game. To help with these cases, I’m updating a little tool we made to test and log the connection process. I might end up shipping this with the game, or maybe build it into the game and use command-line arguments to switch to the tester on launch.

Performance improvement

I also just have to shout out some work Ree did the other day. To get shadows with reasonable performance in TaleSpire, we misuse BatchRendererGroups. Due to them not being designed to work with Unity’s built-in rendering pipeline[0], they seem to have a bug where the layer information is not respected. In practice, this means that we end up running culling routines for tiles and props even when the camera has specified that it doesn’t need to include those assets (using layers). Because of this, culling code was running whenever the reflection probe was updating, even though only a couple of objects were being rendered.

Ree made a replacement probe that totally avoids this bug. It’s really cool as the bigger the board, the more time this can save.

This will be shipping in the next patch.

And the rest

As always, there are bugs to fix. One that jumps to mind was that, since the last patch, creatures can be unnamed. In those cases, the name displayed should be the name of the kind of creature. However, that wasn’t happening.

That’s all from me. Of course, the rest of the team is plowing ahead with all sorts of exciting things. It’s gonna be great to see those land :)

Have a good one folks

[0] We did not know about this limitation when we started as the documentation was very lacking.

TaleSpire Dev Log 286

Hi everyone!

First, to business!

Server Maintenance

At 3am PT, we will be taking down the servers for maintenance (click here for the time in your timezone).

We are scheduling one hour for the work, but it is likely to be less than that.

This patch will complete the changes we needed to make on the backend for upcoming creature features.

Dev-log

Today has gone well

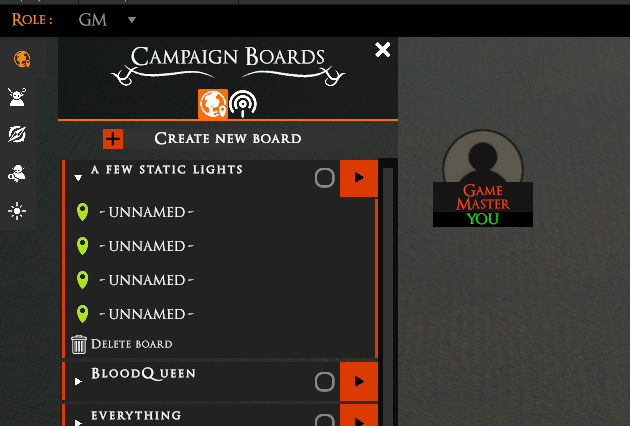

I’ve added a way to change your TaleSpire username…

…and a button to rename campaigns is also complete.

Both of those will be in the next patch to the game.

Ree and I spent a bunch of time testing the current TaleSpire build with the upcoming backend patch. So that should go smoothly. We rediscovered a couple of existing bugs in the process, so we’ll try to get some fixes for those in too.

I’ve also been doing some experiments with erlang so that, hopefully, more of the server updates in the future can be done with zero downtime. We’ll see how that goes :)

I think that is everything. Doing updates properly is slow as hell, but it’s fun to be getting closer.

Have a good one folks.

TaleSpire Dev Log 285

Here is a fun little tweak that is coming in an update soon.

In TaleSpire, we love the tile-based approach to things. We also love what can be achieved with clipping but, due to tiles having similar sizes, it’s easy to cause z-fighting.

Yesterday, Ree suggested I try to add a tiny offset to the positions, so they were less likely to line up. But there is a caveat… we want the offset for a given tile to be the same every time you load. The reason for that is that it would suck if each time you joined the board, things looked very slightly different.

Tiles and props don’t have unique IDs (as that would be way too much data), and we can’t use their index in the arrays (as that changes when boards are modified), but we do have their position.

The position isn’t necessarily unique, but when batching, we also know the UUID that identifies the ‘kind’ of thing being batched. This is ideal, so we mix some bits from the id, some bits from the position, feed it through a bodged noise function, and scale the result waaaay down.

This tiny offset is stable and is enough to improve z-fighting in a bunch of cases.
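Here is a minimal sketch of the same idea. It swaps the bodged noise function for an off-the-shelf hash (BLAKE2b) and mixes all the bits instead of a chosen few; `MAX_OFFSET` and `stable_offset` are made-up names, and the scale is a guess, not the value used in the game.

```python
import hashlib
import struct
import uuid

MAX_OFFSET = 0.0005  # ASSUMPTION: illustrative scale in world units


def stable_offset(kind_id: uuid.UUID, position: tuple) -> tuple:
    """Deterministic tiny (x, y, z) offset from the asset kind and position."""
    # Same kind + same position always produces the same payload.
    payload = kind_id.bytes + struct.pack("<3f", *position)
    digest = hashlib.blake2b(payload, digest_size=6).digest()
    # Map each 16-bit chunk to [-1, 1], then scale it way down.
    return tuple(
        (int.from_bytes(digest[i:i + 2], "little") / 65535.0 * 2.0 - 1.0) * MAX_OFFSET
        for i in (0, 2, 4)
    )
```

Because nothing but the kind id and the position feeds the hash, the offset survives reloads and board edits that reshuffle the arrays.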

The eagle-eyed among you will notice that this doesn’t fix cases where two of the same kind of asset exactly overlap. This is correct and is not something we are looking to improve as it’s not a useful tile configuration anyway.

So that’s that. It’s a blast to occasionally get these little things where a ten minute experiment can give such a cool result.

Aside from this, I’ve also pushed a patch to the database, which allows us to store data for a bunch of upcoming creature features (polymorph and persistent emotes among them).

Hope you are having a good day.

Peace.

TaleSpire Dev Log 284

Hi folks!

The last few days of work have felt really good. I’ve been back in the flow as I have got through the ‘working things out’ stage.

The first thing I did was write the serialization code for the new creature data. With this done, I could hook up the code to upgrade from the old format, refactor a whole bunch of stuff and get creature saving to the backend again[0].

With that taking shape, I switched to the backend.[1]

We have an API description as erlang data, and we use that to generate both the erlang and c# code for communicating with the server. I extended this generator so it can make c# structs as previously it only made classes[2]. I also improved the serializer on the erlang side, so it needed a little less hand-holding.

I then used the generator as I defined the new API for creating and updating unique creatures.

My old code for updating unique creatures was lazy/expedient (you decide :P). I previously pushed all of a creature’s data to the server on any change. This was obviously much more data than was needed in almost all cases, but it worked. This time I made many more entry points, each of which applied smaller changes. This will reduce work for the server and hopefully make things a little faster.

With a draft of the API, I could generate the c# code and then add it to TaleSpire to see if I had missed anything. Once I was satisfied with that, I headed back to erlang to implement the server code.

While working with the database, I hit an error when calling a SQL function where one of the arguments took a user defined type. It turned out that epgsql, the library I use, didn’t support auto-conversion of erlang data to custom SQL types. They did, of course, have a way for you to add your own ‘codec’ to do this, so it was time for me to learn about that.

The manual was helpful but didn’t provide concrete examples for what I was trying to do. I read the codecs included with the library, and that helped me to draft out the basics. What is nice is that you get to write and read the binary data directly, and erlang’s binary pattern matching is wonderful[3], so I didn’t worry about that.

What was not clear from the existing codecs was how to pass data for a user-defined type rather than a built-in one. I first tried just sending the data for the two fields of the type, but I got an error along the lines of “-34234324 columns specified, 2 expected”, which told me I needed to pass the number of columns, and that it knew what I was trying to send. A bit of googling led me to the source code for the part of postgres throwing this error. What was great was that at line 520, we can see that it reads a four-byte int to get the number of columns. We can then see exactly what else we need to provide, the most interesting of which is the type-oid for each field we are sending.
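For anyone who wants to see the layout spelled out, here is a small Python sketch of the binary record format postgres reads: a four-byte column count, then for each field a type-oid, a four-byte length, and the payload. It is not the erlang codec itself. The two oids are the built-in ones for int4 and text, the example field values are made up, and a field that is itself a custom type would need its oid looked up per database.

```python
import struct

INT4_OID = 23  # built-in postgres oid for int4
TEXT_OID = 25  # built-in postgres oid for text


def encode_record(fields) -> bytes:
    """Encode [(type_oid, payload_bytes_or_None), ...] as a binary record."""
    out = [struct.pack("!i", len(fields))]        # four-byte column count
    for type_oid, payload in fields:
        out.append(struct.pack("!I", type_oid))   # type-oid of this field
        if payload is None:
            out.append(struct.pack("!i", -1))     # a length of -1 marks NULL
        else:
            out.append(struct.pack("!i", len(payload)))
            out.append(payload)
    return b"".join(out)


# Hypothetical two-field value: an int4 and a text.
value = encode_record([
    (INT4_OID, struct.pack("!i", 42)),
    (TEXT_OID, "goblin".encode("utf-8")),
])
```

Leaving out the leading count is what produces nonsense like a huge negative column number, since the first four bytes of the first field get read as the count.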

At first, I just muddled through as the errors I got told me which type-oids it was expecting[4]. However, this felt very fragile, so I jumped back into the code for the library to see what I was meant to do[5]. What was lovely was that on the init of your codec, they pass you an object you can use to query the type-db for your connection. This let me cache the type ids on init, which was ace.

As I didn’t see an example of this anywhere else, I’ve put up this gist of what I came up with. Maybe it can help someone, or perhaps someone will correct me and show me how it’s done :)

With that hurdle officially jumped, I got back to the slog of writing and testing the code.

I finally got unique-creatures working again[6], so it was time to start work on how we will upgrade to this new code. This involved the following SQL code:

- Code to apply the changes to the DB

- Code to initialize all the new columns with data from the existing columns (where sensible)

- Make the old unique-creature SQL code forward compatible. This will let you keep playing on an older version without the new and old data going out of sync.

With that DB patch looking promising, it was time to test on my local setup. This means:

- Starting a build of the old DB and server

- Building some stuff and placing some creatures in TaleSpire

- Applying the SQL patch

- Checking that nothing has broken

- Shutting down TaleSpire and the server but leaving the DB and files up.

- Switching TaleSpire and server to the new branches

- Starting up the new TaleSpire and server builds.

- Checking that everything still works

This is now working well, so I’m feeling pretty happy. I still need to finalize some details with Ree when I meet up with him[7], but maybe we can push the DB patch next week. This gives us what we need to progress on things like polymorph, persistent emotes, 8 stats per creature, and more.

Right, I should get some sleep.

Have a good one!

[0] All of this has been done on a local build of our backend, so I wasn’t risking messing stuff up.

[1] Unique creatures are stored in the DB instead of being packed with the non-uniques in a file. So to change what data is stored for creatures means changing both places.

[2] In some cases, it’s nice not to be allocating another object.

[3] Seriously - Low-level languages need to steal it. There is some general erlang’y ugliness to it, but the idea is excellent.

[4] I also verified them using select typname, typelem from pg_type

[5] Erlang, like many venerable languages, grew up before the modern culture around documentation. Common-lisp felt very similar, and you have to get decent at reading other people’s code to learn how to use many things.

[6] with a few hacks in TaleSpire as I haven’t finished the polymorph code yet.

[7] We have to nail down the data we need for persistent emotes.

TaleSpire Dev Log 283

Hi again folks. I’m just dropping in to give an update on my last few days of work.

My current work is focused on changes to support upcoming improvements to creatures. These include polymorph, eight stats per creature, and persistent emotes.

Creatures are a pain to change as unique creatures are stored in the database, whereas non-uniques are stored in a binary format per board [0].

Beyond that, polymorph means we are storing up to ten asset-ids and scales per creature. When creature copy/paste eventually lands, it will make it easier to unnecessarily bloat the non-unique data. Because of that, I’m now aggregating the ids into an array and storing indices into that array [1].
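As a sketch of the aggregation (the real binary format is not shown in this post, so `aggregate_ids` and its shapes are illustrative only), each distinct asset-id goes into a shared table once and every creature keeps small indices into it:

```python
import uuid


def aggregate_ids(creatures):
    """Deduplicate asset-ids across creatures.

    creatures: one list of asset UUIDs per creature (its morphs).
    Returns (table, indexed): the unique ids in first-seen order, and
    for each creature the indices of its morphs in that table.
    """
    table, lookup, indexed = [], {}, []
    for morphs in creatures:
        row = []
        for asset_id in morphs:
            if asset_id not in lookup:
                lookup[asset_id] = len(table)
                table.append(asset_id)
            row.append(lookup[asset_id])
        indexed.append(row)
    return table, indexed
```

For fifty copies of a three-morph creature, the table holds just three 16-byte ids instead of 150 of them (150 × 16 = 2400 bytes in the naive layout). How many bytes the indices add depends on the index width, which this sketch does not pin down.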

Given that we are having to make this new format, it gets very tempting to make other changes too. I spent some time playing around with some ideas here but decided against doing anything more dramatic for now.

Polymorph is one of those features that touches a lot of code. Up until now a creature doesn’t change its visual once it has been spawned. This assumption is pretty baked into the creature code, so changing this is more fiddly than it might seem. Currently, I’m writing the server code for adding/updating the new creature data, but after that, I’ll have to bite the bullet and update that part of the client-side code.

If all goes well, I’ll be visiting Ree next week to work on the game in person. It’s been quite a while, so that’s pretty exciting.

Have a good one!

[0] The uniques being in the database makes it easy to search across the entire campaign. The format for non-uniques is more efficient for bulk updates.

[1] As an example, let’s say you took a creature with three morphs and used copy/paste to make an army of 50 of them. In that case, the naive approach would take 2400 bytes to store the asset-ids. The aggregate approach would use 148 bytes.

TaleSpire Dev Log 282

Heya folks. For the last five days or so, I’ve kept saying to myself that I’d write the next dev-log “as soon as I finish this task,” aaaand here we are :P

So let’s dig into some of the stuff I’ve been poking at.

Feature-Request Site

The #feature-requests channel on our discord has been incredibly useful. Still, a while ago, we gained enough of you lovely folks that we’ve outgrown it as a viable solution for managing feature requests.

Since then, the moderators have done a ton of work exploring options we have for moving that somewhere more appropriate. We are not opening it today, but we’ll soon be moving it and the roadmap over to HelloNext.

We’re going through every request from talespire.com/faq and the last feature roundup and entering them into that system.

We are not ready yet, but we will put the #feature-requests channel in read-only mode when we are. This way, nothing is lost, but new folks won’t be confused about where to post.

We will have more news about this in the coming weeks.

Bookmarks

Back in the code, I’ve been experimenting with bookmarks. Bookmarks are markers that have been given a name. We want you to be able to easily search and jump to any bookmark in the campaign.

I started with making a basic panel to hold the bookmarks so we could start experimenting with behavior. In the pic below, neither the logic nor the graphics are final. But you can see I was messing around with how to visualize bookmarks in the current board.

From this, we decided that the bookmarks should be integrated into the boards panel, with the boards acting like folders. You can see the WIP of this in these images.

This is going well. The next step is to add some new functionality to the server to support things like campaign-wide bookmark search.

Internal systems documentation

Along with this, we’ve also needed to start looking at housekeeping.

We have a growing codebase, and to keep things running smoothly, we need both Ree and I to be able to drop in easily and get working. To help with this, we are making some internal documentation.

While doing this, I’ve found things that I’m reluctant to document because they need cleaning up first, some of which have been on my todo list since the release.

To that end, I finally dove back into the serialization code and got the API to a nice place. This touched a large amount of code, so it took a couple of days to update everything and get it passing all the tests again. We really don’t want to start breaking boards at this point.

From now on, I’m going to try to put aside one day a week where I only work on things that benefit us behind the scenes.

To get good performance, we have had to make alternatives to some of Unity’s systems, and our versions lack the visuals and tools that make working with them enjoyable. Focusing here will make our lives easier and thus make it easier to get fixes and features to you.

NDI

One day last week, I needed a change of scene, so I decided to look back into NDI support. I was hopeful that my knowledge of Unity had increased since last time, and I thought I could get it closer to shippable.

Alas, the plugin now requires .NET Standard 2.0, and for various reasons, TaleSpire uses .NET Framework 4.* (Damn Microsoft’s naming schemes to the pits of hell). Switching TaleSpire to .NET Standard 2.0 broke several things[0], so suddenly, this task stopped being a nice way to unwind.

This will have to wait for another day.

Unity on Linux [WARNING: NOT OFFICIAL SUPPORT - PLEASE READ BELOW]

Multi-platform support is NOT coming yet. But it is our long-term goal, and when it comes, we want all your mods to work there too. Part of this means getting an understanding of how Unity’s AssetBundle format works on these platforms.

Also, I prefer working on Ubuntu, so it was a great time to try out the Linux port of Unity.

The tests went well. I was able to get a rather broken version of TaleSpire running on Ubuntu and prove that we can use AssetBundles that were made for Windows in the Linux build (because of how we handle shaders internally). Next, we’ll have to do a similar test on macOS and see how it behaves.

There is still a lot of work to do to make the build work properly. I’ll mention just two for now.

We use Windows’ ‘named pipes’ feature to handle custom URL schemes. We will need to make something similar. This was a real pain to get working on Windows, so I expect no less elsewhere :P

The pixel-picking system makes use of a custom c++ plugin we made to be able to get results back from the GPU without hangs. We will need to write variants of this for each platform.

Now, I’m sure a few of you have been yelling at the screen to just use wine/proton to support Linux, and we are well aware of these. TaleSpire seems to work great out of the box with Proton, except for the custom URL scheme. This, too, can be fixed, but we’d want to fix that wrinkle in the experience before we’d consider supporting it.

Also, we simply aren’t ready to take on the extra work that supporting multiple platforms requires. We will get there, but it’s something for another day. For now, I’m excited to see the path ahead.

Bugs

As usual, there are also bugs to be looked at. We still have cases of board corruption being reported, and so these take top priority. Not all of them turn out to be corruption, but they still are worth squashing.

I think that’s most of it

We naturally have plenty of other things brewing. This post was just my stuff, and the rest of the team has been just as busy. We also have things cooking that we aren’t ready to talk publicly about. It’s gonna be fun :)

Until next time folks,

Have a good one!

[0] Including the custom URL scheme and some HTTP connection stuff

TaleSpire Dev Log 281

Posted: 2021-06-23 10:09:09. Tags: Bouncyrock, TaleSpire.

Hi folks.

At the time of writing, I’m about to push an update. In the changelog, there is a line that looks like this:

This patch also contains a change that will hopefully improve performance for machines with high numbers of cores.

I wanted to talk about this change as I think it will be interesting (and maybe counterintuitive) to some non-programmers out there.

Here is the change: I’ve told TaleSpire to use fewer cores.

![dun Dun DUUUUUUUUn](/assets/videos/gopher.gif)

Let’s set things up so I can explain why.

So TaleSpire uses a job-system. A job-system (in this case) is something that you give a chunk of code to run, and it runs it on one of your machine’s cores[0].

This is good as we can queue up lots of work and let the job-system work out where and when to run that code. This often gives us nice performance improvements as we are using all the cores on our machine.

As a programmer, your job then involves writing code in such a way that it can be run concurrently. For example, let’s say you work in catering and you need to make 20 of a certain kind of sandwich, and you have 5 workers. In this case, the ‘job’ is making one sandwich, and with a bit of orchestration, we can have all 5 workers making sandwiches concurrently.
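The sandwich example maps onto code fairly directly. This is only an analogy in Python, using a thread pool as a stand-in for the job-system; `make_sandwich` and `cater` are made-up names, and TaleSpire itself uses Unity's job-system, not this.

```python
from concurrent.futures import ThreadPoolExecutor


def make_sandwich(order_number: int) -> str:
    """One 'job': make a single sandwich."""
    return f"sandwich #{order_number}"


def cater(orders: int, workers: int) -> list:
    """Queue one job per sandwich and let the pool decide who makes which."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() hands jobs to idle workers and returns results in order.
        return list(pool.map(make_sandwich, range(1, orders + 1)))


sandwiches = cater(orders=20, workers=5)
```

The code that wants sandwiches never says which worker makes which one. It just describes the jobs and leaves the scheduling to the pool.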

Recently I upgraded my PC to keep up with how TaleSpire is growing[1]. I was very lucky to get a Threadripper CPU with 24 cores[2] which you would think would make TaleSpire much faster, but in some places, it didn’t. In this next image, you can see that running the physics got slower.

![overhead](/assets/images/overhead.png)

Weird right?! The version running on more cores took over three times longer.

Why? The overhead of orchestration.

Let’s imagine our sandwich situation again, but let’s say we broke down the steps of making a sandwich even further. So one job is to fetch the bread, another job is to butter the bread, another job is to bring the filling, etc. Now, imagine we still need to make 20 sandwiches, but we have 100 workers. As you can imagine, things get messy.

Not only does instructing each worker take time, but the workers also share access to limited resources (like access to the one fridge). Furthermore, the workers need to sync up to actually assemble the sandwich, and this coordination also takes time.

This is a long way of saying that, at some point, the overheads of managing workers can outweigh the benefits of having more. And so we come right back round to the physics situation.
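A toy model shows the shape of the problem. The numbers below are invented for illustration and are not measurements from TaleSpire. The model assumes the work divides perfectly between workers while every extra worker adds a fixed coordination cost.

```python
def frame_time(work_ms: float, workers: int, overhead_ms_per_worker: float) -> float:
    """Toy model: divisible work plus a coordination cost per worker."""
    return work_ms / workers + overhead_ms_per_worker * workers


def best_worker_count(work_ms: float, max_workers: int,
                      overhead_ms_per_worker: float) -> int:
    """Worker count with the lowest modelled time, from 1 to max_workers."""
    return min(
        range(1, max_workers + 1),
        key=lambda n: frame_time(work_ms, n, overhead_ms_per_worker),
    )


# Made-up numbers: 4 ms of work, 0.25 ms of overhead per worker.
few = frame_time(4.0, 4, 0.25)    # 1.0 ms of work each + 1.0 ms overhead
many = frame_time(4.0, 48, 0.25)  # tiny slices of work, 12 ms of overhead
```

In this model, four workers finish in 2 ms while 48 workers take about 12 ms, so past a certain point adding workers makes things slower. Real overhead is messier than a fixed cost per worker, but the curve has the same shape.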

I like that we can spread the physics work over multiple cores, but I need to limit how many so we don’t get lost in the overhead. As I don’t yet know the right way to restrict the worker count just for the physics, I’ve temporarily lowered the max worker count.

This will be increased again when I understand how to control this stuff for each system I care about.

That’s all I’ve got for this post. Thanks for stopping by, and I hope you have a great day.

[0] This is an approximate description. We could say it runs it on one of the worker threads. But given that they are locked with affinity, and we don’t want to have to explain software threads, this will do for now.

[1] I run dev builds of TaleSpire, which gives more information to me, but the cost is that these builds run much slower.

[2] With hyperthreading, that’s 48 logical processors

TaleSpire Dev Log 280

Heya folks,

We are currently working on the last things before we’ll be ready to ship the ‘props on bases’ feature. This is a super exciting one, and I expect it to be used and abused in very interesting ways.

Last night, however, I took a little detour to look at a bug that has been around since the chimera build: lights that turn off when you get close to them.

Our lights attempt to be the same as the standard Unity lights, except without using Unity’s GameObjects for performance reasons. This means that, like Unity’s lights, ours use a different material depending on whether the camera is inside or outside the light’s area of influence[0].

The lights seemed to be turning off because we were not always updating the material at the right time. Let’s get into the weeds a little.

To avoid using GameObjects, we use CommandBuffers to render the light meshes at the right point during the frame. For dynamic lights[1] we have to rebuild the queue each frame[2] as the light’s position or properties are being changed, but for static lights, it’s different. Static lights, by definition, aren’t changing, so we have a separate CommandBuffer for them which we only update when the board is modified.

An important detail here is that you can’t just update an element of the CommandBuffer. It has to be cleared and rebuilt.

This approach has a very dumb mistake in it, though. When the camera moves, the material the light is using might need to be changed. I’m not sure why I didn’t notice that while writing the system, though I expect I was rushing for Early Access and forgot. Regardless, this needed fixing.

Internally the TaleSpire board is split into zones, and we apply operations across these zones in parallel. Each zone communicates with the light-manager to enqueue its lights into the CommandBuffers of lights to be rendered[3]. What I tried to do was have one CommandBuffer for static-lights per zone and then only update them dependent on the camera position[4].

This worked, but I noticed something annoying when I looked at the profiler. The update time for the static lights was fine, but the rendering took a significant hit.

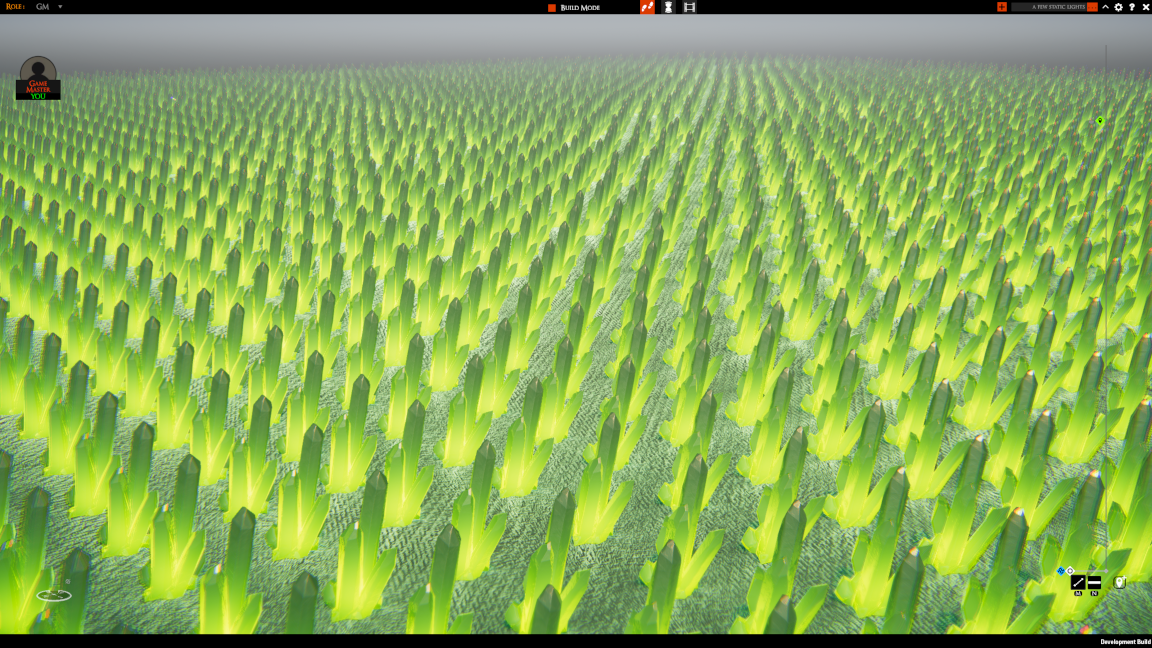

This is my test scene. It’s 4096 static lights. I’ve not added anything else so as not to confuse things.

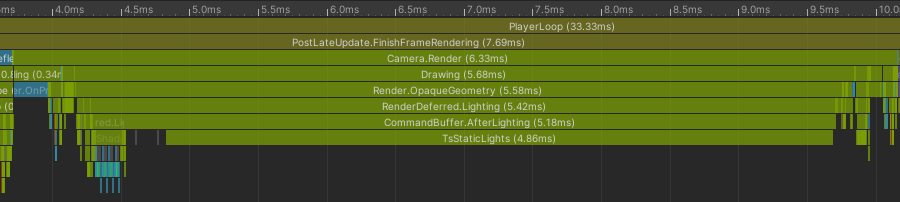

Here is what we have for light rendering before the fix (so with one CommandBuffer for static lights):

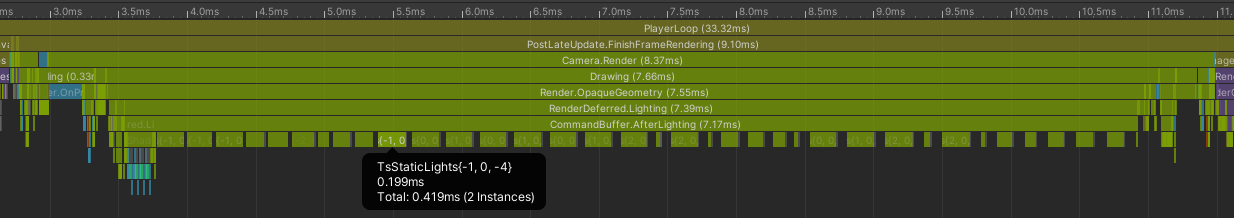

And here is the same scene, same camera angle, etc, but with one CommandBuffer per zone:

Doesn’t that suck? Even though the amount of work to do is the same, the overhead from many CommandBuffers made things take significantly longer[5].

I still hope to ship the fix for the lighting bug this week, but I’m going to have to look at it after we ship the ‘props on bases’ feature, as it’s clear I’ve got more experiments to do.

There is still plenty to try. What I’ll probably start with is switching to three CommandBuffers. One for dynamic lights, one for static lights from zones that have had to update recently, and a final one for static lights from zones that haven’t been updated in a while. This way, we minimize the overhead from CommandBuffers while also minimizing the number of lights being rewritten to the CommandBuffer each frame.
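To make the three-buffer idea a bit more concrete, here is a rough sketch of the bookkeeping: deciding each frame which buffers need to be cleared and rebuilt. It is speculative, since this approach had not been tried at the time of writing. `RECENT_FRAMES`, `plan_rebuild`, and the zone names are all hypothetical, and no actual rendering happens here.

```python
from typing import Dict, FrozenSet, Tuple

RECENT_FRAMES = 30  # ASSUMPTION: how long a zone counts as "recently updated"


def plan_rebuild(last_changed: Dict[str, int], frame: int,
                 prev_recent: FrozenSet[str]) -> Tuple[Dict[str, bool], FrozenSet[str]]:
    """Decide which of the three light buffers must be rebuilt this frame.

    last_changed maps a zone id to the frame its static lights last changed.
    prev_recent is the set of 'recent' zones from the previous call.
    """
    recent = frozenset(
        zone for zone, changed in last_changed.items()
        if frame - changed < RECENT_FRAMES
    )
    changed_now = any(changed == frame for changed in last_changed.values())
    membership_changed = recent != prev_recent
    plan = {
        "dynamic": True,                                      # rebuilt every frame
        "static_recent": changed_now or membership_changed,   # small and cheap
        "static_settled": membership_changed,                 # big, rarely rebuilt
    }
    return plan, recent
```

The big buffer only gets rewritten when a zone moves between the two static groups, so most frames would touch just the dynamic lights and the handful of recently updated zones.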

Alright, that’s all for now. Can’t wait to be back with more.

This is gonna be a fun week.

Seeya!

[0] More or less. This is close enough for this discussion.

[1] Dynamic lights are lights being moved or animated by scripts on the tile/prop

[2] Yup, there are places we can optimize here, but this log skims over that detail as we are focused on static lights

[3] This isn’t the exact architecture, so don’t sweat these details. We just want to talk about the issues.

[4] Of course, this is really about the camera position in relation to the area influenced by any of the lights in the zone.

[5] I’d recommend not caring too much about the wall-clock time in this case. Of course, 2 ms matters, but this is also running on a fast CPU. What really stings to me is that it was significantly faster before.

TaleSpire Dev Log 279

While we work on the ‘props as creatures’ feature we talked about the other day, I’ve switched tasks to look at some bugs hitting folks in the community. To that end, the next patch will have the following:

- A fix for a memory leak in copy/paste

- A fix for cases where exceptions should have caused TaleSpire to leave the board but didn’t

- Fixes in the board loading code to make it more robust

- A fix to the campaign upgrader, which was having errors

The campaign upgrade issue was a regression caused by me when I changed how some internal data was structured. I should have double-checked the upgrader before pushing.

I also am tracking another regression that is causing issues with picking tiles and props when in certain positions. I’ve got a solid idea of where these issues lie, so I’m hopeful that I can get this fixed today.

Once that is done, I’ll push out a patch.

Hope you are all doing well,

Peace.