From the Burrow

TaleSpire Dev Log 329

Yesterday the code gods conspired against us, so we didn’t get to start on the latest iteration of the HeroForge mini specification.

Instead, I focused on packages.

We use a bunch of experimental packages, and naturally, these have bugs from time to time. Yesterday we had such a case with Unity.Entities. The package had already been fixed in the latest update, but that fix was incompatible with the latest version of another package with its own bug (and so on it goes).

This led to me shuffling around package versions until I got a setup that will work for now.

The amusing part is that we will be removing the Entities package soon anyway. We currently have it because:

- Unity.Physics depends on it

- We use a couple of serialization tools that happen to live in that package.

Neither of these reasons will be valid for long, however. We are forking Unity.Physics to optimize it for TaleSpire’s specific use case. As we don’t use Unity’s ECS, we don’t need the ECS integration in the physics engine.[0]

As for serialization, I have already started working on internal tooling for defining data formats and converters between them. This will help in a few ways:

- It will reduce the amount of hard-to-maintain serialization code.

- It will make writing & upgrading binary formats more approachable to more of the team.

- It should make it easier to iterate on the formats used in mods without worrying about breaking what is already out there.

I only need the basics of this to allow us to drop Unity.Entities. That, in turn, will let us pull the latest version of the conflicting packages and put us in a great place to improve the performance of physics.

And that, for today, is that!

See you around.

Disclaimer: This DevLog is from the perspective of one developer. So it doesn’t reflect everything going on with the team

[0] In fact, I did this today! It’s sitting on a branch, ready to merge

TaleSpire Dev Log 328

Heya!

Mac support has been going surprisingly well. Let’s natter about it!

Progress

The first order of business was pixel-picking. This requires custom native code as we need to use features of the graphics API not exposed by Unity. I had written the DirectX version over a year ago, but this time I needed something for Metal. I haven’t written any Metal code before, but I found this article and this code sample and felt pretty confident I could bodge something useful.

This turned out to be correct. I am very lucky that, at my previous job, I suffered through so much ObjectiveC development as it made the process much easier. After a bit of experimenting, the solution turned out to match the form of the DirectX one very closely, so the API for our plugin could remain the same.

It was fantastic to see picking come to life!

As you’ll have just seen, the cursors are HUGE! It turns out that Unity will try to match the cursor size to the source image as closely as possible, so I need to go adjust the import settings.

Next up, I set myself to fixing a mistake in the build scripts[0], and then I needed to look at some more platform-specific code.

In Unity, if the game is in fullscreen mode, the reported resolution of the display is that of the fullscreen game. This is handy in some cases, but not for us. We want the full pixel resolution of the monitor that the game is currently on (according to the OS). This is easy to get from the Cocoa API, and so this was another job for some native code.

With the pixel-picker under my belt, it was extremely simple to get this written.

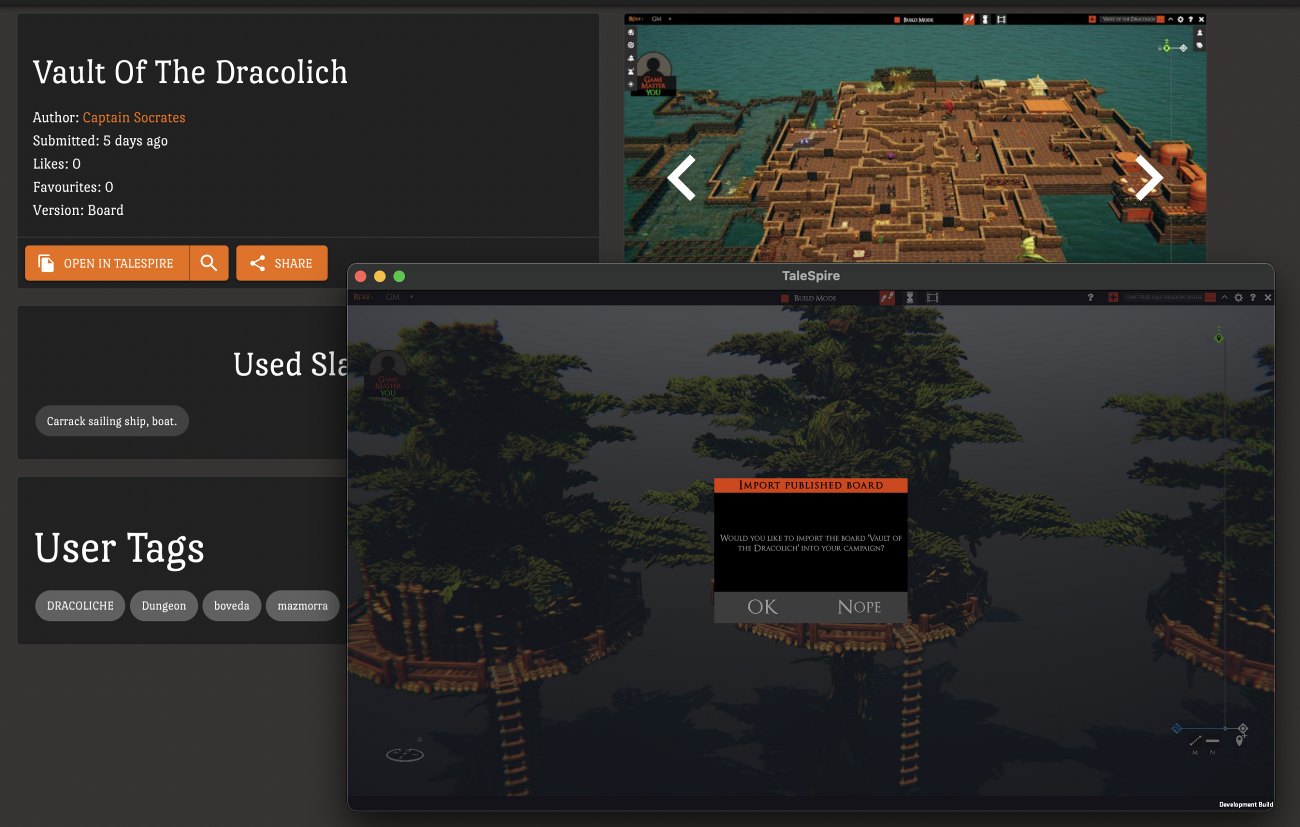

Another thing that turned out to be super easy was supporting the talespire:// URLs. Unity has a simple setting, allowing you to set up custom URL schemes. It was so easy that there is nothing else to say about it. So here’s a picture instead:

Issues

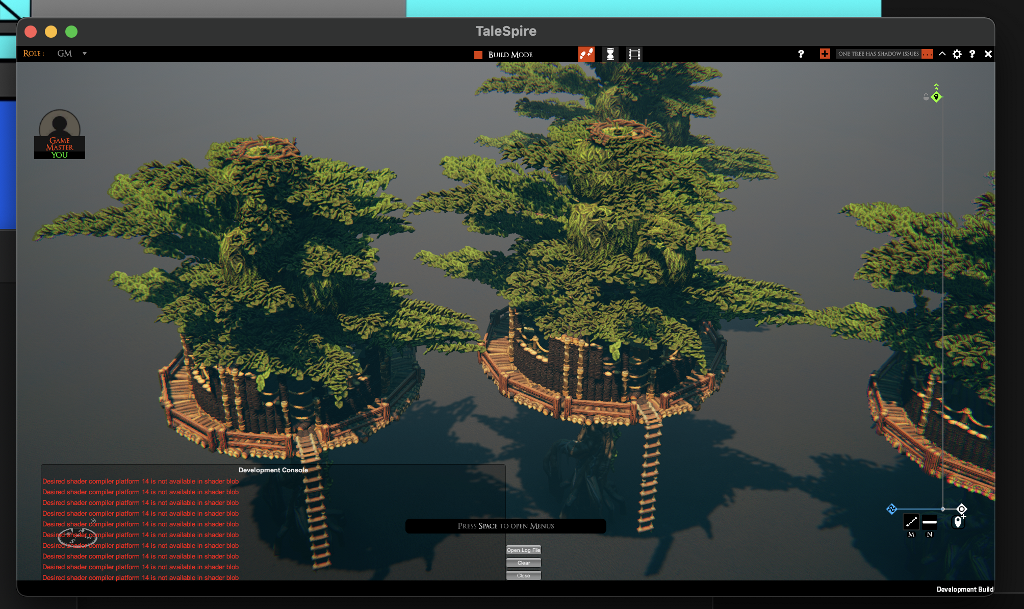

There are plenty! The big one is that lots of shaders are broken. As an example, here is water:

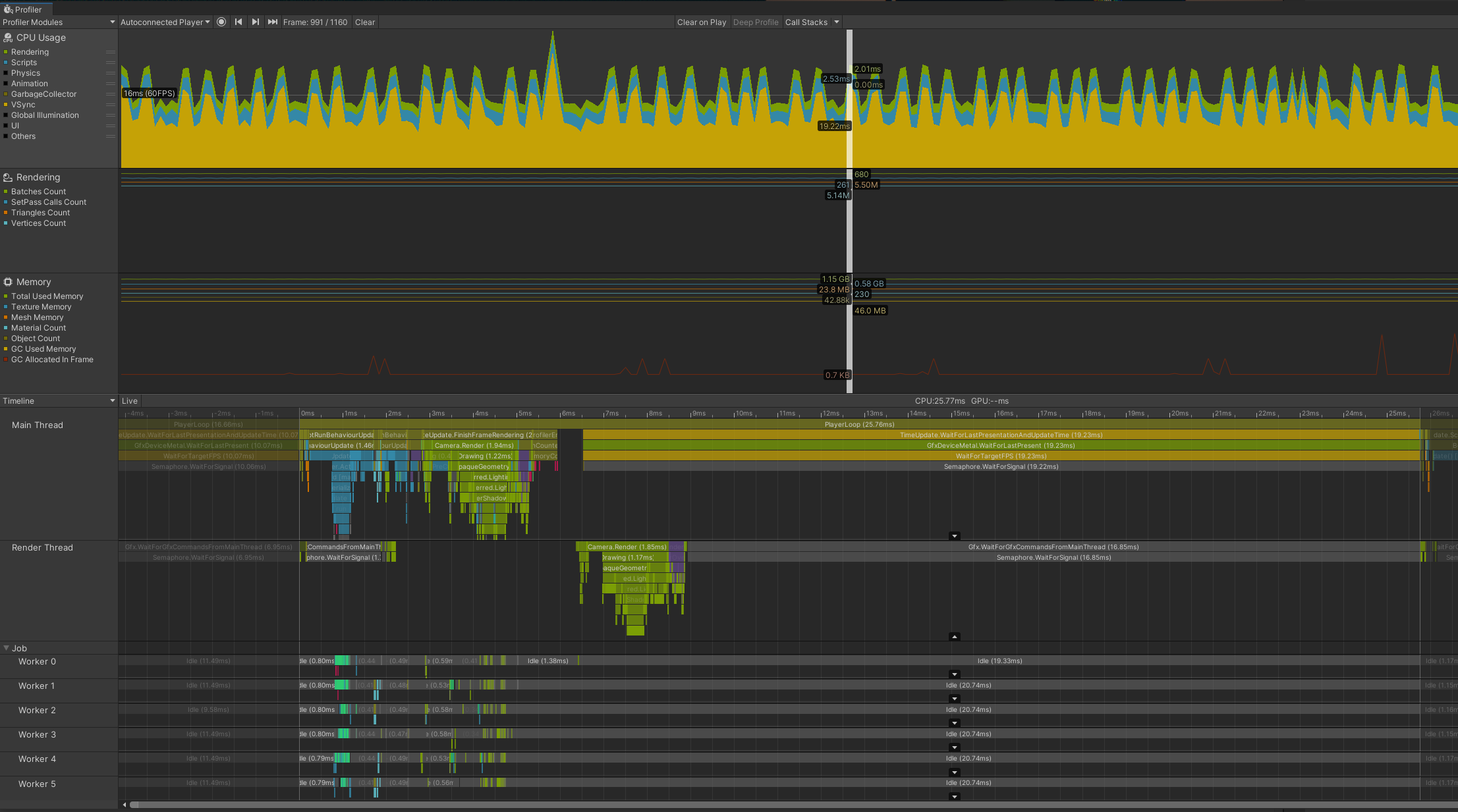

The fps is also bizarrely low. Below I have a screenshot of the profiler. You can see that the work on the main thread is done in under 6ms, and the render thread is done by ~9ms. VSync is enabled, so I expect it to wait until 16ms before starting the next frame; however, it is waiting until ~25ms. Clearly, something is wrong or is missing from the graph.

This could be anything from a two-minute fix to a two-month one. We’ll have to see!

What’s next?

Clearly, there is still plenty to do before Mac support can ship, but tomorrow I need to switch back to HeroForge and modding. I am also no shader expert, so I’ll definitely have to kick this to Ree so he can work out what is breaking there.

I’m delighted with the 10Gb network gear we purchased to link my dev PC and the Mac. It has made cross-platform development so much nicer than what we have had in the past and has helped Mac support progress smoothly.

So that’s it. I left out a bunch of smaller things for the sake of time, but I hope you’re as excited as I am for where this is going.

Have a good one folks!


[0] If BuildPlayerOptions.locationPathName does not end with .app, the dll containing the compiled burst jobs is not being copied to the plugins folder.

TaleSpire Dev Log 327

‘Allo folks.

Yesterday seemed to want to stop me from working on Mac support, and it won. Today I turned the tables.

Before we get into that, let’s skim through some tasks from earlier in the day.

The first order of business was handling the renewal of some server certificates. This led neatly into one of the problems from yesterday, certificate authentication callbacks failing on the latest Unity version.

It turned out that, while the HttpClientHandler.ServerCertificateCustomValidationCallback callback is now broken, the global ServicePointManager.ServerCertificateValidationCallback one still functions.

I hate this global event as it is fragile to modification by other code[0] and is called for all requests when we only need it for specific ones. We only need to check requests to the backend against our pinned certificate, so I have to filter those cases in the callback. However, at least we can continue work.
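The filtering described above can be sketched roughly as follows. This is a Python illustration rather than TaleSpire's C# code, and the host name, the pinned hash, and the `validate_certificate` function are all hypothetical stand-ins. The idea is just that a hook which sees every request only applies the pin to the backend and otherwise defers to normal validation.

```python
import hashlib

# Hypothetical values for illustration; not TaleSpire's real host or pin.
BACKEND_HOST = "backend.example.com"
PINNED_SHA256 = hashlib.sha256(b"example backend certificate").hexdigest()


def validate_certificate(host: str, cert_der: bytes, default_ok: bool) -> bool:
    """Stand-in for a global validation hook that sees every request.

    host       -- host name the request is going to
    cert_der   -- the server certificate as raw bytes
    default_ok -- result of the platform's normal chain validation
    """
    if host.lower() != BACKEND_HOST:
        # Not our backend: no pinning, just defer to the normal result.
        return default_ok
    # Our backend: accept only the certificate whose hash matches the pin.
    return hashlib.sha256(cert_der).hexdigest() == PINNED_SHA256
```

Because the hook is global, the important part is the early return: requests to anything other than the backend should behave exactly as if the hook were not there.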

I then updated our build scripts, so we could perform mac builds. As I previously had issues building directly to the network share, I now build to a temp directory and copy the files after completion.
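As a rough sketch of the build-then-copy step (in Python, not our actual build scripts), the shape is something like this. The `build` callback and the paths are hypothetical; the point is only that the network share is not touched until the build has finished locally.

```python
import shutil
import tempfile
from pathlib import Path


def build_then_copy(build, destination: Path) -> Path:
    """Run build(output_dir) in a local temp directory, then copy the result."""
    with tempfile.TemporaryDirectory() as tmp:
        staging = Path(tmp) / "build"
        staging.mkdir()
        build(staging)  # hypothetical callback that writes the build output
        # Only touch the (possibly slow or flaky) destination after the build.
        shutil.copytree(staging, destination, dirs_exist_ok=True)
    return destination
```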

Next was updating platform-specific code:

- Reliably obtaining the monitor size on Windows required some calls to the win32 API. For Mac, I’m starting by assuming the Unity commands will suffice and will write a native alternative if needed

- I updated the dylib for miniz to one that supports M1

- Updated a couple of shader #ifdefs to include them for Metal

- Disabling the pixel-picker on mac

- A whole slew of code house-keeping stuff

That was enough to get TaleSpire to start, log in, and load a board. This felt huge as the last time I tried this, I couldn’t make a standalone build, Steam’s API didn’t support M1 Macs, and I had to run a hacked-up server locally just to get something running.

With all of this, I am finally ready to start coding the thing I tried to start midday yesterday :D

Well… almost ready. I just need to download XCode, and I sure do hate me some XCode. That’s just the price of Mac support, I guess.

Anyhoo, that’s enough for today.

Hope you have a great weekend. Ciao.


[0] because, as the docs say, “Despite being a multicast delegate, only the value returned from the last-executed event handler is considered authoritative”

TaleSpire Dev Log 326

Hey everyone.

Today has not wanted me to make progress!

I have a few working days before the new HeroForge asset changes are available, so I thought I’d look at other upcoming features. So I:

- reviewed the roadmap

- reread the server chat code

- got an overview of rulers

- came up with more emote ideas

- made some more notes for some potential gm tool for previewing what your players can see

But these are just notes. I wanted to dig into something. I did some reading around Metal (Apple’s graphics API), specifically how to read back data from textures. It looked fun, so I thought I’d dig into Mac support.

So I:

- updated the Unity version

- worked around a new bug

- pulled the latest version of Steamworks.NET

…and then promptly ran into a bug where HTTPS requests weren’t working. It’s looking like HttpClientHandler.ServerCertificateCustomValidationCallback is not working, and so certificate pinning is broken.

This is maddening, and I’ve spent a good few hours poking around code to see if another code path would work. So far, it seems not.

This means I should probably roll back to earlier Unity builds to see if they work. However, while this was going wrong, I found some server-side work requiring prompt attention. So it looks like that’s where my time is going next.

Yeah, so it was a bit of an annoying day. I was pumped to make stuff, and I ran into walls instead.

No worries, though. That’s just how it goes some days.

Have a good one.


TaleSpire Dev Log 325

Hey folks,

How the hell has it been a week since the last one of these?! Let’s remedy that.

AssetDb

The AssetDb is a chunk of code that handles the loading of assets and indexes. It keeps track of metadata such as tags and groups and provides hooks for other systems.

Up until now, we haven’t needed to unload packages at runtime. With modding, this all changes, so I’ve been adding that functionality.

There were a few false starts and dead-ends as I tried to find a balance between simplicity and speed. I’m still trying to learn the skill of choosing the approach that is just dumb enough to work while still being amenable to development over time. Getting stuff working faster and iterating is so valuable.

Asset code obviously touches many things in TaleSpire, so you find yourself in bits of the codebase that are quite old (like the inventory bar at the bottom). Once again, we don’t want to spend too much effort on these things as we already have proper improvements planned for the future. So I find myself holding off any smart fix that would be too invasive.

All that rambling aside, it’s going well. Unloading is working great. Next up will be testing and fixing systems that don’t know how to react to assets becoming unavailable dynamically.

Fix tesla-coil & blue-fire

We have had an intermittent bug with tesla-coils occasionally not showing their lightning for a long time now. It’s always been tricky to track down as I didn’t have a board that could reliably reproduce the issue. Every time I would test, the damn thing would work, so the ticket would keep falling further back in the bug list.

However, last week the aberration pack shipped, and we had reports of the blue fire asset not working for some too. Ree quickly managed to track down one issue[0], but the fire behaved suspiciously like the tesla-coils even with that patched.

Unlike previous efforts, this time, we got lucky. One of our moderators had a board where they could semi-reliably get the tesla-coil bug. She published it, passed me the link, and we were off!

I was able to find out the following:

- If I imported the board and then placed a coil, it would fail

- If I reloaded the board, the previously placed coil and all new ones would work

This is precisely the kind of place I love to start, and I quickly tried to delete parts of the board until I could get the smallest number of tiles that triggered the issue. This can yield a minimal test case, but this time it was very stubborn. If I removed any significant chunk of the board, the tesla coils just started working again.

That in itself is data, of course, so I changed tactic and started stepping through the batching code in the debugger.

I should take a tiny diversion to explain that the commonality between the coils and fire is that they are both ‘multi-mesh’ animations. To get the stop-motion-like effect of the flames, the spaghet script that runs the fire is swapping out the active mesh over time[1]. I quickly confirmed that the multiple meshes existed but that the batching code didn’t think any of them was current due to an incorrect index.

It’s a weird one to explain, but in short, the index should have been an int, but instead, it was a byte.

The index was into our global collection of meshes[2], and this meant that if the index was <256, the mesh would work. If not, it wouldn’t.

It worked when loading a board that already has the tesla-coil as (purely by chance) it was one of the first 256 meshes added to the global collection.

It didn’t work when importing the moderator’s board and THEN adding the tesla-coil because that board had a lot of stuff loaded already, and so the index of the tesla-coil’s mesh ended up being >255.

This also explains why I couldn’t reduce the board easily. It wasn’t the number of assets in the board that mattered, but the variety. When I tried to trim the board to make it smaller, I was removing just enough kinds of assets that the tesla mesh would end up with a valid index again.

Looking at our git history, I think I was going to use a local mesh index at first (and so chose byte), but partway through, I switched to indexing the global pool, evidently without updating the type from byte to int.
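To make the byte-versus-int problem concrete, here is a tiny Python sketch. It is not the batching code; `global_meshes` and `lookup` are made up for illustration, and it assumes the index is simply truncated to 8 bits, which is what an unchecked cast to byte does in C#.

```python
# Hypothetical global pool with more than 256 meshes in it.
global_meshes = [f"mesh_{i}" for i in range(300)]


def lookup(index: int, as_byte: bool) -> str:
    """Fetch a mesh by index, optionally squeezing the index into 8 bits first."""
    stored = index & 0xFF if as_byte else index  # a byte keeps only the low 8 bits
    return global_meshes[stored]


print(lookup(200, as_byte=True))   # mesh_200: small indices survive the trip
print(lookup(260, as_byte=True))   # mesh_4: the index wrapped, so the wrong mesh
print(lookup(260, as_byte=False))  # mesh_260: an int keeps the full value
```

Anything under 256 round-trips fine, which is exactly why the bug only showed up on boards with enough variety to push the mesh past that point.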

So! Now to ship it. I’ve checked out the TaleSpire code from before all the HeroForge work, and I have cherrypicked the fix commits over. I hope to ship these fixes tomorrow. It’s tempting to rush it out tonight, but I’d rather have an extra pair of eyes on it first.

Build-Id

Until now, we tried to include the Steam build-id in the UI to help with bug reports. However, the steam command to get the build-id is super weird:

int GetAppBuildId();

Gets the buildid of this app, may change at any time based on backend updates to the game.

Returns: int The current Build Id of this App. Defaults to 0 if you’re not running a build downloaded from Steam.

Did you spot it? It returns the latest build-id on Steam, not the build-id of what you have installed!

Why? I don’t know. But I finally got annoyed enough to fix the problem.

I’ve modified our build scripts to use git to get the short hash of the current TaleSpire commit and include it as a const string in the C# code.

It’s dead simple and makes it trivial to ensure we are testing the build the players were playing.

For you lovely folks out there who are dll hacking and making unofficial mods, we append an extra _M to the end of the build-id when the AppStateManager.UsingCodeInjection flag is set.

This flag has been hugely helpful for sorting bug reports on the server side, so thank you so much for setting it. By including it in the build-id, we can speed up triaging some of the bug reports we’ve seen so far that have been mod-related.
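For the curious, the build-id scheme boils down to something like the following Python sketch. It is not our build script: the function names and the `BuildId` constant name are hypothetical. The only real pieces are the `git rev-parse --short HEAD` command and the `_M` suffix described above.

```python
import subprocess


def git_short_hash(repo_dir: str = ".") -> str:
    """Ask git for the abbreviated hash of the current commit."""
    result = subprocess.run(
        ["git", "rev-parse", "--short", "HEAD"],
        cwd=repo_dir, check=True, capture_output=True, text=True,
    )
    return result.stdout.strip()


def render_csharp_const(short_hash: str) -> str:
    """Produce a C# source line embedding the hash (the name is hypothetical)."""
    return f'public const string BuildId = "{short_hash}";'


def make_build_id(short_hash: str, using_code_injection: bool) -> str:
    """Append _M when the code-injection flag is set."""
    return short_hash + ("_M" if using_code_injection else "")
```

The hash is baked in at build time, so unlike the Steam call it always describes the build that is actually running.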

In house tooling

Today I picked up a task that has been on my todo list for ages: improving in-editor tools for working with tiles and props.

Tiles and props in TaleSpire are not made with Unity’s GameObjects. We made enormous performance improvements by creating a custom approach [3], but in doing so, we have lost the fantastic tooling we got trivially with GameObjects.

To improve this situation, I wrote a custom inspector that uses our pixel-picking code to display a bunch of our internal asset data. It’s ugly, but it’s already proving useful.

As I was apparently in the mood to work on stuff that had been waiting forever, I decided to optimize the physics debug drawing code. Until now, we used Unity’s Handles API, and it is slow. Slow to the point that I can’t visualize physics info for large boards.

Luckily the Debug.DrawLine method was updated to work from Burst compiled jobs, so I decided to rewrite our debug drawing to use Debug.DrawLine instead of Handles.

This only took an hour or two, and the result was a HUGE boost in performance. There is a cap on the number of lines that can be drawn per frame, so my next step will be to replace Debug.DrawLine with my own approach, but for now, this is great.

With that done, I tweaked the new inspector to enable visualizing the physics data for the selected asset. This used to be pretty tedious to debug, and now it’s two clicks :)

HeroForge

The AssetDb work was required for HeroForge as well as modding, so we’ve covered the bulk of the news. However, the HeroForge team has really gone to town packing extra data into their models so that we can hopefully support ‘Dark Magic’ as well as a bunch of other things.

As just one example, they now include metadata about their emissive textures, which helps us process them much faster in some cases. This is all stuff they didn’t have to do, so we’re super grateful!

And that’s the lot

It’s been a busy time, and it is not slowing down. The next asset pack is well underway, the next patch is ready for testing, and I’ll start integrating all the new stuff from HeroForge tomorrow.

Until next time. Stay safe.


[0] A missing texture causing odd behavior on some GPUs

[1] We also use standard vertex animations.

[2] This is an approximation, but it will do.

[3] For a reminder of how slow it was, even with lots of caching and work, check out the video in this dev log: https://bouncyrock.com/news/articles/talespire-dev-log-202

TaleSpire Dev Log 324

Hi folks!

The HeroForge work is rapidly solidifying. We have had some testers playing with it and finding plenty of bugs, but nothing looks too scary. We have been able to try out some more normal creations…

…and some that are a little more out there.

In recent days I’ve been working on:

- Support for multiple creatures on a single base

- A mistake in mipmap generation

- A Linux (via Proton) specific bug due to how I was calling some WinAPI functions for random data

- Issues with miniature placement

- A line-of-sight bug which arose because of the changes needed to the creature code

- Better use of head/hand/etc. positions from the model, and sensible fallback values

Handling scale

The eagle-eyed among you may have noticed some large creatures with surprisingly small bases. Until now, the base size has matched the creature size (0.5x0.5 -> 4x4). After some discussions with HeroForge, we decided that the scale of the base relative to the creature should match the HeroForge editor as closely as possible.

One problem that immediately arose was that you can’t just use the base size as the default scale. Otherwise, this chap

who is a 1x1 creature can be scaled up to 4x4, which gives you this

Which is unplayable[0].

This meant decoupling base size and creature size. We use the total size of the mesh to pick a sensible default size and let the base be rescaled to keep the proportions required. This keeps things looking good while also being playable[1]

Today

So far today, I’ve hooked up the emissive map, which is where HeroForge bakes its lights[2].

Note: As you can see there is still work to do on the strength of the emissive


It seems that the emissive map is included even if it is empty, so I am updating the asset conversion process to check for this. We can then exclude blank maps, saving some GPU memory in the process.
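The check itself can be very small. Here is a Python sketch, assuming that “empty” means every byte of the uncompressed texture data is zero; `is_blank_map` is a hypothetical name, and a compressed texture would need decoding first.

```python
def is_blank_map(pixels: bytes) -> bool:
    """True when every byte of the raw texture data is zero."""
    return not any(pixels)
```

A map that passes this check can be skipped during conversion, so it never reaches the GPU.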

I am then back on bugs and loose ends. I have a lot of ‘todos’ in the code where I need to handle failure cases and decide how to relay them to the user. This will be fine, but it takes a little time. All of these cases will be relevant for the creature modding too.

I won’t finish all the fixes today naturally, but it’s all looking very positive. Getting a mini from HeroForge into TaleSpire takes about ten seconds, depending on download time (mine is pretty slow).

Until next time!


[0] I’m trying not to be hyperbolic here. I tried playing with it, and it was utterly useless at those scales.

[1] Even today, scale can be tricky in TaleSpire. I definitely don’t want to claim that our approach with HeroForge is ideal, but this feels in line with the current experience of the built-in assets.

[2] Except for the “dark magic” lights. We need to work out how/if we can support those.

TaleSpire Dev Log 323


Hey everyone,

Last week was quite a doozy. I didn’t mention it at the time, but I was in the process of trying (and failing) to buy a house, so lots of extra distractions.

Photon issues

A few of you also got bitten by a Photon issue earlier in the week so let’s talk about that first.

We’ll kick off with a bit of background. Photon is a service we use for handling real-time network communication in TaleSpire. By adding their SDK to your game (which we will call “the client”), you can create “rooms.” Other clients can then join the same room. When multiple clients are in the same room, they can send data to each other. The data can be transmitted reliably (via RPC) or unreliably[0]. Photon also has a lot of functionality to help with managing networked GameObjects to trivially keep them in sync. When a room is closed, no information persists.

Photon operates servers worldwide so that the time to send messages between clients is minimized. Running such a service is a huge undertaking, and even the pros struggle with it. Without something like Photon, TaleSpire wouldn’t have happened as we would not have been able to make something similar in the time our funds allowed.

We do have our own backend and data message service, but it is not designed to handle real-time traffic. Instead, we focus on what you can’t do with Photon: communication between rooms and persisting data.

Right, that’s a lot of background, but hopefully, I got across that this service is valuable and is not trivially replaced[1]. But when Photon has issues, we feel it.

That was what happened earlier this week. For about an hour, some people whose campaigns are hosted in the EU were having issues connecting. We were confused as Photon’s status page was not showing a problem[2]. We have confirmed with other developers using Photon that the event occurred and are starting a conversation with Photon’s support to see what we can learn.

It’s somewhat disconcerting to see such issues after Photon being solid for so long, but we aren’t keen to throw out the baby with the bathwater, so we take these things slowly.

Voice chat

As we are talking about network stuff, let’s ramble about voice chat.

Voice chat is an interesting challenge. In principle, we can just find a suitable[3] webrtc client and hook it up. Of course, hooking it up is where it gets fun.

WebRTC is principally p2p. Setting up the connection requires exchanging some messages, so there does need to be a way to do that. Of course, we have our backend, so we can use that to handle the setup (of course, then it’s not pure p2p, but the call will be).

Next, you have NAT traversal. Basically, it is not always possible, so sometimes (let’s say 15% of the time), you can’t have a p2p connection. What to do? Well, WebRTC allows for relays that the problematic client can connect to in those cases. Of course, these relays have to be hosted somewhere (not p2p), and that costs money. You can certainly find people who host free ones (Google does this), but as you can imagine, you need to trust them.

And we are far from done. Let’s talk traffic. We will assume that we have five clients connected, and that all managed to connect peer-to-peer. That means that each person has to send their voice and video data to all four other machines. More players? More overhead. And all of those clients also have to receive data from every other client too.

That traffic adds up real fast! So being ingenious, you decide to change the approach. How about we have some central machine that all the clients send their voice and video to. That machine then has a stream for each player that you can connect to. So if there are ten clients, each client is uploading one stream and receiving nine. That is a significant improvement over the nine outbound and nine inbound approach when communicating p2p. That does mean we just lost p2p. Worse, we now need servers around the world for relaying this information.

Ten media streams per client are still quite a lot. Instead, you could have the previously proposed central server take all the media streams, combine them into a single stream, and make that available to the clients. Now each client only needs two streams, one inbound and one outbound. However, now the servers behind the scenes aren’t just relaying data; they are re-encoding audio and video, which is much more resource-intensive. So the cost of your backend just went up a lot!
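The stream counts for the three approaches above are easy to put side by side. This Python sketch just does the arithmetic from the text; the topology names (“p2p”, “relay”, “mixed”) are my own labels for illustration, not WebRTC terms.

```python
from typing import Tuple


def streams_per_client(clients: int, topology: str) -> Tuple[int, int]:
    """Return (outbound, inbound) media streams for a single client."""
    others = clients - 1
    if topology == "p2p":
        # Everyone sends to, and receives from, every other client.
        return others, others
    if topology == "relay":
        # One upload to the central machine, one download per other client.
        return 1, others
    if topology == "mixed":
        # The server combines everything into a single stream.
        return 1, 1
    raise ValueError(f"unknown topology: {topology}")


for name in ("p2p", "relay", "mixed"):
    print(name, streams_per_client(10, name))
# p2p (9, 9)
# relay (1, 9)
# mixed (1, 1)
```

What the numbers don’t show is where the cost moves to: each step down in client traffic is paid for with more work on servers somebody has to run.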

The above is a clumsy, simplified view of some of the problems in adding voice and video chat. However, these are precisely the kinds of tradeoffs Discord, Zoom, and others make. It is not viable to hand-wave these problems away if you are selling a product. Your customers will care.

And so we go back to TaleSpire. We need voice and video chat. And we are going to use WebRTC to provide p2p calls. However, this will take time. Unity also has a service for voice chat called Vivox. I will look into this as a means to bring voice chat to TaleSpire faster without us having to provide extra infrastructure.

I don’t yet know if it will be a good fit, but it is definitely worth a look. It could even be that we use it as the default for those who don’t want to trade off the overhead for the additional security of p2p.

HeroForge integration

In the last dev-log, I talked about how I had the core HeroForge functionality working, but also that I had written some of it quickly rather than robustly so we could start testing other parts of the system.

My job since then has been rewriting to make things solid. The short version of the problem is that we have code that:

- queries which miniatures we are meant to have

- downloads miniatures

- converts miniatures

- caches miniature data

- deletes miniatures that are no longer needed from the cache

All of these processes are asynchronous, so things can get fun when operations overlap.

For example, let’s say that someone links a miniature to their campaign, and then when trying to link a different mini, accidentally unlinks the first one, realizes their mistake, and links it again. Let us also say they have a good internet connection and that by the time they correct their error, the miniature data has been downloaded and that the conversion has begun (this is a realistic situation).

Now! They unlinked, so we should delete the mini from the cache, but it’s not there yet as it’s still converting. So we can try to cancel the conversion process, but it’s not guaranteed that the message will be processed before the other thread has finished. Instead, let us assume we mark somewhere to delete the mini when it hits the cache.

We can also recall they have now relinked while this was happening. So we do need the mini. So if we delete it, we need to download it and convert it again. And if we do try and download it, we need to make sure we download it to a different folder as deleting the local data takes time too.

I’m belaboring the point a bit, but I hope it’s clear that by assuming different timings of user input, downloads, and processing, we can end up with many different situations to handle. [4]

After a good few flow diagrams and pacing around my room, we have something that behaves reliably. I can’t think of a way to describe it without making this post much longer. The short version is that there is a strict hierarchy between the HeroForge account handling code, the cache manager, and the download manager. The cache also needs to handle the possibility of multiple versions of the same mini being in flight at once (rare case but needs handling).
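To give a flavor of the “multiple versions in flight” problem, here is a deliberately tiny Python sketch. It is not the real design (which has the hierarchy of managers mentioned above); the `MiniCache` class and its methods are hypothetical, and it is single-threaded where real code would need locking. The one idea it shows is giving each link request its own generation number and folder, so a late-finishing conversion from before an unlink can be recognized and thrown away.

```python
class MiniCache:
    """Tracks which download/convert attempt of each mini is still wanted."""

    def __init__(self):
        self._wanted = {}    # mini_id -> generation that is currently wanted
        self._next_gen = 0
        self.ready = {}      # mini_id -> folder holding the converted data

    def link(self, mini_id: str) -> int:
        """User linked the mini: start a fresh attempt with its own generation."""
        self._next_gen += 1
        self._wanted[mini_id] = self._next_gen
        return self._next_gen

    def unlink(self, mini_id: str) -> None:
        """User unlinked the mini: nothing in flight for it is wanted any more."""
        self._wanted.pop(mini_id, None)
        self.ready.pop(mini_id, None)

    def folder_for(self, mini_id: str, generation: int) -> str:
        """Each attempt gets its own folder, so old data can be deleted lazily."""
        return f"{mini_id}.{generation}"

    def conversion_finished(self, mini_id: str, generation: int) -> bool:
        """Called when a conversion completes; returns True if the result is kept."""
        if self._wanted.get(mini_id) != generation:
            return False  # stale attempt: the caller should delete its folder
        self.ready[mini_id] = self.folder_for(mini_id, generation)
        return True
```

In the link, unlink, relink story above, the first conversion finishes with an outdated generation and is discarded, while the second one lands in its own folder and is kept.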

Caches and running multiple copies of TaleSpire

Now I hear you calling out, “caching is just so fun, tell us more!” and to that I say, get help. But also… sure.

Some of you out there run multiple copies of TaleSpire on the same machine. It’s a relatively uncommon case, but it can be helpful for streamers or TV play.

The problem is that TaleSpire caches files (parts of boards, HeroForge minis, etc.) in a specific directory. If we have two copies of TaleSpire running, both are trying to write to those folders simultaneously. Worse, they might try and delete the same stuff too.

So far, we have ignored the problem as conflicts were rare, and the use case was niche. However, these problems will become mainstream with the upcoming package manager.

To handle this, we have to detect whether a cache is in use and, in such a case, make a new cache (or reuse an old one). And we have to do this in a cross-platform fashion.

This is really a variant of the “detect whether my program is already running” problem, so let’s look into it.

We need some construct to signal that a resource is taken across processes. Another wrinkle in the problem is that this not only needs to be cross-platform but ideally needs to be supported in MS and Mono’s DotNet implementations.

If this were an interthread problem, we would use a mutex, but your traditional mutex doesn’t communicate across processes. Windows does have the named mutex, which might work, but isn’t supported on ‘Nix[5]. Linux does support named semaphores, so there might be a route there.

Windows also has file locking, and while Unix OSes don’t tend to (afaik), they usually have advisory locking. This soft construct doesn’t prohibit overwriting but instead indicates that you shouldn’t. As we can query this information for a given file, we can use this as our communication construct.[6]

With this tool in hand, I made a system that does the following on TaleSpire start:

- tries to acquire the primary cache

- if it succeeds, it also tries to clean up any old caches which are not in use[7]

- if it fails, it looks to see if there is an older temporary cache it can claim; otherwise, it makes a new one

Thanks to the locking, we can trust that multiple clients can’t claim the same cache simultaneously.
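The claiming steps above can be sketched like this. It is a Unix-only Python illustration using `fcntl.flock` advisory locks, not the cross-platform .NET code in TaleSpire; the directory names, the `.lock` file, and the `max_temp` limit are all hypothetical, and the cleanup of old caches is left out.

```python
import fcntl
import os


def try_lock(path: str):
    """Return a file descriptor holding an exclusive advisory lock, or None."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        # LOCK_NB makes this fail immediately instead of waiting for the lock.
        fcntl.flock(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
    except BlockingIOError:
        os.close(fd)
        return None
    return fd  # keep this open for as long as the cache is in use


def claim_cache(root: str, max_temp: int = 8):
    """Claim the primary cache if it is free, else the first free temporary one."""
    os.makedirs(root, exist_ok=True)
    for name in ["primary"] + [f"temp-{i}" for i in range(max_temp)]:
        cache_dir = os.path.join(root, name)
        os.makedirs(cache_dir, exist_ok=True)  # reuses an old cache or makes a new one
        fd = try_lock(os.path.join(cache_dir, ".lock"))
        if fd is not None:
            return cache_dir, fd
    raise RuntimeError("no free cache directory")
```

The lock goes away when the descriptor is closed or the process exits, so a crashed copy of the game can’t leave a cache permanently claimed.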

Wrap up

That’s most of the news from me, I think. Naturally, everyone else in the team has plenty going on too, but this log is long enough :)

Hope you are all doing well,

Until next time!

[0] If you are unused to network programming, you might be wondering why unreliable messages would be desirable. The reason is that for many cases, the latest values are all you care about, and older values are nearly useless. Player position is often a good example of this.

Making messages reliable takes a lot of work and sleight of hand performed either by your program or the network protocol. This adds a lot of overhead that you simply can’t afford in most cases in games.

This is a big subject, and I’m butchering it in this little comment, but hopefully, this helps a little.

[1] peer-to-peer is its own challenge, and it has plenty that makes it significantly trickier than the client-server approach. We will tackle it in the future, but it’s not something I expect to go smoothly :D

[2] And still isn’t, which is annoying

[3] It’s got to work and not be a resource hog.

[4] I’ve also left out a bunch of fun details such as file locking and OS/filesystem-specific considerations

[5] We are using Proton on Linux for the foreseeable future, so that case should be easy, but we still need a native client for Mac

[6] This approach is hairy but commonly used.

[7] It leaves a few around as the small cost in disk space is worth it to allow multiple clients to work faster. There would only be multiple caches if there was a time when multiple copies of TS were open at the same time.

TaleSpire Dev Log 322


The weekend was very successful. Ree made significant progress with the UI for the HeroForge integrations, and I worked on the implementation.

I started by fixing an issue causing minis not to be positioned correctly relative to the bases. Next up was scaling. I surveyed our current minis to find reasonable thresholds for picking different ‘default scale’ values for the miniatures. I need to test more minis with this. It will not be perfect, but it should get us started.

Next, I wrote the client-side code that handles unlinking minis from a campaign. Technically it works, but I noticed potential race conditions between asset conversion and cache clearing. This is obviously unsuitable, so I’m writing a manager to mediate access to the cache.

Usually, today would be a workday, but as I worked all Saturday and Sunday, I will take today as a break and get back into this tomorrow morning.

Have a good one folks!

TaleSpire Dev Log 321

Hi again,

As expected, today has been about HeroForge. I’ve added the manager that handles pulling down thumbnails and have been reworking parts of the conversion process.

Hmm, it’s very annoying how little text my day compressed down to :D

I’ve now merged our HeroForge branch into master. This is great as we are forced to deal with all the broken things. My plan for today is to help out with the UI where I can and otherwise to find and fix bugs.

The first order of business is definitely fixing some incorrect texture formats we are picking on load.

I’ll keep you posted,

Peace.

TaleSpire Dev Log 320

Good evening all,

I am exhausted right now but didn’t want to leave another day without a dev log, so here is a tiny one.

I’ve continued work on trying to make some support tooling. It’s clearly one of those less fun things that my brain doesn’t want to do, so it’s been a bit of an uphill struggle.

I’m over at Ree’s for a few days now, and tomorrow we dive into HeroForge stuff again. I’m very excited about this and will let you know how it goes.

Right, I’m off to bed.

Seeya folks