From the Burrow

TaleSpire Dev Daily 8

Today’s daily is short.

FAILURE!

So far today I have failed to make a fill implementation I’m happy with. I know the exact approach I’m going for and have made some progress on the steps after extracting the reachable region, but the damn fill has been eluding me.

In the last few minutes I’ve started another way which, whilst it will require a bit more bouncing around the tree, is a much simpler implementation.

Hopefully tomorrow it will be working and I can yak about that. Until then it’s just a day with a bunch of failures. No worries of course, failures small and large are pretty much a constant when coding new things so it’s just a sign of doing things :)

Goodnight

TaleSpire Dev Daily 7

Here we are again. This time I don’t have much to show as I spent most of the day at the whiteboard. So instead I’ll talk about the problem I’ve been mulling over.

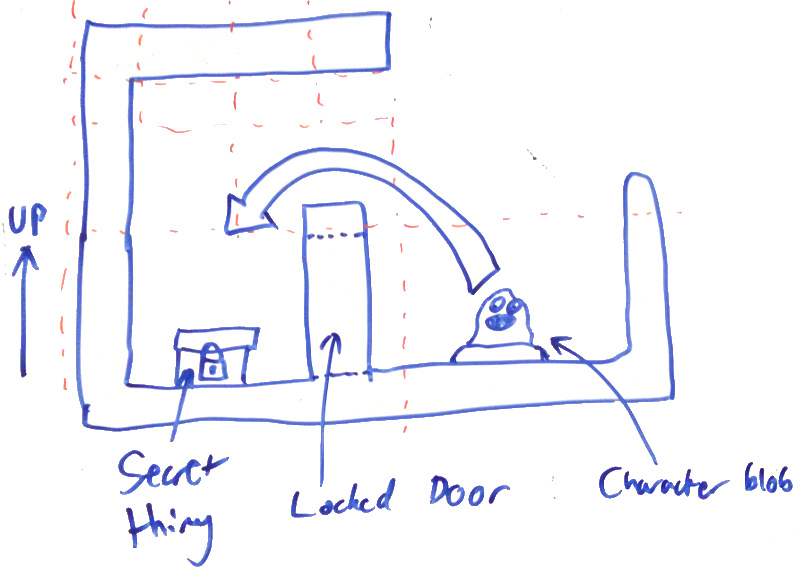

When a character piece (henceforth just called a ‘character’) is placed on a tile we want to show every tile it could possibly reach, regardless of distance. We don’t want to show things behind walls or locked doors. Also, we need to do this quickly.

Actually let’s pause to clarify something. It is going to be tempting to redefine the problem given the issues that we are about to look at. You may not even like the idea of the behavior and want to change it for that reason. Please, for now, trust that we have tested this in game and it feels nice, so for now this is the problem we are trying to solve.

Alright, back to the snooker..

Before the game had floors it was effectively 2d. The approach then was to flood fill, performing raycasts to see if there was an occluder (a wall) that would stop the progression. It might be rather wasteful but it worked well enough for a demo, and you can get away with a lot of raycasts per frame when your game is as simple (in terms of how much stuff is going on) as ours.
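To make the old 2d approach concrete, here is a minimal Python sketch of that kind of flood fill. It is only an illustration: `is_blocked` is a made-up stand-in for the raycast (in the game that would be a Unity physics query), and the grid size and wall representation are assumptions for the example.

```python
from collections import deque

def flood_fill(start, width, height, is_blocked):
    """Return every tile reachable from `start` on a width x height grid.

    `is_blocked(a, b)` is a hypothetical stand-in for the raycast: it
    returns True when an occluder sits between adjacent tiles a and b.
    """
    reachable = {start}
    frontier = deque([start])
    while frontier:
        x, y = frontier.popleft()
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if not (0 <= nx < width and 0 <= ny < height):
                continue  # off the board
            if (nx, ny) in reachable:
                continue  # already visited
            if is_blocked((x, y), (nx, ny)):
                continue  # a wall stops the fill here
            reachable.add((nx, ny))
            frontier.append((nx, ny))
    return reachable

# Example: a 4x1 corridor with a wall between tiles (1, 0) and (2, 0).
walls = {frozenset({(1, 0), (2, 0)})}
seen = flood_fill((0, 0), 4, 1, lambda a, b: frozenset({a, b}) in walls)
```

Every step to a new tile costs one `is_blocked` call, which is where the "lot of raycasts per frame" comes from.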

However, now that we have added floors everything changes: we have a third dimension to reckon with and everything gets much more expensive. One other interesting detail is that, although we have separate floors, we also want the users to be able to make features that reach through to other floors. For example, imagine a grand hall with an obsidian pyramid in the center. We want to show the whole pyramid from the ground floor even though it is several floors high, as this gives the moment gravitas that would be lost if you only saw a tenth of it.

One basic thing we did in the 2d version and will still do in the 3d version is divide the world up into zones. A zone is a 3d region of a certain number of tiles in the X and Z dimensions and some height (let’s say 10 floors’ worth) from a given floor.

With this known we specify the subproblem as: Find every reachable place in the zone and which zones we can access from this zone.
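As a small illustration of the zone idea, here is a Python sketch that maps a tile coordinate to the zone containing it. The zone dimensions and the `zone_key` name are assumptions for the example, not values from the game.

```python
ZONE_SIZE_XZ = 81  # tiles per zone along X and Z (assumed value)
ZONE_HEIGHT = 10   # floors per zone (assumed value)

def zone_key(x, floor, z):
    """Map a tile coordinate to the (hypothetical) key of its zone.

    Floor division keeps the mapping consistent for negative coordinates.
    """
    return (x // ZONE_SIZE_XZ, floor // ZONE_HEIGHT, z // ZONE_SIZE_XZ)

# Tiles in the same 81 x 10 x 81 block share a key.
same_zone = zone_key(0, 0, 0) == zone_key(80, 9, 80)
```

With a key like this, the per-zone result ("what is reachable here, and which neighbouring zones can we get to") could live in a dictionary keyed by zone.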

Also it’s worth noting that the player will be moving the character frequently so the result should be cachable or very cheap to recompute.

In my mind we need to know what is solid and what is empty space. Let’s say the zone was 81x81 tiles in size and just as high as it is wide. The tiles are 1 Unity unit in size so that’s 81x81x81 units. Assuming we only need to know if a unit cube is solid or not, a dumb solution would require us to store 531441 booleans (true or false) to say whether each part of the zone is solid or empty. Clearly for large boards this is untenable.

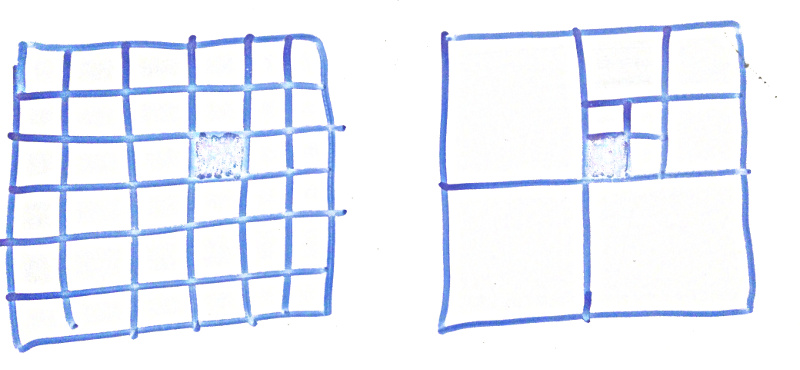

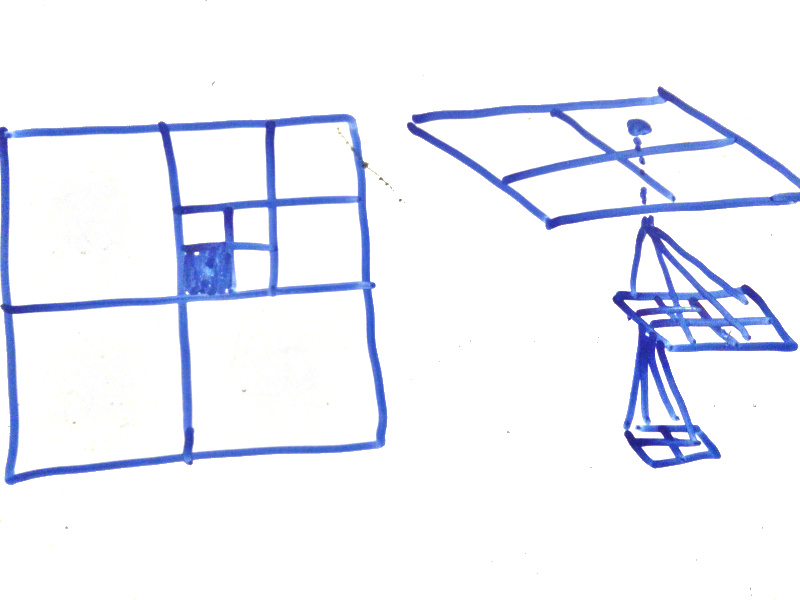

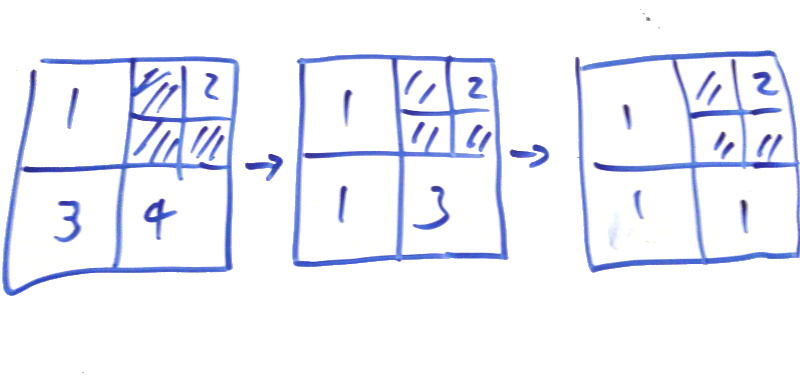

One common and reliable way to store 3d spatial data more efficiently is using an octree. It recursively subdivides space into 8 equal subspaces down to the required resolution. So (for a 2d example), rather than having the 2d array on the left with 36 slots we have the approach on the right with 10 slots as we don’t subdivide if there is no new information inside.

yup it’s not a totally accurate drawing but I hope ya get the idea

Each node in the quadtree either has a marker saying it’s solid/empty or has 4 child nodes.

For octrees it’s the same deal but with 8 nodes and 3 dimensions.
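Here is a minimal Python sketch of the quadtree version, just to show the "don’t subdivide if there is no new information" rule. The representation (a bool for a leaf, a list of 4 children otherwise) and the power-of-two grid size are assumptions made for the example.

```python
def build_quadtree(grid, x, y, size):
    """Build a quadtree over the size x size square of `grid` at (x, y).

    A leaf is True (solid) or False (empty); an internal node is a list of
    4 children. `size` must be a power of two.
    """
    first = grid[y][x]
    uniform = all(grid[y + dy][x + dx] == first
                  for dy in range(size) for dx in range(size))
    if uniform:
        return first  # no new information inside, so don't subdivide
    half = size // 2
    return [build_quadtree(grid, x, y, half),
            build_quadtree(grid, x + half, y, half),
            build_quadtree(grid, x, y + half, half),
            build_quadtree(grid, x + half, y + half, half)]

def count_leaves(node):
    """Count the slots the tree actually stores."""
    if isinstance(node, bool):
        return 1
    return sum(count_leaves(child) for child in node)

# A 4x4 grid with a single solid tile in the top-left corner.
grid = [[False] * 4 for _ in range(4)]
grid[0][0] = True
tree = build_quadtree(grid, 0, 0, 4)
```

For this grid the tree stores 7 leaves instead of 16 cells. An octree would do the same thing with 8 children per node and a third coordinate.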

So, now we have our data-structure we can fill it with information about what is solid and what isn’t. Then we could do something rather similar to the old flood fill, only this time we start from the character position, find what region in the octree they are in and walk to the neighboring regions.

Most of the time we move somewhere it’s going to be one of the places we have already searched so we can cache the result of the previous walk. We can also cache the octree itself.

So far so good, but there are issues:

a. Our 3d search can search over walls we need to limit that but that will limit their

b. Not having to search to work out what parts of the quadtree/octree are accessible would be cool. They have that built-in recursive structure, so can we leverage that?

c. Tiles are placed on the floor itself or on other tiles. Can we propagate information between them on creation that could help us? Could we serialize it when saving the level so we don’t have to recompute on load?

b gets rather tantalizing. If we could assign each node in the tree an integer id, we could then take the min of the neighbours and it would resolve to all nodes having the same id if they are reachable. The resolve takes time though. Could we find a way to do this in 1 (or some fixed low number of) passes? Is it worth it?
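To show what that resolve looks like in its most naive form, here is a Python sketch. The adjacency dictionary stands in for "which empty nodes touch each other", and the function name and example layout are made up for illustration.

```python
def resolve_ids(neighbours):
    """Label-propagation sketch: `neighbours` maps node id -> ids it touches.

    Every node starts with its own id and repeatedly takes the minimum of
    its own label and its neighbours' labels until nothing changes.
    Returns (labels, number_of_passes).
    """
    labels = {node: node for node in neighbours}
    passes = 0
    changed = True
    while changed:
        changed = False
        passes += 1
        for node, adjacent in neighbours.items():
            best = min([labels[node]] + [labels[n] for n in adjacent])
            if best < labels[node]:
                labels[node] = best
                changed = True
    return labels, passes

# Nodes 0-1-2 form a chain of open space; 3 and 4 are walled off together.
open_links = {0: [1], 1: [0, 2], 2: [1], 3: [4], 4: [3]}
labels, passes = resolve_ids(open_links)
```

Nodes that end up with the same label can reach each other. The number of passes depends on the layout and on the order the nodes are visited in, which is exactly the cost the questions above are worried about.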

Unrelated but important; if you want to be fast then cache locality matters. Could we store the data in a way that helps our intended access patterns (searching)? One example could be using a Z-order curve to linearize the data and using something like a skiplist to keep the data sparse.. again would this actually help? It all depends on our data and how we access it.
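For reference, a Z-order (Morton) index is just the bits of the coordinates interleaved. Here is a minimal Python sketch, assuming 7 bits per axis (enough for coordinates up to 127, so it covers an 81-wide zone). The function name and bit width are assumptions for the example, and it leaves out the sparse storage part entirely.

```python
def morton3(x, y, z, bits=7):
    """Interleave the low `bits` bits of x, y and z into one Z-order index."""
    code = 0
    for i in range(bits):
        code |= ((x >> i) & 1) << (3 * i)       # x takes bit 0 of each triple
        code |= ((y >> i) & 1) << (3 * i + 1)   # y takes bit 1
        code |= ((z >> i) & 1) << (3 * i + 2)   # z takes bit 2
    return code

# The 8 cells of a 2x2x2 block land on 8 consecutive indices.
block = sorted(morton3(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1))
```

Cells that are close in 3d tend to end up close in the linear order, which is the property that might help cache locality when searching.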

I’ve been asking a lot of questions as it frames the issue nicely. I do have some WIP answers, but explaining them will balloon this post even more, so for now I’ll just say I hope to have something I can show by the end of Sunday. I’d also like to stress that these problems aren’t novel: there is a lot of literature and most games will be dealing with much more interesting cases than this.. still it’s fun to think about.

Until tomorrow, Ciao

TaleSpire Dev Daily 6

It was a slow day today. I carried on working on the fog-of-war system which is what works out where in a board a given character could get to. It’s being rewritten as the version we have demoed so far did not handle multiple floors.

The behavior needed is that, when a character is placed, everywhere that is accessible in the board should be visible. To do this we are splitting up the (occupied parts of the) board into zones and in each zone we have a structure that describes where is solid. This makes it cheaper to search the zone when ascertaining what you can see, as we don’t need to hammer Unity’s collision system all the time.

The fiddly thing is we want it to be fast and so I’m trying to balance cache locality of data with wanting a pretty sparse data-structure. I had wanted to make use of Unity’s new job system and there you need decent size chunks of ‘flat’ data. For that reason I had wanted to avoid octrees and look at simpler bucketing instead. My current approach is pretty crappy but I really need to get something working so I can start measuring.

The good part is that I added the Zones class and got the low res collision info from yesterday written into the Zone. Tomorrow I will try and not think about how bad performance will be and just write the search.. or if I can’t then I will take the search back to the whiteboard :)

Until then, seeya

TaleSpire Dev Daily 5

Hey all,

The planning weekend went really well. We whiteboarded out the new visibility system and the code that handles where is walkable. There will no doubt be changes to the design but we can see how that goes this week. We also ran through the core user journey and planned out the UI, data requirements and systems that still need making. Whilst I don’t have specifics for you right now, I am even more confident that you folks will have some form of early access in your hands this year.

I started the Steam login integration. Thanks to Steamworks.NET the Unity side was easy and the server side was basically just a GET request, so no issues there.

I do want to clarify that Steam will not be the only way to log in. We don’t want to force people into a specific platform so we will have a few options when the release comes out. It may be what we use for the alpha though, we shall see.

Right, I’m tired so I’m going to stop coding now and chill.

Peace

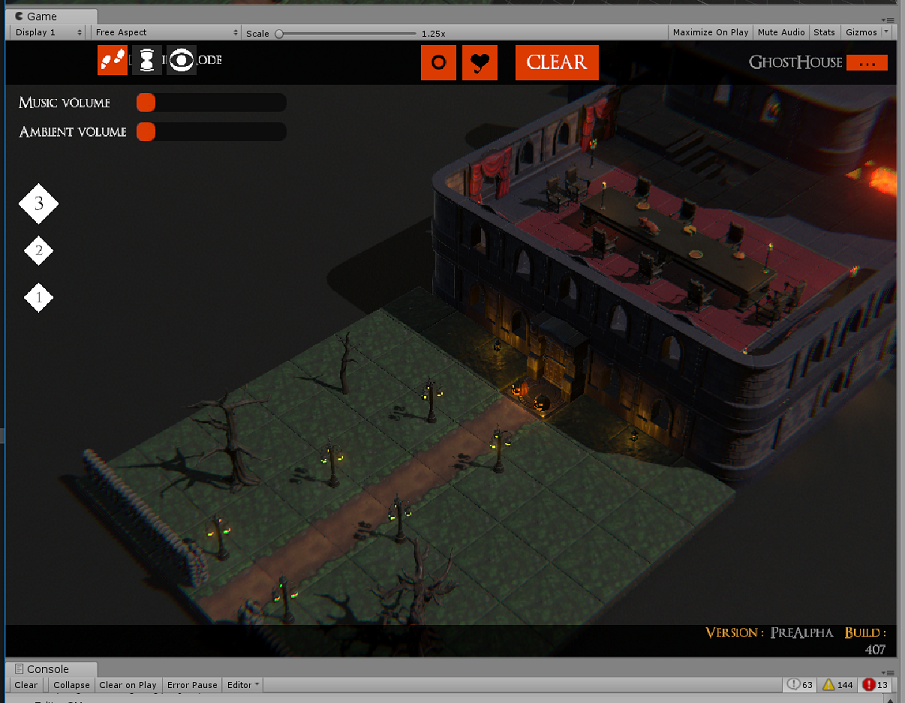

p.s On the left is a test of some generated lower res information to be used in the visibility system.

TaleSpire Dev Daily 4

Today I put aside the undo/redo work and focused on fixing little bugs instead. As I’m off to @jonnyree’s place this weekend I really wanted to get the recent stuff as stable as possible so we can merge it to master before I dive into another chunk of the project.

That mainly involved fixing some bugs around network IDs, a dumb mistake in serialization, and a bunch of little cleanups.

Hmm, there’s not really much else to say. Ah well, there are plenty of days like this.

Seeya folks, back on Monday with the new plans

Peace

TaleSpire Dev Daily 3

Allo again,

Today wasn’t the most satisfying. I wanted to prototype the undo/redo scheme I had doodled out but some of how floors were implemented was making it difficult. Even though we are redesigning the floor system this weekend, it was more work to work around the issues than just tweak them so, after a couple of hours reaching that realization, I spent a good chunk of the day yak shaving.

With that somewhat out of the way I had a go at implementing the scheme. I already had working local undo/redo, so the task was making it work with multiple people editing simultaneously.

One example issue (which I mentioned yesterday) can be summarized like this:

There are two GMs, A & B, and each have their own undo/redo history

- GM A places a floor-tile

- GM A places a crate

- GM B deletes the crate

- GM A presses undo

One possibility is that when B deletes the crate, the history event for placing the crate in A’s history could be removed. This way A can’t attempt to undo or redo something that has gone.

I’m a bit averse to deleting stuff from the history, however, as you lose data. Instead I wanted to try putting a flag on the history event that inhibits it. When A hits ‘undo’ it will skip over the inhibited event and do the one before it. The nice side effect is that if B was to undo the delete, we can uninhibit the history event in A’s history again, allowing undo and redo to proceed as before.
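A minimal Python sketch of the inhibit-flag idea, assuming a per-GM history list with an index. The class and field names are invented for the example, and it deliberately ignores redo and the edge cases.

```python
class HistoryEvent:
    def __init__(self, name):
        self.name = name
        self.inhibited = False  # set when another GM invalidates this event

class History:
    def __init__(self):
        self.events = []
        self.index = 0  # number of events currently applied

    def record(self, event):
        del self.events[self.index:]  # a new action discards the redo tail
        self.events.append(event)
        self.index += 1

    def undo(self):
        """Undo the most recent event that is not inhibited; return it or None."""
        while self.index > 0:
            self.index -= 1
            event = self.events[self.index]
            if not event.inhibited:
                return event  # a real system would revert the event here
        return None

a = History()
floor, crate = HistoryEvent("place floor-tile"), HistoryEvent("place crate")
a.record(floor)
a.record(crate)
crate.inhibited = True  # GM B deletes the crate
undone = a.undo()       # GM A presses undo: skips the crate, undoes the floor
```

Nothing is removed from `a.events`, so clearing the flag later (when B undoes the delete) makes the crate event usable again.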

The idea has edge cases though and, whilst I have seen a few of them, I’m not going to bog this post down with them yet. I’ll do some more experimentation and then report back to you all :).

As for tomorrow I think I’m going to leave this on a branch and get back to a few other bugs that are more likely to be an issue when working this weekend. There is one rather nasty one regarding level loading and reuse of supposedly unique ids :)

Until then,

Peace

TaleSpire Dev Daily 2

Today I refactored how we spawn and sync multiple tiles of the same kind which is used when dragging out tiles when in build mode. The new implementation uses less memory to synchronize but more importantly has a single entry in the undo/redo history so you can undo a slab of tiles in one go. In the process of doing this I also simplified one way of sending messages which means there is less boilerplate code to write (less code less bugs).

The last third of the day was mostly spent doodling on the whiteboard to work out a nice way of handling how edits made by multiple game masters can cause conflicts in the undo/redo history.

A simple case is as follows.

There are two GMs, A & B, and each have their own undo/redo history[0]

- GM A places a floor-tile

- GM A places a crate

- GM B deletes the crate

- GM A presses undo

What should happen?

If B hadn’t deleted the crate then A’s undo would have removed the crate. But the crate is already gone.

If we do nothing but move back in the history then A will be confused. A successful input without an output makes the user feel like either they did something wrong or the system is broken.

It feels like the sane thing is that if nothing can be done we skip to the previous entry. But then what do we do with redos?

Also if GM A dragged out 20 tiles and B deletes just one, what is the correct behavior? I think I’d expect the rest of the tiles to be removed. So then does redo restore them all?

I think I have a solution for this but I only got it partially finished today. I’ll get back into this tomorrow and see how it feels.

If that doesn’t feel nice then I am considering briefly showing a ‘ghost’ of the deleted object so you at least get the indication that something happened.

Right, time to get some food and get ready for the lisp stream in a few hours.

Ciao.

[0] Separate undo/redo is pretty important to avoid people undoing each other’s work, this rapidly escalates to bloodshed.

Boring Caveats

These are just my daily notes as I work on TaleSpire. It’s so cool that people are interested in the game and it’s way more fun to share this stuff than just keep it away, however nothing said here should be taken as any kind of promise that a thing will exist in a given release or work in the way stated. Stuff changes constantly and I’m wrong about at least 1000 things a day so whatever I’m stoked about today may well be tomorrow’s nightmare.

So yeah, that’s that. Back to the code :)

TaleSpire Dev Daily 1

Alright, another day down.

Today was primarily spent adding the undo/redo system which is looking pretty good now. A few bugs to iron out but nothing that looks terrifying.

As you’d probably imagine it’s a simple list of history actions and an index of where in the history you currently are. Each event has Undo and Redo methods and some serialized state for the thing that was being added/removed/etc. This is a dirt simple approach and can get pretty unwieldy for systems with many more kinds of actions, but for our little thing it’s just fine.
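For anyone who hasn’t built one of these, here is a minimal Python sketch of that shape: a list of events plus an index. The `PlaceTile` event, the dictionary used as a board and all the names are assumptions for the example rather than the game’s actual code.

```python
class PlaceTile:
    """Hypothetical history event; `state` stands in for the serialized data."""
    def __init__(self, board, pos, state):
        self.board, self.pos, self.state = board, pos, state

    def redo(self):
        self.board[self.pos] = self.state

    def undo(self):
        del self.board[self.pos]

class History:
    def __init__(self):
        self.events = []
        self.index = 0  # events[:index] are applied, events[index:] can be redone

    def do(self, event):
        del self.events[self.index:]  # a fresh action drops the redo tail
        event.redo()
        self.events.append(event)
        self.index += 1

    def undo(self):
        if self.index > 0:
            self.index -= 1
            self.events[self.index].undo()

    def redo(self):
        if self.index < len(self.events):
            self.events[self.index].redo()
            self.index += 1

board = {}
history = History()
history.do(PlaceTile(board, (0, 0), "floor"))
history.do(PlaceTile(board, (1, 0), "crate"))
history.undo()  # board now only holds the floor tile
history.redo()  # the crate is back
```

Each new kind of action needs its own event class with matching undo and redo, which is why this can get unwieldy as the number of action kinds grows.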

During making this I noticed that the board synchronization code was being too conservative about when it could send the board so now it gets sent much sooner after loading from disk. The basic run down goes something like this:

Tiles load their assets (visuals and scripts) asynchronously so it can be a relatively long time (a few frames) after the tile is created before the Lua scripts inside are running. Because of this, when deserializing we cache the state intended for this asset until the Lua scripts are fully set up.

When loading levels we obviously want to send the full state to the other players, so we waited for all the assets to be fully loaded before sending the level to other players. This was pointless as a given tile can only be in 3 states regarding initialization.

- it’s fully set up and running

- it’s been initialized, has some cached state, and is still loading

- it’s been initialized, has no cached state, and is still loading (like when you first place it)

In all of these cases we can serialize as, even if it hasn’t finished loading, we have the pending state or we know it’s going to have default state.
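The three cases above can be sketched in Python like this. It is only an illustration of the decision: the `Tile` and `Script` classes, `get_state` and the empty default state are all hypothetical stand-ins, with `Script` playing the role of a running Lua script.

```python
DEFAULT_STATE = {}  # assumed default for a freshly placed tile

class Script:
    """Stand-in for a running Lua script."""
    def __init__(self, state):
        self.state = state

    def get_state(self):
        return self.state

class Tile:
    def __init__(self):
        self.script = None        # set once the scripts have finished loading
        self.cached_state = None  # state from deserialization, not yet applied

def serialize_tile(tile):
    """Pick the state to send, whichever of the three states the tile is in."""
    if tile.script is not None:
        return tile.script.get_state()  # fully set up and running
    if tile.cached_state is not None:
        return tile.cached_state        # still loading, has pending state
    return DEFAULT_STATE                # still loading, nothing cached

running, loading, fresh = Tile(), Tile(), Tile()
running.script = Script({"door": "open"})
loading.cached_state = {"door": "locked"}
states = [serialize_tile(t) for t in (running, loading, fresh)]
```

None of the branches has to wait for loading to finish, which is why the board can be sent right away.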

We could also just sync the level data from the local file and append the additional setup data (character network ids etc), and we may go that way. For now, however, the runtime cost of load-then-sync is so low that it’s not worth it.

Tomorrow I will be refactoring dragging out tiles. Simple stuff but it needs some attention so it plays nicer with sync and undo.

Seeya tomorrow!

TaleSpire Dev Thang 0

Evening all! I really need to start doing my daily signoffs somewhere people can see.. so here we are!

Most of my work these days is rewriting systems from the prototype you may have seen on the streams. As you can imagine there are lots of things that were hacked in to see how they would feel but were never fleshed out. Today I was working on the sync of state from scriptable tiles. Currently only the state-machine script we are using for the doors and chests is using this change but it’s now easy for us to expand on.

I also finally let my partner try out the building system (see it’s not just you folks who haven’t been let in :D) and have a big ol’ list of things to change. Many are things we knew about but it’s always good to get fresh eyes on it.

This weekend I’m off to @jonnyree’s place so we can plan out the rewrite of the floor and fog-of-war systems so expect news on that next week.

Tomorrow I think I’m going to look at basic undo-redo and see what feels nice when you have multiple people editing the board at the same time.

That’s it for now.

Peace

p.s

Notes to Self Part N: SSL Checker

I will get back to blogging at some point, I kinda miss it.

However today I just need to note that this guy is super handy for checking that a site’s certificates are set up properly.

https://www.sslshopper.com/ssl-checker.html

At least it’s super useful for a noob like me.