From the Burrow

Windows emacs setup

My mate was looking at using emacs on Windows, so I wrote a little ‘how to’. I then added my own opinions on what to start with, so it got bigger. Made sense to just dump it here:

Note

I’m probably out of date! I just saw that you can install emacs via pacman in msys, which might handle a bunch of the PATH & environment variable shit for you. I’m going to look into this and then update this guide.

Install

- Download this chap: http://ftp.gnu.org/gnu/emacs/windows/emacs-26/emacs-26.1-x86_64.zip

- There is no install process, so just extract it into c:\ so you have a folder like c:\emacs-26.1

- Open the c:\emacs-*\bin folder

- Copy the path to that directory

- Add it to the PATH environment variable

- Make a folder called ‘home’ in c:\ (or, if you already have one for msys, skip this step). I do this because twice I’ve had Windows shit up after updates and tell me my account isn’t mine anymore, and I hate fighting that shit

- Add an environment variable called HOME and set it to your new (or msys) ‘home’ directory

- Basic setup is done, but there are some things you will want.

Package manager

Don’t download packages yourself; keeping them current is a pain. emacs comes with a package manager, but the official package source is a little behind the times, so we will add one that is much more used by the community: ‘melpa’.

See this video for how to use and install it: https://www.youtube.com/watch?v=Cf6tRBPbWKs

Short version is:

- Hit ‘M-x’ [0], which will open the minibuffer at the bottom of emacs, type ‘customize’ and hit return.

- Type ‘package’ in the search bar and hit return.

- Scoot your cursor down to the arrow next to ‘Package Archives’ and hit return to open that subtree.

- Hit return on the ‘INS’ button and add ‘melpa’ as the archive name and ‘http://melpa.org/packages/’ as the URL.

- Hit ‘C-x C-s’ to save (which means: hold down control and press ‘x’, then ‘s’).

This will have edited your .emacs file in your ‘home’ directory. This is nice, as lots of packages use the customize system and it’s often friendlier than searching docs for the right thing to edit.

Magit (reluctantly optional)

- Restart emacs (rarely necessary, but I want to be sure everything is fresh), hit ‘M-x’, type ‘list-packages’ and hit return.

- Give it a second to pull the package list and then install ‘magit’ (see that video for a guide on how to do that).

- This is the nicest damn git client; I’d install emacs for this even if I wasn’t using it as a text editor.

Biased Baggers’ .emacs file additions (optional)

Open your .emacs file and paste the following at the top of the file.

It looks like a lot, but it’s just shit I’ve slowly accrued whilst using emacs.

```elisp
;; so package manager is always good to go
(package-initialize)

;; utf8 is a good default
(setenv "LANG" "en_US.UTF-8")

;; when you start looking for files it's nice to start in home
(setq default-directory "~/")

;; Using msys?
;; to make sure we can use git and stuff like that from emacs
(setenv "PATH"
        (concat
         ;; Change this to your path to the MSYS bin directory
         "C:\\msys64\\usr\\bin;"
         (getenv "PATH")))
;; setenv only changes the PATH that subprocesses see; emacs itself
;; finds programs via exec-path, so add the same directory there too
(add-to-list 'exec-path "C:/msys64/usr/bin")

;; Fuck that bell
(setq ring-bell-function #'ignore)

;; Turn off the menu bars, embrace the keys :p
(tool-bar-mode -1)
(menu-bar-mode -1)

;; Don't need emacs welcome in your face at every start
(setq inhibit-splash-screen t)

;; typing out 'yes' and 'no' sucks, use 'y' and 'n'
(fset 'yes-or-no-p 'y-or-n-p)

;; Highlight matching paren
(show-paren-mode t)

;; This might be out of date now, need to ask kristian
(setq column-number-mode t)

;; You can now use shift+arrow-keys to move between split windows
;; (pass 'meta to windmove-default-keybindings if you'd rather use meta)
(windmove-default-keybindings)

;; Kill that bloody insert key
(global-set-key [insert] 'ignore)

;; Stop shift mouse click opening the font window
;; (and control mouse click opening the buffer menu)
(global-set-key [(shift down-mouse-1)] 'ignore)
(global-set-key [(control down-mouse-1)] 'ignore)

;; C-c C-g now runs 'git status' in magit. Super handy
(global-set-key (kbd "C-c C-g") #'magit-status)

;; Jump to matching paren. A touch hacky, but I nabbed it from somewhere and it has
;; worked well enough for my stuff. Probably something better out there though
;; (this is language independent though).
;; Move to one bracket and hit 'C-c )' to jump to the other bracket
(defun goto-match-paren (arg)
  "Go to the matching paren if on (){}[], similar to vi style of %."
  (interactive "p")
  ;; first, check for "outside of bracket" positions expected by forward-sexp, etc.
  (cond ((looking-at "[\[\(\{]") (forward-sexp))
        ((looking-back "[\]\)\}]" 1) (backward-sexp))
        ;; now, try to succeed from inside of a bracket
        ((looking-at "[\]\)\}]") (forward-char) (backward-sexp))
        ((looking-back "[\[\(\{]" 1) (backward-char) (forward-sexp))
        (t nil)))

(global-set-key (kbd "C-c )") #'goto-match-paren)
```
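Not something to paste into your .emacs, but for the curious: the elisp above leans on emacs’s own sexp movement, while the core of bracket matching is just depth counting. A minimal Common Lisp sketch of that on a plain string (matching-bracket is a hypothetical helper, not an emacs function):

```lisp
(defun matching-bracket (string pos)
  "Return the index of the bracket matching the one at POS in STRING, or NIL.
Handles (), [] and {} by counting nesting depth. Unlike emacs's
forward-sexp it knows nothing about strings or comments."
  (let* ((opens "([{")
         (closes ")]}")
         (ch (char string pos))
         (open-index (position ch opens))
         (close-index (position ch closes)))
    (flet ((scan (indices start-ch partner-ch)
             ;; +1 for each START-CH, -1 for each PARTNER-CH; the depth
             ;; first returns to zero at the matching bracket.
             (let ((depth 0))
               (dolist (i indices nil)
                 (let ((c (char string i)))
                   (cond ((char= c start-ch) (incf depth))
                         ((char= c partner-ch)
                          (decf depth)
                          (when (zerop depth)
                            (return i)))))))))
      (cond (open-index                 ; on an opener: scan forwards
             (scan (loop for i from pos below (length string) collect i)
                   ch (char closes open-index)))
            (close-index                ; on a closer: scan backwards
             (scan (loop for i from pos downto 0 collect i)
                   ch (char opens close-index)))
            (t nil)))))
```

Scanning forwards from an opener, or backwards from a closer, lets one function handle all three bracket types.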

ssh-agent hack (only needed if you have the issue I did)

Windows is a PITA with some of this stuff. On one machine I have emacs using the bash provided with git, rather than a dedicated msys (or whatever) install, and I got some confusing ssh-agent issues. So I just made a Windows shortcut that runs emacs from git bash, and that helped. I get prompted for my ssh-agent password on launch (which I do once a day) and then I’m free to work.

The shortcut just pointed to: "C:\Program Files\Git\git-bash.exe" -c "emacs-25.2"

Control Key (optional)

The control key is in the wrong place and it’s not good for the hand to keep having to reach for it. Let’s make capslock an extra control key.

Make a file called control.reg and paste this into it:

```
Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Keyboard Layout]
"Scancode Map"=hex:00,00,00,00,00,00,00,00,02,00,00,00,1d,00,3a,00,00,00,00,00
```

Save it, run it and restart windows for it to take effect.
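For the curious, here is how that Scancode Map value is put together, following Microsoft’s documented layout as I understand it: 8 bytes of zero header (version and flags), a 4-byte count of entries including a terminating null entry, then one 4-byte entry per remapping holding the new scancode followed by the key being remapped, then four zero bytes, all little-endian. Caps Lock is scancode 0x3a and Left Control is 0x1d. A small Common Lisp sketch that rebuilds the string above (scancode-map-hex is just an illustrative helper):

```lisp
(defun scancode-map-hex (mappings)
  "Build the hex string for a Windows \"Scancode Map\" registry value.
MAPPINGS is a list of (SOURCE . TARGET) scancode pairs: pressing the key
with scancode SOURCE will send TARGET instead."
  (let ((bytes '()))
    (flet ((emit (n byte-count)
             ;; little-endian: least significant byte first
             (dotimes (i byte-count)
               (push (ldb (byte 8 (* 8 i)) n) bytes))))
      (emit 0 4)                        ; header: version
      (emit 0 4)                        ; header: flags
      (emit (1+ (length mappings)) 4)   ; entry count, incl. the terminator
      (loop for (source . target) in mappings
            do (emit target 2)          ; the scancode to send...
               (emit source 2))         ; ...when this key is pressed
      (emit 0 4))                       ; null terminator entry
    ;; two lowercase hex digits per byte, comma separated
    (format nil "~{~(~2,'0x~)~^,~}" (nreverse bytes))))
```

Adding more pairs to the list (and so bumping the count) is all it takes to remap further keys.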

Done (optional :p)

Save and restart emacs again. Hopefully there are no errors (bug me if there are).

Remember that emacs is your editor: nothing is too stupid if it makes your experience better. For example, I kept mistyping certain key combos, so I bound the combos I kept hitting by mistake to the same functions. It’s small, but it makes me faster.

That’s all for now, seeya!

[0] M stands for meta and is the Alt key (the naming comes from the old Lisp machine keyboards, iirc).

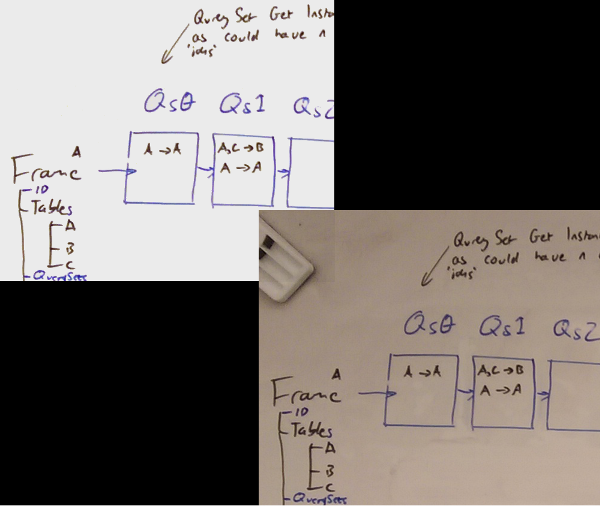

Cleaning up a photo of a whiteboard

I have small, bad handwriting, so some of the automatic methods out there really didn’t work for me.

This is an excellent start:

https://gist.github.com/adsr303/74b044534914cc9b3345920e8b8234f4

In case that gist is gone:

Duplicate the base image layer.

Set the new layer's mode to "Divide".

Apply a Gaussian blur with a radius of about 50 (Filters/Blur/Gaussian Blur...).

Note: alternatively, just perform a ‘difference of gaussians’ on the image, setting the first radius to 70 and the second to 1.

I used a Gaussian blur with radius 70 in both directions. Mode is a dropdown near the top of the layers panel.
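Why this works, as far as I understand it: Divide mode gives roughly image / blurred-copy, so big, slowly changing lighting cancels out towards white, while thin pen strokes (which the blur smears away) stay dark. A minimal Common Lisp sketch of that on a 2D array of grayscale values in [0, 1]; it swaps in a simple box blur for GIMP’s Gaussian to stay short, and box-blur and divide-by-blur are illustrative names:

```lisp
(defun box-blur (image radius)
  "Average each pixel of the 2D IMAGE with its neighbours within RADIUS,
clamping coordinates at the edges. A cheap stand-in for a Gaussian blur."
  (destructuring-bind (h w) (array-dimensions image)
    (let ((out (make-array (list h w) :element-type 'double-float
                                      :initial-element 0d0)))
      (dotimes (y h out)
        (dotimes (x w)
          (let ((sum 0d0) (n 0))
            (loop for dy from (- radius) to radius do
              (loop for dx from (- radius) to radius do
                (incf sum (aref image
                                (max 0 (min (1- h) (+ y dy)))
                                (max 0 (min (1- w) (+ x dx)))))
                (incf n)))
            (setf (aref out y x) (/ sum n))))))))

(defun divide-by-blur (image radius)
  "Divide IMAGE by a blurred copy of itself, clamped to 1.0.
Slowly varying lighting cancels to ~1.0 (white); thin dark strokes stay dark."
  (let ((blurred (box-blur image radius)))
    (destructuring-bind (h w) (array-dimensions image)
      (let ((out (make-array (list h w) :element-type 'double-float
                                        :initial-element 0d0)))
        (dotimes (y h out)
          (dotimes (x w)
            (setf (aref out y x)
                  (min 1d0 (/ (aref image y x)
                              (max 1d-6 (aref blurred y x)))))))))))
```

The large blur radius is the important part: it needs to be much wider than a pen stroke, so the strokes barely register in the blurred copy.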

Next, pick a background color and paint over the worst offending background features: stuff like magnets and erasers.

Then flood fill with that color to normalize as much as possible.

Then flood fill with white. This should be pretty effective now.

Open up the hue/saturation tool (or some name like that) and raise the saturation, as the colors will be a bit washed out.

Finally open up brightness & contrast and drop the brightness a tiny bit.

That’s it. Much nicer to stare at and maintains all the features.

slime-enable-concurrent-hints

This is just me dumping something I keep forgetting. When you are live coding you often block the REPL. You can call swank’s handle-requests function to keep the REPL responsive, which works, but the minibuffer likely won’t be showing function signatures (or other hints) any more. To enable them again, add this to your .emacs file and call it using M-x slime-enable-concurrent-hints:

```elisp
(defun slime-enable-concurrent-hints ()
  (interactive)
  (setf slime-inhibit-pipelining nil))
```

Fuck yeah progress!

I’ve been on holiday for a week and it’s been great for productivity; the march to get stuff out of my head before my new job is going rather well.

More docs

First stop: rtg-math. This is my math library, which for the longest time has not been documented. I fixed that this week, so now we have a boatload of reference docs. Once again Staple is hackable enough that I could get it to produce signatures with all the type info too. Blech work, but now I can not think about that for a while. One note is that the ‘region’ portion of the api is undocumented, as that part is still WIP.

SDFs

Next I wanted to get some signed distance functions into Nineveh (which is my library of useful gpu functions). SDFs are just a cool way to draw simple things and so with the help of code from shadertoy (props to iq, thoizer & Maarten to name a few) I made a nice little api for working with these functions.
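For anyone new to SDFs, here is the flavour of it in plain Common Lisp (CPU-side, and not Nineveh’s API; the function names are illustrative). The box formula is the well-known one from iq’s collection of 2D distance functions, and the union of two shapes is just min:

```lisp
(defun circle-sdf (px py radius)
  "Signed distance from (PX, PY) to a circle of RADIUS centred on the origin:
negative inside, zero on the edge, positive outside."
  (- (sqrt (+ (* px px) (* py py))) radius))

(defun box-sdf (px py half-w half-h)
  "Signed distance from (PX, PY) to an axis-aligned box centred on the origin."
  (let* ((dx (- (abs px) half-w))
         (dy (- (abs py) half-h))
         ;; distance from outside: only the positive components count
         (outside (sqrt (+ (expt (max dx 0.0) 2) (expt (max dy 0.0) 2))))
         ;; distance from inside: the nearest edge, as a negative number
         (inside (min (max dx dy) 0.0)))
    (+ outside inside)))

(defun sdf-union (f g)
  "Combine two SDFs (functions of x and y): the nearer surface wins."
  (lambda (x y) (min (funcall f x y) (funcall g x y))))
```

Because every shape is just a function from a point to a distance, combining shapes is function composition, which is exactly what makes first-class functions so handy here.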

One lovely thing was in the function that handles the shadows: it needs to sample the distance function at various points, and rather than hardcoding it we are able to pass it in as a function. As a result we get things like this:

```lisp
(defun-g point-light ((fn (function (:vec2) :float))
                      (p :vec2)
                      (light-position :vec2)
                      (light-color :vec4)
                      (light-range :float)
                      (source-radius :float))
  (shaped-light fn
                #'(length :vec2)
                p
                light-position
                light-color
                light-range
                source-radius))
```

Here we have the point-light function. It takes a function from vec2 to float, plus some properties, and then calls shaped-light, passing in not just that function but also one that describes the distance from the light source (allowing for some funky shaped lights, though this is WIP).

This made me super happy as using first class functions in shaders as a means of composition had been a goal and seeing more validation of this was really fun.
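The ‘pass the distance function in’ idea can be sketched on the CPU too. This is a plain Common Lisp sketch of hard shadows via sphere tracing, not Nineveh’s shadow code: lit-p is a hypothetical name, and it marches from a point towards a light, stepping by whatever distance the supplied SDF reports:

```lisp
(defun lit-p (sdf px py lx ly &key (epsilon 1d-4) (max-steps 128))
  "Sphere-trace from (PX, PY) towards a light at (LX, LY), sampling SDF
(a function of x and y) along the way. Returns T if the light is reached
without hitting a surface, NIL otherwise."
  (let* ((dx (- lx px))
         (dy (- ly py))
         (dist (sqrt (+ (* dx dx) (* dy dy))))
         (ux (/ dx dist))
         (uy (/ dy dist))
         (travelled (* 10 epsilon)))    ; start just off the surface
    (loop repeat max-steps
          while (< travelled dist)
          do (let ((d (funcall sdf
                               (+ px (* ux travelled))
                               (+ py (* uy travelled)))))
               (when (< d epsilon)
                 (return-from lit-p nil)) ; hit something: in shadow
               ;; nothing is closer than D, so it is safe to jump by D
               (incf travelled d)))
    (>= travelled dist)))
```

Because lit-p only ever calls the function it is given, the same march works for any shape, or any union of shapes, with no changes.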

Particle Graphs

One thing with these functions is that visualizing them is a tiny bit tricky: for each point you have a distance, which makes 3 dimensions. We can plot the distance as a color, but that requires remapping negative values, and the human eye isn’t as good at differentiating color as it is position.

To help with this I made a little particle graph system.

define-pgraph is simply a macro that generates a pipeline that uses instancing to plot particles, but it was enough to make visualizing some stuff super simple. As they are currently just additive particles, it is sometimes difficult to judge which are in front. I will probably parameterize that in future.
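The data such a graph plots can be sketched as sampling a function of x and y over a grid, giving one (x, y, value) particle per grid point, so the distance becomes a position rather than a color. sample-grid is a hypothetical helper for illustration, not define-pgraph’s actual code:

```lisp
(defun sample-grid (fn lo hi steps)
  "Evaluate FN (a function of x and y) on a STEPS x STEPS grid spanning
[LO, HI] on both axes. Returns a list of (x y value) triples: one particle
position per grid point, with the value as the third axis."
  (let ((span (- hi lo))
        (denom (max 1 (1- steps))))     ; avoid dividing by zero when STEPS is 1
    (loop for i below steps
          nconc (loop for j below steps
                      for x = (+ lo (* span (/ i denom)))
                      for y = (+ lo (* span (/ j denom)))
                      collect (list x y (funcall fn x y))))))
```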

Live recompiling lambda-pipelines

In making this I realized that there was a way to make CEPL’s lambda pipelines[0] respond to recompilation of the functions they depend on, without any visible api change for users. This was clearly too tempting to pass up, so I got that working.

The approach is as follows (a bit simplified but this is the gist):

```lisp
(let ((state (make-state-struct :pipeline pline   ;; [1]
                                :p-args p-args))) ;; [2]
  (flet ((recompile-func ()
           (setf (state-pipeline state)           ;; [4]
                 (lambda (&rest args)
                   (let ((new-pipeline
                           (recompile-pipeline     ;; [5]
                            (state-p-args state)))) ;; [6]
                     (setf (state-pipeline state) ;; [7]
                           new-pipeline)
                     (apply new-pipeline args))))))  ;; [8]
    (setf (state-recompiler state) #'recompile-func)
    (values
     (lambda (context stream &rest args)          ;; [3]
       (apply (state-pipeline state) context stream args))
     state)))
```

[1] First we take our pipeline function (pline) and box it inside a struct, along with the arguments [2] (p-args) used to make the pipeline. If we hop down to the final lambda [3] we see that this state object is lexically captured, and the boxed function is unboxed and called each time the lambda is called. Cool, so we have boxed a lambda; however, we need to be able to replace that inner pipeline whenever we want.

To do this we have recompile-func. Ostensibly we call this to recompile the inner pipeline function; however, there is a catch: the recompile function could be called from any thread. Threads are not the friend of GL, so instead recompile-func replaces the boxed pipeline [4] with a lambda that will perform the recompile. As it is only valid for the pipeline itself to be called from the correct thread, we can safely assume the recompile will happen there. So the next time the pipeline is called, it’s actually the recompile lambda that runs; this finally does the recompile [5] (using the original args we cached at the start [6]) and then replaces itself in the state object with the new pipeline function [7]. As the user was also expecting rendering to happen, we call the new pipeline as well [8].
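Stripped of GL, the same trick can be played in plain Common Lisp. This sketch is hypothetical (make-live-function, live-state and the build function are made-up names, and it ignores GL and threads entirely), but it shows the deferred, self-replacing recompile: calling the recompiler only swaps in a trampoline, and the real rebuild happens on the next call.

```lisp
(defstruct live-state
  fn          ; what the outer closure currently calls
  args        ; cached arguments used to (re)build FN
  recompiler) ; closure that schedules a rebuild

(defun make-live-function (build-fn &rest args)
  "Return (values callable state). BUILD-FN applied to ARGS produces the
inner function; calling the state's recompiler defers a rebuild until the
next time CALLABLE is invoked."
  (let ((state (make-live-state :fn (apply build-fn args) :args args)))
    (flet ((schedule-recompile ()
             ;; Don't rebuild here (we might be on the wrong thread);
             ;; swap in a one-shot trampoline instead.
             (setf (live-state-fn state)
                   (lambda (&rest call-args)
                     (let ((new (apply build-fn (live-state-args state))))
                       ;; replace ourselves, then do the work the caller asked for
                       (setf (live-state-fn state) new)
                       (apply new call-args))))))
      (setf (live-state-recompiler state) #'schedule-recompile)
      (values (lambda (&rest call-args)
                (apply (live-state-fn state) call-args))
              state))))
```

The outer closure never changes, so anything holding on to it keeps working; only the boxed function inside the struct is swapped.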

There are trade-offs, of course: this indirection will cost some cycles, and as we don’t know how the signature of the inner pipeline function will change, we can’t type it as strictly as we otherwise would. However, we can opt out of this behavior by passing :static to the pipeline-g function when we make a lambda pipeline. This way we get the speed (and no recompilation) when we need it (e.g. when we ship a game) and consistency and livecoding when we want it (e.g. during development).

Needless to say I’m pretty happy with this trade.

vari-describe

vari-describe is a handy function which returns the official GLSL docs if available. This was handy, but I have a growing library of gpu functions that naturally have no GLSL docs... what to do? The answer, of course, is to make sure docstrings defined inside these are stored by Varjo per overload so they can be presented. In the event of no docs being present, we can at least return the signatures of the gpu function, which, as long as the parameter names were good, can be quite helpful in itself.
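A rough sketch of the ‘docstring per overload, signature as fallback’ idea in plain Common Lisp. It is not how Varjo stores things internally; *overloads*, register-overload and describe-gpu-function are made-up names:

```lisp
(defvar *overloads* (make-hash-table :test #'eq)
  "Maps a function name to a list of (params . docstring) entries.")

(defun register-overload (name params &optional docstring)
  "Record one overload of NAME. PARAMS is a list of (param-name type) pairs."
  (push (cons params docstring) (gethash name *overloads*)))

(defun describe-gpu-function (name)
  "Return one string per overload of NAME, in registration order: the
docstring if there is one, otherwise the signature."
  (loop for (params . doc) in (reverse (gethash name *overloads*))
        collect (or doc
                    ;; e.g. "(circle-sdf (p :vec2) (radius :float))"
                    (format nil "(~(~a~{ (~{~a ~s~})~}~))" name params))))
```

Keying the docs by overload rather than by name means a vec2 and a vec3 version can each carry their own explanation.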

This took a few rounds of bodging but works now. A nice thing also is that if you add the following to your .emacs file:

```elisp
(defun slime-vari-describe-symbol (symbol-name)
  "Describe the symbol at point."
  (interactive (list (slime-read-symbol-name "Describe symbol: ")))
  (when (not symbol-name)
    (error "No symbol given"))
  (let ((pkg (slime-current-package)))
    (slime-eval-describe
     `(vari.cl::vari-describe ,symbol-name nil ,pkg))))

(define-key lisp-mode-map (kbd "C-c C-v C-v") 'slime-vari-describe-symbol)
```

Now you can hold control and hit c, v, v (that is, C-c C-v C-v) and you get a buffer with the docs for the function your cursor was over.

This all together made the coding experience significantly nicer so I’m pretty stoked about that.

Tiling Viewport Manager

This is a project I tried a couple of years back and shelved as I got confused. In emacs I have a tonne of files/programs open in their respective buffers and the emacs window can be split into frames[9] in which the buffers are docked; this way of working is great and I wanted the same in GL.

The idea is to have a standalone library that handles the layout of frames in the window, into which ‘targets’ (our version of buffers) are docked. These targets can be just a CEPL viewport, or could be a sampler or an FBO.
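Here is a minimal sketch of the layout half of that idea, assuming a binary tree of splits much like emacs’s own window splitting. None of these names come from the actual library; leaf-node, split-node and layout are hypothetical, and targets are just keywords here:

```lisp
(defstruct leaf-node target)

(defstruct split-node
  direction  ; :horizontal (side by side) or :vertical (stacked)
  ratio      ; share of the space given to CHILD-A, between 0 and 1
  child-a
  child-b)

(defun layout (node x y w h)
  "Return a list of (target x y w h) rectangles, one per leaf under NODE,
tiling the rectangle at (X, Y) of size W x H."
  (etypecase node
    (leaf-node
     (list (list (leaf-node-target node) x y w h)))
    (split-node
     (let ((a (split-node-child-a node))
           (b (split-node-child-b node))
           (r (split-node-ratio node)))
       (ecase (split-node-direction node)
         (:horizontal                   ; side by side
          (let ((wa (round (* w r))))
            (append (layout a x y wa h)
                    (layout b (+ x wa) y (- w wa) h))))
         (:vertical                     ; stacked
          (let ((ha (round (* h r))))
            (append (layout a x y w ha)
                    (layout b x (+ y ha) w (- h ha))))))))))
```

Leaves are where targets would be docked; resizing the window is just calling layout again with the new size.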

As the target is itself just a class, you can subclass it and provide your own implementation. One thing I was testing out was making a simple color-picker using this. Here’s a WIP: the system cursor is missing from it, but the point being ‘picked’ is at the center of the arc.

Back to work

Back to work now, so the lisping will slow down a bit. In general I’m just happy with this trend: it’s going in the right direction, and it feels like this could get even more enjoyable as I build up Nineveh and keep hammering out bugs as people find them.

Peace all, thanks for wading through this!

[0] pipelines in CEPL are usually defined at the top level using defpipeline-g, lambda pipelines give us pipeline-g which uses Common Lisp’s compile function to generate a custom lambda that dispatches the GL draw.

[9] in fact emacs has these terms the other way around, but the way I stated it is usually easier for those more familiar with other tools

Lisping Furiously

This week has been pretty productive.

It started with looking into a user issue where compile times were revoltingly slow. It turns out they were generating very deeply nested chains of ifs, and my code that handled indenting was outstandingly bad: an almost textbook example of how to make slow code. Anyway, after getting that fixed up I spent a while scraping back milliseconds here and there... there’s a lot of bad code in that compiler.

Speaking of that, I knock Varjo a bunch, and I’d never make it like this again, but as a vessel for learning it’s been amazing: for most issues I hear about in compilers now, I can at least hook them onto something in my head. It’s always ‘oh, so that’s how real people do this’, very cool stuff. Also, like PHP, Varjo is unreasonably still providing me with piles of value; what it does is still what I wanted something to do.

After that I was looking into user-extensible sequences and ended up hitting a wall trying to implement something like SBCL’s extensible sequences. I really want map & reduce in Vari, but in static languages this seems to mean some kind of iterator.

It would really help to have something like interfaces but I can’t stand the idea of not being able to say how another user’s type satisfies the interface, so I started looking into adding traits :)
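The property I’m after is the one CLOS generic functions already have on the CPU side: someone else can make their type satisfy the protocol after the fact. A small dynamic-dispatch sketch of that (seq-length, seq-ref, protocol-map and ring-buffer are hypothetical names; Vari would need to resolve this statically, which this does not attempt):

```lisp
;; The "interface": two generic functions anyone may implement.
(defgeneric seq-length (seq)
  (:documentation "Number of elements in SEQ."))
(defgeneric seq-ref (seq index)
  (:documentation "Element of SEQ at INDEX."))

;; Built-in vectors satisfy it...
(defmethod seq-length ((seq vector)) (length seq))
(defmethod seq-ref ((seq vector) index) (aref seq index))

(defun protocol-map (fn seq)
  "Apply FN to each element of any SEQ implementing the protocol,
returning the results in a fresh vector."
  (let* ((n (seq-length seq))
         (result (make-array n)))
    (dotimes (i n result)
      (setf (aref result i) (funcall fn (seq-ref seq i))))))

;; ...and so can a type the protocol's author never saw, added later.
(defstruct ring-buffer
  (items (vector) :type vector)
  (start 0 :type integer))

(defmethod seq-length ((seq ring-buffer))
  (length (ring-buffer-items seq)))
(defmethod seq-ref ((seq ring-buffer) index)
  (let ((items (ring-buffer-items seq)))
    (aref items (mod (+ index (ring-buffer-start seq)) (length items)))))
```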

I was able to hack in the basics and implement a very shoddy map and could get stuff like this:

```
TESTS> (glsl-code
        (compile-vert () :410 nil
          (let* ((a (vector 1.0 2.0 3.0 4.0))
                 (b (mapseq #'(sin :float) a)))
            (vec4 (aref b 0)))))
"// vertex-stage
#version 410

void main()
{
    float[4] A = float[4](1.0f, 2.0f, 3.0f, 4.0f);
    float[4] RESULT = float[4](0.0f, 0.0f, 0.0f, 0.0f);
    int LIMIT = A.length();
    for (int STATE = 0; (STATE < LIMIT); STATE = (STATE++))
    {
        float ELEM = A[STATE];
        RESULT[STATE] = sin(ELEM);
    }
    float[4] B = RESULT;
    vec4 g_GEXPR0_767 = vec4(B[0]);
    gl_Position = g_GEXPR0_767;
    return;
}
```

But the way array types are handled in Varjo right now is pretty hard-coded: when I had it making separate functions for the for loop, each function was specific to the size of the array. So 1 function for int[4], 1 function for int[100], etc... not great.

So I put that down for a little bit and, after working on a bunch of issues resulting in unnecessary (but valid) code in the GLSL, I started looking at documentation.

This one is hard. People want docs, but they also don’t want the api to break. However, the project is beta, and documenting things reveals bugs & mistakes. So then you have to either document something you hate and know you will change tomorrow, or change it and document once. For a project that is purely being kept alive by my own level of satisfaction it has to be the second, so I got coding again.

Luckily, generating the reference docs was made much easier by Staple, which has a fantastically extensible api. The fact I was able to hack it into doing what I wanted (albeit with some truly dreadful code) was a dream.

So now we have these:

- Reference Docs for the Vari language

- Reference Docs for the Varjo compiler

- The start of a user guide for Varjo

Bloody exhausting.

The Vari docs are a mix of GLSL wiki text with Vari overload information & handwritten doc strings for the stuff from the Common Lisp portion of the api.

That’s all for now. I’m on holiday for a week and I’m going to try to get as much lisp stuff out of my head as possible. By the time I start my new job, I want my lisp projects to be in a place where I’m focusing on fixes & enhancements rather than features.

Peace

It's been a long time

.. how have you been?

- GLaDOS

I fell out of the habit of writing these over Christmas, so it’s really time for me to start this up again.

Currently I am reading ____ in preparation for my new job but I have also had some time for lisp.

On the lisp side I have been giving a bit more time to Varjo, as there are a lot of completeness & stability things I need to work on over there. I’ve hacked in a few extra sanity checks for qualifiers; however, the checks are scattered around and it feels sucky, so I really need to do a cleanup pass.

In good news, I’ve also made a sweep through the CL spec looking for things that are worth adding to Vari. This resulted in this list, and I’ve been able to add a whole bunch of things. This really helps Vari feel closer to Common Lisp.

One interesting issue, though, is around clashes in the spec; let’s take round for example. In CL, round rounds to the nearest integer, with exact halves going to the nearest even integer; in GLSL, the direction exact halves round in is chosen by the implementation. GLSL does provide roundEven, which more closely matches CL’s behavior. So the conundrum is: do we define Vari’s round using roundEven or round? One way we may trip up CL programmers; the other way we trip up GLSL programmers, and also anyone porting code from GLSL to Vari without being aware of those rules. We can of course provide a fast-round function, but this doesn’t necessarily help with the issue of discoverability or ‘least surprise’.
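For reference, here is the CL behaviour in question, plus one possible behaviour for a cheaper function. round-half-up is a hypothetical name, and GLSL’s round makes no promise about which way halves go, so it only illustrates one candidate:

```lisp
;; CL's ROUND sends exact halves to the nearest even integer.
(round 2.5)   ; => 2, 0.5
(round 3.5)   ; => 4, -0.5
(round -2.5)  ; => -2, -0.5

(defun round-half-up (x)
  "Round X to the nearest integer, sending exact halves towards +infinity."
  (values (floor (+ x 1/2))))

(round-half-up 2.5)  ; => 3
(round-half-up 3.5)  ; => 4
```

The two only disagree on exact halves, which is precisely the case where code ported between CL and GLSL would quietly change behaviour.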

I’m leaning towards keeping the GLSL meaning, however, simply because we are already not writing true CL, and our dialect already makes sacrifices in the name of performance. Maybe this is ok.

That’s all I’ve got for now, seeya all next week.

p.s. No stream this week as ferris is giving a rust talk here in Oslo.

p.p.s I also feel the ‘I haven’t been making lil-bits-of-lisp videos in ages’ guilt so I need to get back to that soon.

Raining Features

Every now and again people ask if CEPL will support compute, and I’ve always said that it would be years before that happened. The reason is that, because compute is not part of GL’s draw pipeline, I thought there would be a tonne of compute-specific changes that would need to be made. Turns out I was wrong.

On Wednesday I was triaging some tickets in the CEPL repo and saw the one for compute. I was about to ignore it when I thought that it would be nice to read through the GL wiki to see just how horrendous a job it would be. 5 minutes later I was rather disconcerted, as it looked easy... temptingly easy.

‘Luckily’, however, I really want SSBOs for writing out of compute, and that will be hard... looks at GL wiki again... Shit.

Ok, so SSBOs turned out to be a much smaller feature than expected as well. So I gave myself 24 hours, from late Friday to late Saturday, to implement as many new features (sanely) as I could.

Here are the results:

Sync Objects

GL only has one of these, the fence. CEPL now has support for this too.

You make a fence with make-gpu-fence:

```lisp
(setf some-fence (make-gpu-fence))
```

and then you can wait on the fence

```lisp
(wait-on-gpu-fence some-fence)
```

optionally with a timeout

```lisp
(wait-on-gpu-fence some-fence 10000)
```

also optionally flushing

```lisp
(wait-on-gpu-fence some-fence 10000 t)
```

Or you can simply check if the fence has signalled:

```lisp
(gpu-fence-signalled-p some-fence)
```

Query Objects

GL has a range of queries you can use; we have exposed them as structs you can create as follows:

```lisp
(make-timestamp-query)
(make-samples-passed-query)
(make-any-samples-passed-query)
(make-any-samples-passed-conservative-query)
(make-primitives-generated-query)
(make-transform-feedback-primitives-written-query)
(make-time-elapsed-query)
```

To begin querying into the object you need to make the query active. This is done with with-gpu-query-bound:

```lisp
(with-gpu-query-bound (some-query)
  ..)
```

After the scope of with-gpu-query-bound ends, the message to stop querying is in the GPU’s queue; however, the results are not available immediately. To check if the results are ready you can use gpu-query-result-available-p, or you can use some of the options to pull-gpu-query-result. Let’s look at that function now.

To get the results to lisp we use pull-gpu-query-result. When called with just a query object it will block until the results are ready:

```lisp
(pull-gpu-query-result some-query)
```

We can also say not to wait, and CEPL will try to pull the results immediately; if they are not ready it will return nil as the second return value:

```lisp
(pull-gpu-query-result some-query nil) ;; the nil here means don't wait
```

Compute

To use compute you simply make a gpu function which takes no non-uniform arguments and always returns (values) (a void function in C nomenclature) and then make a gpu pipeline that only uses that function.

```lisp
(defstruct-g bah
  (data (:int 100)))

(defun-g yay-compute (&uniform (woop bah :ssbo))
  (declare (local-size :x 1 :y 1 :z 1))
  (setf (aref (bah-data woop) (int (x gl-work-group-id)))
        (int (x gl-work-group-id)))
  (values))

(defpipeline-g test-compute ()
  :compute yay-compute)
```

You can then map-g over this like any other pipeline...

```lisp
(map-g #'test-compute (make-compute-space 10)
       :woop *ssbo*)
```

...with one little difference. Instead of taking a stream of vertices, we now take a compute space. This specifies the number of ‘groups’ that will be working on the problem. The value has up to 3 dimensions, so (make-compute-space 10 10 10) is valid.

We also soften the requirements around gpu-function names for the compute stage. Usually you have to specify the full name of a gpu function due to possible overloading, e.g. (saturate :vec3); however, as compute shaders can only take uniforms, and we don’t offer overloading based on uniforms, there can only be one with a given name. Because of this we allow yay-compute instead of (yay-compute).

SSBOs

The eagle-eyed among you will have noticed the :ssbo qualifier in the woop uniform argument. SSBOs give you storage you can write into from a compute shader. Their api is almost identical to that of UBOs, so I copy-pasted that code in CEPL and got SSBOs working. This code will most likely be unified again once I have fixed some details with binding; however, for now we have something that works.

This means we can take our struct definition from before:

```lisp
(defstruct-g bah
  (data (:int 100)))
```

and make a gpu-array

```lisp
(setf *data* (make-gpu-array nil :dimensions 1 :element-type 'bah))
```

and then make an SSBO from that

```lisp
(setf *ssbo* (make-ssbo *data*))
```

And that’s it, ready to pass to our compute shader.

Phew.

Yeah. So all of that was awesome; I’m really glad to have a feature land that I wasn’t expecting to add for a couple more years. Of course there are bugs. The most immediately obvious is that when I tried the example above I was getting odd gaps in the data in my SSBO:

```
TEST> (pull-g *data*)
(((0 0 0 0 1 0 0 0 2 0 0 0 3 0 0 0 4 0 0 0 5 0 0 0 6
   0 0 0 7 0 0 0 8 0 0 0 9 0 0 0 0 0 0 0 0 0 0 0 0 0
   0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0
   0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0)))
```

The reason for this is that I didn’t understand layout in GL properly. This also means CEPL doesn’t handle it properly and that I have a bug :) [this bug](https://github.com/cbaggers/cepl/issues/193).
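The pattern in that output is what you would get if the GPU wrote the int array with a 16-byte stride (std140 rounds array strides up to the size of a vec4) while the data was read back as tightly packed ints (which is how std430 lays them out). A small Common Lisp sketch that reproduces the pattern; the function names are made up for illustration, and it only models int arrays:

```lisp
(defun int-array-stride (layout)
  "Byte stride between consecutive elements of an int[] under LAYOUT."
  (ecase layout
    (:std140 16)   ; array strides are rounded up to the size of a vec4
    (:std430 4)))  ; scalar arrays are tightly packed

(defun read-back-as-tight-ints (ints layout)
  "Lay INTS out as an int[] under LAYOUT, then return that memory read
back as a tightly packed int[] (4 bytes per element)."
  (let* ((slots-per-element (/ (int-array-stride layout) 4))
         (memory (make-array (* slots-per-element (length ints))
                             :initial-element 0)))
    (loop for v in ints
          for i from 0
          do (setf (aref memory (* i slots-per-element)) v))
    (coerce memory 'list)))
```

With std140 every value is followed by three slots of padding, which is exactly the 0 0 0 gap between each number above.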

Fixing this is interesting as it means that, unless we force you to make a different type for each layout e.g.

```lisp
(defstruct-g bah140 (:layout :std140)
  (data (:int 100)))

(defstruct-g bah430 (:layout :std430)
  (data (:int 100)))
```

...which feels ugly, we would need to support multiple layouts for each type, which means the accessor functions in lisp would need to switch on the layout dynamically. That sounds slow to me when trying to process a load of foreign data quickly.

I also have a nagging feeling that the current way we marshal struct elements from c-arrays is not ideal.

These things together make me think I will be making some very breaking changes to CEPL’s data marshaling for the start of 2018.

This stuff needs to be done so it’s better we rip the band-aid off whilst we have very few known users.

News on that as it progresses.

Yay

All in all though, a great 24 hours. I’m currently learning how to extract std140 layout info from varjo types so that will likely be what I work on next Saturday.

Peace

Little bits of progress

Hi again! This last week has gone pretty well. Shared contexts have landed in CEPL master. The only host that supports them right now is SDL2, although I want to make a PR to CEPL.GLFW as it should be easy to support there also. Glop is proving a little harder as we really need to update the OSX support; I started poking at it but it’s gonna take a while and I’ve got a bunch of stuff on my plate right now.

I started looking at multi-draw & indirect rendering in GL as I think these are the features I want to implement next. However, I immediately ran into existing CEPL bugs in defstruct, so I think the next few weeks are going to be spent cleaning that up and fixing the struct-related issues from github.

AAAAges back I promised beginner tutorials for Common Lisp and I totally failed to deliver. I had been hoping the Atom support would get good enough that we could use that in the videos. Alas, that project seems to have slowed down recently[0] and my guilt finally reached the point that I had to put out something. To that end I have started making a whole bunch of little videos on random bits of Common Lisp. Although it doesn’t achieve what I’d really like to do with proper tutorials, I hope it will help enough people on their journey into the language.

That’s all for now, Peace.

[0] although I’m still praying it gets finished

Transform Feedback

Ah it feels good to have some meat for this week’s writeup. In short transform feedback has landed in CEPL and will ship in the next[0] quicklisp release.

What is it?

Transform feedback is a feature that allows you to write data out from one of the vertex stages into a VBO as well as passing it on to the next stage. It also opens up the possibility of not having a fragment shader at all and just using your vertex shader as a GPU-based map function. For a good example of its use, check out this great tutorial by the little grasshopper.

How is it exposed in CEPL?

In your gpu-function you simply add an additional qualifier to one or more of your outputs, like this:

```lisp
(defun-g mtri-vert ((position :vec4) &uniform (pos :vec2))
  (values (:feedback (+ position (v! pos 0 0)))
          (v! 0.1 0 1)))
```

Here we see that the gl_Position from this stage will be captured. Now for the CPU side: first we make a gpu-array to write the data into.

```lisp
(setf *feedback-vec4*
      (make-gpu-array nil :element-type :vec4 :dimensions 100))
```

And then we make a transform feedback stream and attach our array. (Transform feedback streams can have multiple arrays attached, as we will see soon.)

```lisp
(setf *tfs*
      (make-transform-feedback-stream *feedback-vec4*))
```

And finally we can use it. Assuming the gpu function above was used as the vertex stage in a pipeline called prog-1, the code will look like this:

```lisp
(with-transform-feedback (*tfs*)
  (map-g #'prog-1 *vertex-stream* :pos (v! -0.1 0)))
```

And that’s it! Now the first result from mtri-vert will be written into the gpu-array in *feedback-vec4*, and you can pull back the values like this:

```lisp
(pull-g *feedback-vec4*)
```

If you add the feedback modifier to multiple outputs then they will all be interleaved into the gpu-array. However, you might want to write them into separate arrays, which can be done by providing a ‘group number’ to the :feedback qualifier.
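As I understand GL’s interleaved mode, the captured outputs are written back to back for each vertex, so a vec4 followed by a vec3 gives a 7-float record. If you pull such an array back as raw floats, splitting it is simple; a sketch (deinterleave is a hypothetical helper, not part of CEPL):

```lisp
(defun deinterleave (floats widths)
  "FLOATS is a vector of interleaved records; WIDTHS lists the component
count of each attribute in a record, e.g. (4 3) for a vec4 then a vec3.
Returns one list per attribute, each holding that attribute's value for
every record as a list of components."
  (let* ((stride (reduce #'+ widths))
         (record-count (floor (length floats) stride))
         (offset 0))                    ; where the current attribute starts
    (loop for w in widths
          collect (loop for r below record-count
                        for base = (+ (* r stride) offset)
                        collect (coerce (subseq floats base (+ base w))
                                        'list))
          do (incf offset w))))
```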

```lisp
(defun-g mtri-vert ((position :vec4) &uniform (pos :vec2))
  (values ((:feedback 1) (+ position (v! pos 0 0)))
          ((:feedback 0) (v! 0.1 0 1))))
```

Making another gpu-array:

```lisp
(setf *feedback-vec3*
      (make-gpu-array nil :element-type :vec3 :dimensions 10))
```

and binding both arrays to a transform feedback stream:

```lisp
(setf *tfs*
      (make-transform-feedback-stream *feedback-vec3*
                                      *feedback-vec4*))
```

You can also use the same pipeline multiple times within the scope of with-transform-feedback:

```lisp
(with-transform-feedback (*tfs*)
  (map-g #'prog-1 *vertex-stream* :pos (v! -0.1 0))
  (map-g #'prog-1 *vertex-stream* :pos (v! 0.3 0.28)))
```

CEPL is pretty good at catching and explaining cases where GL will throw an error, such as not having enough VBOs (gpu-arrays) bound for the number of feedback targets, or 2 different pipelines being called within the scope of with-transform-feedback.

More stuff

During this I ran into some aggravating issues relating to transform feedback and recompilation of pipelines; it was annoying to the point that I rewrote a lot of the code behind the defpipeline-g macro. The short version is that the code emitted is no longer a top-level closure, and CEPL now has ways of avoiding recompilation when it can be proved that the gpu-functions in use haven’t changed.

I also found out that in some cases a defvar with type declarations is faster than values captured by a top-level closure, even when those values are typed. See here for a test you can run on your machine to see if you get the same kind of results.[1]
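For anyone wanting to poke at this themselves, here is a rough sketch of the three approaches mentioned in this post and in footnote [1]: a typed closure variable, a typed defvar, and a struct packed into a single defvar. It is not the test linked above, and the names (bump-closure, *frame-state*, seconds-for-calls and so on) are made up for illustration.

```lisp
;;; Three ways of holding per-frame state. A rough sketch, not the test
;;; referred to in the post; all names here are hypothetical.

;; 1. A typed value captured by a top-level closure.
(let ((counter 0))
  (declare (type fixnum counter))
  (defun bump-closure ()
    (setf counter (logand (1+ counter) most-positive-fixnum))))

;; 2. A defvar with a global type declaration.
(defvar *counter* 0)
(declaim (type fixnum *counter*))
(defun bump-defvar ()
  (setf *counter* (logand (1+ *counter*) most-positive-fixnum)))

;; 3. Data packed into a struct held in a single defvar.
(defstruct frame-state (counter 0 :type fixnum))
(defvar *frame-state* (make-frame-state))
(declaim (type frame-state *frame-state*))
(defun bump-struct ()
  (setf (frame-state-counter *frame-state*)
        (logand (1+ (frame-state-counter *frame-state*))
                most-positive-fixnum)))

(defun seconds-for-calls (fn n)
  "Wall-clock seconds spent calling FN N times."
  (let ((start (get-internal-real-time)))
    (loop repeat n do (funcall fn))
    (float (/ (- (get-internal-real-time) start)
              internal-time-units-per-second))))
```

You would then compare something like `(seconds-for-calls #'bump-closure 5000000)` against the other two. The funcall overhead is the same for all three, so only the relative numbers mean anything, and as the footnote says they may well differ between machines.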

Shipping it

Like I said, this code is in the branch that will be picked up by the next Quicklisp release. This feature will certainly have its corner cases and bugs, but I’m happy to see it out and to have one less missing feature in CEPL.

Future work on transform feedback includes using the transform feedback objects introduced in GL 4 to allow more advanced interleaving options, and also allowing with-transform-feedback forms to be nested.

Next on the list for this month is shared contexts. More on that next week!

Ciao

[0] Late November or early December.

[1] Testing on my Mac has given different results: using a defvar and packing the data in a struct is faster than multiple defvars, but slower than the top-level closure. Luckily the difference is still on the order of microseconds a frame (assuming 5000 calls per frame), but it is measurable. It’s interesting to see, as packing into a defvar was faster on my Linux desktop ¯\_(ツ)_/¯ I’ll show more data when I have it.

Multiple Contexts are ALIVE..kinda

Allo again!

It’s now November, which means it’s NaNoWriMo time again. Each year I like to participate in spirit by picking a couple of features for projects I’m working on and hammering them out. This is well timed, as I haven’t had much time for adding new things to CEPL recently.

The features I want to have by the end of the month are decent multi-context support and single stage pipelines.

Multi-Context

We have already talked about this stuff, but I have bitten the bullet and got coding at last. I have support for non-shared contexts now but not shared ones yet. The various hosts have mildly annoyingly different approaches to abstracting this, so I’m working on finding the sweet spot right now.

Regarding the defpipeline threading issues from last week, in the end I opted for a small array of program-ids per pipeline, indexed by the cepl-context id. I can make this fast enough and the cost is constant, so that feels like the right call for now. CEPL & Varjo were never written with thread safety in mind, so it’s going to be a while before I can do a real review and work out what the approach should be. For now it’s very much a ‘throw some mutexes in and hope’ situation, but it’s fine…we’ll get there :p
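The shape of that per-context lookup is roughly as follows. This is a hypothetical model in plain Common Lisp, not CEPL's internals: the struct, the `*max-contexts*` bound and the caller-supplied `build-program` function are assumptions for illustration, and, like the rest of the code at this stage, it takes no locks.

```lisp
;;; Hypothetical model of "a small array of program-ids per pipeline,
;;; indexed by the cepl-context id". Names are illustrative only.

(defparameter *max-contexts* 8
  "Assumed upper bound on the number of cepl-contexts.")

(defstruct (pipeline-programs
            (:constructor make-pipeline-programs (name)))
  name
  ;; One GL program id per context; 0 means "not built for that context yet".
  (ids (make-array *max-contexts*
                   :element-type '(unsigned-byte 32)
                   :initial-element 0)
       :type (simple-array (unsigned-byte 32) (*))))

(defun program-id-for (pipeline context-id build-program)
  "Constant-time lookup of PIPELINE's program for CONTEXT-ID. On first use
in a context, BUILD-PROGRAM (a caller-supplied function) makes the id.
No locking is done here."
  (let* ((ids (pipeline-programs-ids pipeline))
         (id (aref ids context-id)))
    (if (zerop id)
        (setf (aref ids context-id)
              (funcall build-program (pipeline-programs-name pipeline)
                       context-id))
        id)))
```

The appeal is that the hot path is a single `aref` on a small specialized array, so the cost stays constant no matter how many contexts exist; the price is a fixed upper bound on the number of contexts.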

One side note is that all lambda-pipelines in CEPL are tied to their thread, so they don’t need the indirection mentioned above :)

Single Stage Pipelines

This is going to be fun. Up until now, if you wanted to render a fullscreen quad you needed to:

- make a gpu-array holding the quad data

- make a stream for that gpu-array

- make a pipeline with:

  - a vertex shader to put the points in clip space

  - a fragment shader

The annoying thing is that the fragment shader was the only bit you really cared about. Luckily, it turns out there is a way to do this with a geometry shader and no gpu-array, and it should be portable to all the GL versions CEPL supports. So I’m going to prototype this on the stream on Wednesday and, assuming it works, make it into a CEPL feature soon.

That covers pipelines with only a fragment shader, of course, but what about ones with only a vertex stage? Well, transform feedback buffers are something I’ve wanted for a while, so I’m going to look into supporting those. This is cool because you can then use the vertex stage for data processing, kinda like a big pmap. This could be handy when your data is already in a gpu-array.

Future things

With transform feedback support, a few possibilities open up. The first is that with some dark magic we could run a number of variants of the pipeline, using transform feedback to ‘log’ values from the shaders. This gives us debugging opportunities that weren’t there before.

Another tempting idea (which is also easier) is to allow users to call a gpu-function directly. This would:

- make a temporary pipeline

- make a temporary gpu-array and stream with just the arguments given (1 element)

- run the code on the gpu capturing the result with a temporary transform-feedback buffer or fbo

- convert the values to lisp values

- dispose of all the temporaries
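Those steps can be outlined as a single function. This is a hypothetical sketch, since none of it exists in CEPL yet: each step is a function passed in by the caller, and every name here is made up. The interesting design choice is wrapping the work in `unwind-protect`, so the temporaries are disposed even if compilation or the GPU run signals an error at the repl.

```lisp
;;; Hypothetical outline of calling a gpu-function once from the repl.
;;; Every step is a caller-supplied function; none of these names are CEPL's.

(defun call-gpu-function-once (args &key make-pipeline make-input
                                         make-output run read-back dispose)
  "Run one call on the GPU and return the result as Lisp values.
UNWIND-PROTECT ensures every temporary is disposed, even on error."
  (let ((temporaries '()))
    (flet ((track (thing)
             (push thing temporaries)
             thing))
      (unwind-protect
           ;; LET evaluates these in order, so anything created before a
           ;; failing step is already tracked and will still be disposed.
           (let ((pipeline (track (funcall make-pipeline)))
                 (input (track (funcall make-input args)))
                 (output (track (funcall make-output))))
             (funcall run pipeline input output)
             (funcall read-back output))
        (mapc dispose temporaries)))))
```

With real implementations, make-output would create the temporary transform feedback buffer (or fbo) and read-back would do the equivalent of a pull-g on it.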

The effect is to let people run gpu code from the repl for rapid prototyping. It is obviously useless in production because of all the overhead, but being able to iterate in the repl like this could be really great.

That’ll do pig

Right, time to go.

Seeya soon