From the Burrow

Balls!

It’s been a good week for progress.

GL State Cache

The first big thing I achieved was to add GL state caching to CEPL. This has fixed some bugs, avoided some unnecessary state changes and generally made prototyping a bit easier.

For those not familiar with OpenGL I’ll try to explain the whats and whys now. When using OpenGL you are basically working with a crazy vending machine for graphics: you shove what you want to draw in one hole, and then flick a bunch of switches and hit go. The switches control ‘how’ it is going to draw. Once you have ‘hit go’ it will start rumbling and very soon will have a result. Whilst it is working you can prepare (and start) the next job, setting things up and flicking switches; GL keeps a queue of what it has to do.

The ‘vending machine’ in the above analogy is more formally called the ‘GL state machine’. The state machine has some ‘interesting’ aspects such as:

- setting some properties on the state machine causes it to have to finish what it was doing before they can be applied; this blocks all queued jobs until that is done. This is really bad for performance

- querying the properties of the machine is slow. Which means that although changing things can be fast, looking at what you are changing isn’t :p

So we want to avoid changing things on the state machine when we can help it, but we can’t afford to look at the machine to see if we can avoid changing things.. the solution here is a cache. In the cache we record what we think/know the state of GL is, then when we want to change things we can look at the cache to see if we already have the correct value, and if so, we can avoid requesting the change.

It took a surprisingly long time to get a solution I was happy with in CEPL as I was trying to find a balance between speed and usability. A cache like this can be super useful when debugging as you can look at it and check your assumptions against the actual state of GL, however working with the raw GL object IDs is (generally) faster. This is also an area where I found the API shaped by the fact I’m in a dynamic programming language; it’s taken some time to find something I’m happy(ish) with.
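To make the caching idea concrete, here is a minimal sketch in plain Common Lisp. It is not CEPL’s actual cache: `*state-cache*`, `*gl-calls*`, `really-set-gl-state` and `set-state` are all made-up names, and the ‘GL call’ is just a counter so the example runs without a GL context.

```lisp
;; Hypothetical sketch of a state cache; none of these names come from CEPL.
(defvar *state-cache* (make-hash-table :test #'eq)
  "What we believe the current GL state to be, keyed by property name.")

(defvar *gl-calls* 0
  "Counts how many (pretend) state changes were actually sent to GL.")

(defun really-set-gl-state (property value)
  "Stand-in for a real GL call such as enabling depth testing."
  (declare (ignore property value))
  (incf *gl-calls*))

(defun set-state (property value)
  "Only touch GL when the cached value differs from the requested one."
  (multiple-value-bind (cached found) (gethash property *state-cache*)
    (unless (and found (equal cached value))
      (really-set-gl-state property value)
      (setf (gethash property *state-cache*) value)))
  value)

(set-state :depth-test t)   ; cache miss, GL is called
(set-state :depth-test t)   ; cache hit, nothing is sent to GL
(set-state :depth-test nil) ; value changed, GL is called again
```

After those three calls `*gl-calls*` is 2. The catch with any cache like this is that it only stays honest if every state change goes through it.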

IBL [0]

After that I went back to trying to implement PBR rendering. I had previously (with help) managed to get point lights working, however I really want to get image based lighting (IBL) in the mix. Now I’ve tried this a few times and have really struggled, partly because the resources available never have a complete example, they are almost always by people with enough rendering experience that they can assume some things to be obvious.. it is almost never obvious to me :p.

This time I went back and attacked this tutorial. Long story short: I don’t believe that the way he is convolving his env maps in the provided code is the way he is doing it in his renders. I made sure that my compiler was producing the EXACT same code as he had and I got completely useless results.

In a fit of desperation I went back onto github searching for any projects that have something working. Many use frameworks like 3js so while they are awesome, they were of limited help. However I did find the following:

- A 3js project where they have a tonne of the different BRDFs implemented with consistent signatures. This will be a great resource for future learning.

- A C++ project which has (what looks like) a full implementation of PBR with IBL, using pretty raw DirectX and DXUT (a DirectX equivalent of GLUT). This thing seems to be a perfect resource for me, it’s not an engine, just a project by some person looking to understand a single implementation of this. I’m stoked to be able to read this.

I immediately cloned that C++ project and started reading. They are using the same papers as I have been trying to learn from, however they clearly understood enough to fill in the gaps and (more importantly) pick a selection of approaches that worked together.

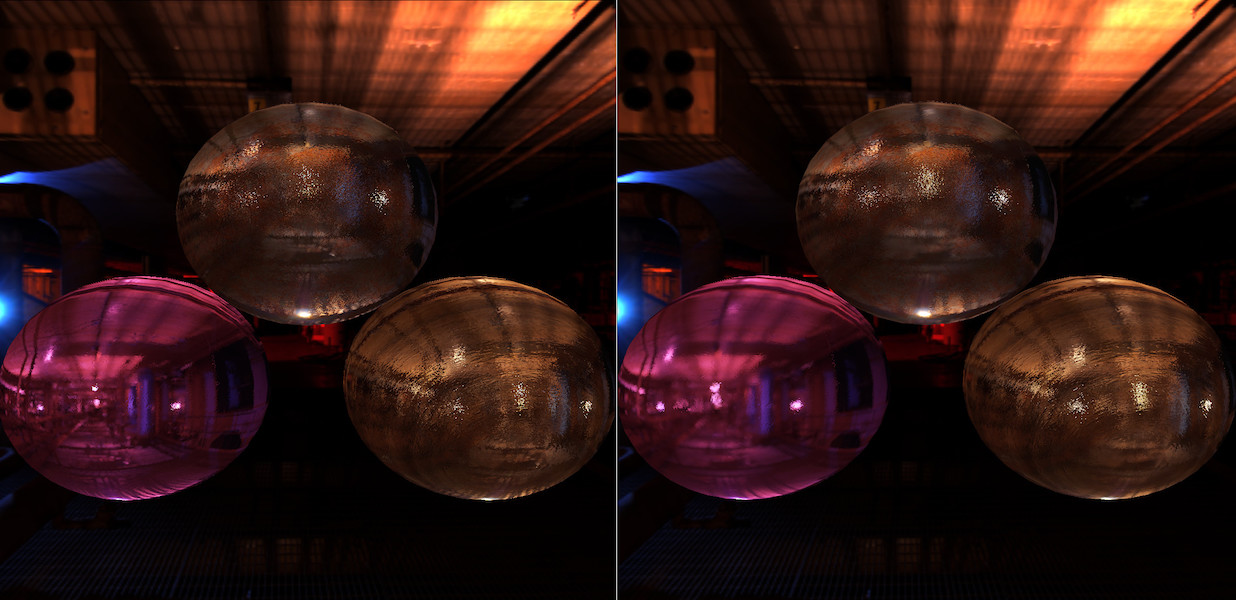

I rewrote my env map convolve shader based on what they had AND IT WORKED! The image above shows the same balls taking light from env maps made for two slightly different roughness values. It’s not much, but you can see that the right version has a slightly blurrier ‘reflection’.

I’m now confident that I will be able to get the rest working. More on this in the coming weeks.

Other Stuff

Around this work a bunch of little things got done including:

In CEPL

- Fix a bunch of GL state bugs using the new cache feature

- Start migrating ugly global CEPL state to the cache

- Fix mipmap size bug in texture code

In Varjo

- Generate easier to read GLSL for symbols like π, φ, θ, etc

- Identify and write tests for a bug in function deduplication (not yet fixed). Basically the way I handle global user defined functions is crappy & I need to fix it.

In Nineveh (A library for useful GPU/Rendering functions)

- Add a function that takes a cubemap texture and makes 1 FBO for each mipmap level where the attachments of the fbo are the cube faces at that mipmap level.

That’s all folks

That’s this week done, I really hope I have something visual to show off next week.

Until then, Peace.

[0] Sorry but I won’t try to explain this section simply as I don’t understand it well enough yet.

I'm late!

Woops, I’m late posting this week. I haven’t got too much to show however there has been some progress.

The big piece of work was on the compiler. Let’s say we have this bit of code:

(let ((fn #'sin))
  (funcall fn 10))

Which says:

line 1: Let there be a variable named fn which is bound to the function ‘sin’

line 2: Take the value bound to ‘fn’ and call it as a function with the argument ‘10’

Now as of last week this wouldn’t work in my compiler. The reason was that functions in GLSL can have overloads, for example pow can take a float, vec2, vec3 or vec4. So when I said #'sin (get the function named sin) which sin overload was I talking about?

This meant I had to write this:

(let ((fn #'(sin :float)))
  (funcall fn 10))

Where #'(sin :float) reads as ‘the function named sin that takes one argument of type float’.

This worked but felt clumsy so my goal was to make the first code example work.

The way I went about it was to say:

When we compile a form like #'xyz where there are no argument types specified, work out all the functions named ‘xyz’ that can be called from that position in the code. Pass this set of functions around with the variable binding information in the compiler and then, when the user writes a funcall, look at the types of the arguments and work out which overload to use.

The nice thing was that the code to pick the most appropriate function for some args from a set of functions already existed, naturally as we need to do this for regular function calls. So that code was generalized a bit and recycled.
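A toy version of that idea, in plain Common Lisp rather than the compiler’s real data structures. Everything here is an assumption made for illustration: `*known-functions*`, `functions-named` and `pick-overload` are invented names, and the matching is exact-type only, whereas a real compiler would also need to rank overloads that match via casts.

```lisp
;; Hypothetical sketch: an overload is a list of (name arg-type...).
(defparameter *known-functions*
  '((sin :float) (sin :vec2) (sin :vec3) (sin :vec4)
    (pow :float :float) (pow :vec2 :vec2))
  "Each entry is a function name followed by its argument types.")

(defun functions-named (name)
  "What #'name stands for: every overload with that name."
  (remove-if-not (lambda (entry) (eq (first entry) name))
                 *known-functions*))

(defun pick-overload (candidates arg-types)
  "What happens at the funcall: pick the candidate whose signature matches."
  (find arg-types candidates :key #'rest :test #'equal))

(let ((fn-set (functions-named 'sin)))  ; like (let ((fn #'sin)) ...)
  (pick-overload fn-set '(:float)))     ; like (funcall fn <some float>)
;; => (SIN :FLOAT)
```

The point is only that the choice is deferred: the binding carries the whole set, and the argument types at the call decide which member is used.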

The rest took some time as I kept bumping into details that made this more complicated than I had hoped, mostly because my compiler was (and is) written on the fly without any real technical knowledge. I’ve certainly learned stuff that I want to apply to the compiler, but some of these things require large changes to the codebase and I’m not really ready to get into that yet. I have some time constraints as I want to give a talk on this in the near future so I really just need it to work well enough for that.

Other than this I speed-watched a couple of intro unity courses and started reading their docs. The courses were ok’ish. I got what I needed from them but I’m hoping that wasn’t meant to be good code style as it felt very messy. Time will tell.

That’s all for today, Ciao

Baked

This week I got first class functions into CEPL. It’s not release quality yet but it seems to work which is ace.

What I can now do is define a pipeline that takes a function as a uniform. It compiles this to typecheck it but GLSL doesn’t support first-class functions so the pipeline is marked as partial.

So if I have a gpu function I’m going to use as my fragment shader in some particle system:

(defun-g update-particle-velocities ((tex-coord :vec2)
                                     &uniform (positions :sampler-2d)
                                     (velocities :sampler-2d)
                                     (animator (function (:vec4 :vec4) :vec4)))
  (let* ((position (texture positions tex-coord))
         (velocity (texture velocities tex-coord)))
    ;; calc the new velocity from the sampled vec4s (not the samplers)
    (funcall animator position velocity)))

I can make a partial pipeline:

(def-g-> update-velocities ()
  :vertex (full-screen-quad :vec4)
  :fragment (update-particle-velocities :vec2))

If I try and run this CEPL will complain that this is partial. Now let’s make a dumb function that slowly pulls all particles to the origin:

(defun-g origin-sink ((pos :vec4) (vel :vec4))
  (let ((dif (- pos)))
    (+ vel (* (normalize dif) (sqrt (length dif)) 0.001))))

And now we can complete the pipeline:

(defvar new-pipeline (bake 'update-velocities :animator '(origin-sink :vec4 :vec4)))

I’m not happy with the syntax yet but the effect is to create a new pipeline with the uniform animator permanently set to our origin-sink function.

We can then map over that new pipeline like any other:

(map-g new-pipeline quad-verts :positions pos-sampler :velocities vel-sampler)

The advantage of this is that I can make a bunch of different ‘animator’ functions and make pipelines that use them just with bake. It still feels odd to me though so it’ll take some time to get used to and cleaned up.

One thing that is interesting me recently is that livecoding almost encourages more ‘static’ code. Composition is great for programming but to a degree makes things opaque to the experimenter. If you call a function that returns a function you are sitting in the repl with this function with very little insight into what it is/does. You have to maintain that mapping yourself. It may have something lexically captured, it may not.. I’m not sure where I’m going with this, except that maybe it would be cool to be able to get the code that made the lambda while at the repl so you can get some context.
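As a rough illustration of that last wish, here is one way it could be faked in portable Common Lisp. This is only a sketch: `lambda-with-source`, `source-of` and `*lambda-sources*` are invented names, and the table holds on to every function forever, which a real tool would want to avoid.

```lisp
;; Hypothetical sketch: remember the code each lambda was made from.
(defvar *lambda-sources* (make-hash-table :test #'eq)
  "Maps function objects to the lambda form that created them.")

(defmacro lambda-with-source (args &body body)
  "Like LAMBDA, but records the source form against the function object."
  (let ((fn (gensym "FN")))
    `(let ((,fn (lambda ,args ,@body)))
       (setf (gethash ,fn *lambda-sources*) '(lambda ,args ,@body))
       ,fn)))

(defun source-of (function)
  "Return the recorded source for FUNCTION, or NIL if there is none."
  (values (gethash function *lambda-sources*)))

(defun make-adder (n)
  (lambda-with-source (x) (+ x n)))

(source-of (make-adder 5)) ; => (LAMBDA (X) (+ X N))
```

Note that this gives back the code but not the captured value of `n`, so it only answers half of the question.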

Anyhoo, this next week I need to work on the compiler a bit, clean up some of the stuff above & spend some time studying.

Seeya

This and That

I seem to oscillate slowly between needing to create and needing to consume media. This week I’ve either jumped back to consume or I’m procrastinating.

Either way I’ve not been super productive, let’s start with the consumption:

Nom nom knowledge

- Listened to Iceberg Slim’s biography - On stage one time Dave Chappelle was talking about how the media industry worked and likened it to pimping. He said this book pretty much explained the system. It’s damn depressing.

- Watched some TED talks. Filtering out all the TEDX shit really makes the site more valuable

- craig venter unveils synthetic life - It’s now been 5 years since the first man-made lifeform was booted-up. Fucking incredible work. Everything this guy works on is worth your time

- your brain on communication - FMRIs taken on people being read stories. Very cool way of setting up the tests to reveal the layered nature of brain processing

- scientist_makes_ears_out_of_apples - less on the bleeding edge but very cool. Seems that stripping living things down to their cellulose structure is possible in a home lab. I’d like to mess with this some day.

- how this fbi strategy is actually creating us based terrorists - Fucking infuriating but relieving to see how much info is available through the court proceedings

- The surprisingly logical minds of babies - I loved this one. Babies can infer based on probabilities. Very cool to see how these tests are constructed and good food for thought when musing on how brains work.

- What really matters at the end of life - Watch this. It is about designing for dying. Very moving, very important, I wish I could say all my family could leave on the terms he describes. Also badass is the fact that the talk is given by a cyborg and that isn’t the point of the talk. I love this part of our future.

- The sore problem of prosthetic limbs - How to make comfortable sockets for prosthetic limbs. I’m fairly sure this can be done without the MRI step, which would make it much cheaper and more portable. I’ll have to look into this at some point

- How to read the genome and build a human being - Really cool to see how machine analysis of the genome can let us predict some characteristics with high accuracy (height to within 5cm for example). A good intro talk

- Adam Savage’s love letter to cosplay - Passion and community, I loved this talk.

- I’ve also been listening to a book called ‘The Information: A History, a Theory, a Flood’ again. It’s an outstanding walk through our history of understanding what information is. From African drums, to Morse code, to computers, to genes. This book rules. Read/Listen to it. I’ve been repeating 2 chapters this last week trying to bake them into my brain. The first was on entropy (and how information & entropy are the same), and the second is on genes and how information flows from and through us.

Making stuffs

The first order of business was to look at PBR. Previously I had got deferred point lights to work, however I failed hard at the IBL step. Luckily I rediscovered this tutorial and understood how it fit into what I was doing. Last time I had tried to stick to one paper as (in my ignorance) each approach felt very different to me.

I wrote the shader in lisp and immediately ran into a few bugs in my compiler. This sucked and the fixes I made weren’t satisfying. The problems were dumb side-effects of how I was doing my flow analysis. I’m pretty sure now that I want to get my compile-time-values feature finished and then rewrite the code that uses the flow analyzer to use that instead.

I then ran into a few rendering issues. The first turned out to be a bug in my implementation of a function that samples from a cross texture (commonly used for environment maps). The next 2 I haven’t fixed yet:

- the env map filtering pass is super slow

- the resulting env map has horrifying banding

I checked the generated glsl and it looks fine. I’m struggling to work out how I’m screwing this up. I guess it could be that I have a bug in how I’m binding/unbinding textures and this is causing a flush..that could account for the speed…and maybe the graphical fuckups? I don’t know man.

Despite that it feels good to be back in that codebase. One thing that really stood out though was how much first-class functions could make the codebase cleaner and more flexible. I had started that feature partially for fun but more and more it seems it’s going to be very useful.

Given that, I spent last night digging into that branch of my compiler. I decided that even without support for closures it would still be a good feature. So I did the following:

- Throw exception on attempts to pass a closure as a value. I’ve tried to make the error message friendly so people get what is happening

- fixed a couple of glsl generation bugs around passing functions as objects

I then spent a little time looking into how to generalize my compile-time-value feature; this will mean I can not only pass around functions but also values of user defined types. I’m going to use this for vector spaces. I realized that this doesn’t currently have enough power to cover all the things I could do with flow analysis. This is a bummer but at this point I had drunk too much wine to come up with good ideas so I called it a night.

Next week I need to crack the new version of the spaces feature, get that merged in and get back into the PBR.

.. Oh and Christmas :p

Depending on Types

Working on the compiler in the previous weeks got me in a mode where I was thinking about types again.

I’m pretty set on the idea of making static type checking for lisp, however I’m interested in not only being able to validate my code, but also to play with random type systems and maybe make my own. I need an approach to doing this kind of compile-time programming.

Macros give a super simple api, they are just a function that gets called when the code is being compiled. Say we make a macro that multiplies some numbers at compile time (a bad usage but that doesn’t matter for the example)

(defmacro multiply-at-compile-time (&rest numbers)
  (assert (every #'numberp numbers)) ;; check all the arguments were number literals
  (apply #'* numbers)) ;; multiply the numbers

And now we have a function that calculates the number of seconds in a given number of days:

(defun seconds-in-days (number-of-days)
  (* number-of-days (multiply-at-compile-time 60 60 24)))

When we compile this the macros need to be expanded, so for each form the compiler will look and see if it is a list where the first element is the name of a macro. If it is then it calls the macro-function with the code in the argument positions. So it’ll go something like this:

- Looks at: (defun seconds-in-days etc etc)

- is defun the name of a macro? Yes, call the macro-function defun and replace (defun seconds-in-days etc etc) with the result

- the code is now:

(setf (function seconds-in-days) ;; NOTE: Not the actual expansion from my compiler, just an example
      (lambda (number-of-days)
        (* number-of-days (multiply-at-compile-time 60 60 24))))

- is setf the name of a macro? (let’s say no for the sake of this example) No? ok continue

- Looks at: (function seconds-in-days)

- is function the name of a macro? No? ok continue

- this repeats until we reach (multiply-at-compile-time 60 60 24)

- is multiply-at-compile-time the name of a macro? YES, call the macro-function multiply-at-compile-time with the arguments 60, 60 and 24 and replace (multiply-at-compile-time 60 60 24) with the result.

The final code is now:

(setf (function seconds-in-days)
      (lambda (number-of-days)
        (* number-of-days 86400)))

Technically we have avoided multiplying 60, 60 and 24 at runtime.. once again, this is of course a TERRIBLE use of macros as these kinds of optimizations are things that all decent compilers do anyway.

The point here though is that we implement a function and then there is a mechanism in the language that knows how to use it. This makes it endlessly flexible.
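You can poke at this mechanism from the repl with the standard `macroexpand-1` and `macro-function`. The sketch below redefines the example macro using multiplication, as the prose describes, so that it is self-contained.

```lisp
(defmacro multiply-at-compile-time (&rest numbers)
  (assert (every #'numberp numbers)) ; only number literals allowed
  (apply #'* numbers))               ; runs when the macro is expanded

;; Ask lisp to perform a single expansion step on a form.
(macroexpand-1 '(multiply-at-compile-time 60 60 24))
;; => 86400, T

;; This is how "is X the name of a macro?" can be answered.
(macro-function 'multiply-at-compile-time) ; => a function object
(macro-function 'car)                      ; => NIL, car is a regular function
```

The second return value of `macroexpand-1` says whether an expansion actually happened, which is handy when walking code yourself.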

So if I’m going to make a mechanism for hooking in types then I want a similar interface, implement something and have it be called.

Now I know nothing about how ‘real’ type-systems are put together so I bugged a dude at work who knows this stuff well. Olle is ace, he’s currently writing his own dependently-typed language for low level programming and so clearly knows his shit. When I mentioned I wanted this to be fairly general he recommended I look at ‘bidirectional type checking’.

A quick google later I had a pile of pdfs, but one clearly stood out as being the best place for me to start and that is this one. It’s a very gentle intro to the subject and with a few visits to youtube & wikipedia I was able to get through it.

One take away from it is that we can drive the process with a couple of functions, infer-type & check-type. infer-type takes a form (code) and an environment (explained below) and works out what the type of the form is. check-type takes a form, an environment and an expected type; it then infers the type of the form and ensures it is ‘equal’ to the required type. The environment mentioned above is the object that stores the mapping between names and types, so if you define an int variable named jam then that relationship is stored in the environment.

Unless I’ve massively misunderstood this paper this sounds like the start of a pretty simple api. Let’s fill in the gaps.
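To show the shape of that pair of functions, here is a deliberately tiny sketch. It is not taken from the paper or from any real checker: types are just keywords, the environment is an alist, only numbers, variables and `+` are understood, and the checking function is called `check-type-of` because `check-type` is already a standard Common Lisp macro.

```lisp
;; Hypothetical sketch: types are keywords, the environment is an alist.
(defun infer-type (form env)
  "Work out the type of FORM from its shape and the bindings in ENV."
  (cond ((integerp form) :int)
        ((floatp form) :float)
        ((symbolp form)
         (let ((binding (assoc form env)))
           (if binding
               (cdr binding)
               (error "Unknown variable ~a" form))))
        ((and (consp form) (eq (first form) '+))
         ;; every other argument must have the same type as the first one
         (let ((type (infer-type (second form) env)))
           (dolist (arg (cddr form) type)
             (check-type-of arg env type))))
        (t (error "Don't know how to infer the type of ~a" form))))

(defun check-type-of (form env expected)
  "Infer the type of FORM and make sure it matches EXPECTED."
  (let ((actual (infer-type form env)))
    (unless (eq actual expected)
      (error "~a has type ~a, expected ~a" form actual expected))
    actual))

(infer-type '(+ jam 1) '((jam . :int))) ; => :INT
```

Calling `(infer-type '(+ jam 1.5) '((jam . :int)))` signals an error instead, because `1.5` is checked against `:int`.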

First we need to be able to define our own checker. Let’s make up some syntax for that:

(defchecker simple-checker
  :fact-type simple-type)

It will define a class for the environment and a class for our types. We could then define a couple of methods:

(defmethod infer (code (env simple-checker))
  ...)

(defmethod check (code (env simple-checker))
  ...)

I specialize the methods on the type of the environment, whose name matches the name of the checker we defined.

Let’s now say we want to compile this code:

(let* ((x 10)
       (y 20))
  (+ x y))

First, we expand the code (I have made an expander that gets it into a form that is useful for my checking)

(let ((x 10))
  (let ((y 20))
    (funcall #'+ x y)))

My system then walks the code trying to find out facts (types) about the code and replacing each form with the form (the <some-fact> <the form>). The system knows how to handle some of the fundamental lisp forms like let, funcall, if, etc but for the rest the infer & check methods are going to be called. For example infer is going to be called for 10 and 20 but the system will handle adding the binding between x & the result from infer to the environment.

The result could look like this:

(the #<simple-type int>
     (let ((x (the #<simple-type int> 10)))
       (the #<simple-type int>
            (let ((y (the #<simple-type int> 20)))
              (the #<simple-type int>
                   (funcall (the #<simple-type func-int-int> #'+)
                            (the #<simple-type int> x)
                            (the #<simple-type int> y)))))))

where each of these #<simple-type int> is an instance of our simple-type class holding some info about the type it represents.

This type annotated code will then be the input to a function that turns it into valid common lisp code. The simplest version of this would simply remove the (the #<fact> ..) stuff from the code but a more interesting version would convert the type objects into lisp type names. So something like this:

(the (signed-byte 32)
     (let ((x (the (signed-byte 32) 10)))
       (the (signed-byte 32)
            (let ((y (the (signed-byte 32) 20)))
              (the (signed-byte 32)
                   (+ (the (signed-byte 32) x) (the (signed-byte 32) y)))))))

This is valid lisp, if you tell lisp to optimize this code it will produce very tight machine code. [0]

Usually lisp doesn’t need anywhere near this number of type annotations to optimize code but having more doesn’t hurt :p
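The ‘simplest version’ mentioned above, the one that just throws the annotations away, could look something like this. It is a naive sketch with an invented name (`strip-the`): it treats any three element list starting with `the` as an annotation, so it assumes well-formed code and would be fooled by, say, a let binding of a variable called `the`.

```lisp
;; Hypothetical sketch: remove (the <fact> <form>) wrappers from annotated code.
(defun strip-the (form)
  "Replace every (the <type> <form>) with just <form>, recursively."
  (cond ((atom form) form)
        ((and (eq (first form) 'the) (= (length form) 3))
         (strip-the (third form)))
        (t (mapcar #'strip-the form))))

(strip-the '(the int (let ((x (the int 10)))
                       (the int (+ (the int x) (the int 1))))))
;; => (LET ((X 10)) (+ X 1))
```

The more interesting version would keep the wrappers and swap each fact object for a lisp type specifier instead of dropping it.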

The result of this could be that we have a dynamic language where we can take chunks and statically type it, with checkers of our own devising, gaining all the benefits in checking and optimization that we would from any other static language.

.. That is of course if it works.. I could be talking out my arse here! :D

I need to do some more reading before diving back into this and I really should do some work on my PBR stuff again. So I will leave this project until next year. I’m just happy to have finally made a start on it.

Seeya

[0] Yet again, this is a trivial example but the idea extends to complex code. The advantage of having a readable post vastly outweighs being technically accurate

Hello progress my old friend

Ah this week was so much better, my brain and I were on the same team.

I made good progress in my compiler with first class functions. The way I implemented it is roughly as follows:

I make a class to represent compile-time values

(defclass compile-time-value (v-type)
  (ctv))

It inherits from v-type as that is the class of my compiler’s types.

It has one slot called ctv that is going to store what the compiler thinks the actual value is during compilation.

IIRC this associating of a value with a type is called ‘dependent types’. However I’m going to avoid that name as I don’t know nearly enough about that stuff to associate myself with it. I’m just going to call this compile-time-values or ctvs.

Next we need a type for functions.

(defclass function-spec (compile-time-value)
  (arg-spec
   return-spec))

Here we make a type that has a list of types for the arguments (arg-spec) and a list of types for the returns (return-spec). Return is a list as lisp supports multiple return values. Being a ctv the compiler can now associate values with this type.

Note we don’t have a name here as this is just the type of a function, not any particular one. In my compiler I have a class called v-function that describes a particular function. So there is a v-function for sin for example.

In lisp to get a function object we use the #' syntax. So #'print will give you the function named print. #'thing expands to (function thing) so in my compiler I defined a ‘special form’ called function that does the following:

- look up the v-function object for that name

- make an instance of function-spec with the result of step 1 as the ctv

- use the result of step 2 as the type of this form.

Nice! This means the specific function is now associated with this type and will be propagated around.

(let ((our-sin #'sin))
  (funcall our-sin 10))

Later our compiler will get to that funcall expression. It will look at the type of our-sin and see the ctv associated with it. It will then transform (funcall our-sin 10) to (sin 10) and compile that instead.
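Here is a stripped-down model of that transform in plain Common Lisp. It is not Varjo’s code: the classes only mirror the names used above, the ctv holds a function name (a symbol) rather than a v-function object, and the environment is a simple alist from variable names to type objects.

```lisp
;; Hypothetical sketch; class and function names only echo the ones in the post.
(defclass v-type () ())

(defclass compile-time-value (v-type)
  ((ctv :initarg :ctv :initform nil :accessor ctv)))

(defclass function-spec (compile-time-value)
  ((arg-spec :initarg :arg-spec :initform nil)
   (return-spec :initarg :return-spec :initform nil)))

(defun type-of-function-form (name)
  "Type for (function NAME): a function-spec whose ctv is the function name."
  (make-instance 'function-spec :ctv name))

(defun transform-funcall (form env)
  "Rewrite (funcall var args...) to (name args...) when VAR's type has a ctv."
  (destructuring-bind (op var &rest args) form
    (assert (eq op 'funcall))
    (let ((type (cdr (assoc var env))))
      (if (and (typep type 'compile-time-value) (ctv type))
          `(,(ctv type) ,@args)
          form))))

(let ((env (list (cons 'our-sin (type-of-function-form 'sin)))))
  (transform-funcall '(funcall our-sin 10) env))
;; => (SIN 10)
```

If the variable has no compile-time value attached, the form is returned untouched, which is where a real compiler would have to report an error or fall back to something else.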

Functions that take compile time values as arguments

We do a very simple hack when it comes to this. If we have something like this:

;; this takes a func from int to int and calls it with the provided int
(defun some-func-caller ((some-func (function (:int) :int))
                         (some-val :int))
  (funcall some-func some-val))

And we call it in the following code:

(labels ((our-local-func ((x :int))
           (* x 2)))
  (let ((our-val 20))
    (some-func-caller #'our-local-func our-val)))

Then the compiler will swap out the (some-func-caller #'our-local-func our-val) call with a local version of the function with the compile time argument hardcoded:

(labels ((our-local-func ((x :int))
           (* x 2)))
  (let ((our-val 20))
    (let ((some-func #'our-local-func))
      (labels ((some-func-caller ((some-val :int))
                 (funcall some-func some-val)))
        (some-func-caller our-val)))))

The some-func var is in scope for the local function some-func-caller so the transform we mentioned earlier will just work. The rest is just a local function transform and the compiler already knew how to do that.

Things get more complicated with closures and I haven’t finished that. I can now pass closures to functions but I cannot return them from functions yet. I know how I could do it but it feels hacky and so I’m waiting for more inspiration before I try that part again.

Primed for types

With all this compiler work my brain was obviously in the right place to start thinking about static typing in general. Being able to define your own type-system for lisp is something I have wanted for ages, but as support for this isn’t built into the spec I’ve been trying to work out what the ‘best approach™’ is.

Quick notes for those interested. Lisp has an expressive type system and a bunch of features to make serious optimizations possible. However it doesn’t have something to let me define my own type system and use it to check my lisp code.

The problem boils down to macroexpansion. If you want to typecheck your code you want to expand all those macros so you are just working with vars, functions & special-forms (don’t worry about these). However there isn’t a ‘macroexpand-all’ function in the spec[0]. There is a function for macroexpanding a macro form once, however this does not take care of things like the fact that you can define local, lexically scoped macros. This means there is an ‘expansion environment’ that is passed down during the expansion process and manipulating this is not covered by the spec.

There is however a tiny piece of fucking voodoo code that was written by one of the lisp compiler guys. It allows you to define a locally scoped variable that is available at compile time within the scope of the macro. With this I can create an object that will act as my ‘expansion environment’ and let me have what I need.

Anyhoo, the other day I came up with a scheme for defining blocks of code that will be statically checked and how I will do macroexpansion. It’s not perfect, but it’s predictable and that will do for me.
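I can’t say whether the following is the same code the post refers to, but one well known way to get a lexically scoped, compile-time-only binding in standard Common Lisp is to hide the value in a `symbol-macrolet` and read it back with `macroexpand-1` and the `&environment` parameter. The names `with-ctv` and `read-ctv` are made up for this sketch.

```lisp
;; Hypothetical sketch of a lexically scoped compile-time binding.
(defmacro with-ctv ((name value) &body body)
  "Bind NAME to the literal VALUE for macros expanded inside BODY."
  `(symbol-macrolet ((,name ,value))
     ,@body))

(defmacro read-ctv (name &environment env)
  "Expand to the literal currently bound to NAME by an enclosing WITH-CTV."
  (multiple-value-bind (value bound-p) (macroexpand-1 name env)
    (unless bound-p
      (error "~a has no compile-time binding here" name))
    `(quote ,value)))

(defun demo ()
  (with-ctv (depth :outer)
    (list (read-ctv depth)
          (with-ctv (depth :inner)
            (read-ctv depth)))))

(demo) ; => (:OUTER :INNER)
```

The inner binding shadows the outer one only within its own body, which is the ‘locally scoped’ behaviour wanted from an expansion environment.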

I am going to make a library whose current working title is checkmate. It will provide these static-blocks and within those you will be able to emit facts about the expressions in your code. For function calls it will then call a check-facts method with the arguments for the function and all the facts it has on them. You can overload this method and provide your own checking logic.

The facts are just objects of type fact and can contain anything you like. And because you implement check-facts you can define any logic you like there.

This should give me a library which makes it easier to define a type system. I can subclass fact and call that type and inside check-facts I implement whatever type checking logic I like.
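Since the library doesn’t exist yet, the following is pure guesswork about how that could look with CLOS. Only the names `fact` and `check-facts` come from the text above; the argument list of `check-facts`, the `simple-type` class and its `simple-type-name` reader are assumptions made for the sketch.

```lisp
;; Hypothetical sketch of the fact / check-facts idea using CLOS.
(defclass fact () ())

(defclass simple-type (fact)
  ((name :initarg :name :reader simple-type-name)))

(defgeneric check-facts (function-name arg-facts)
  (:documentation
   "Given the facts known about the arguments of a call to FUNCTION-NAME,
signal an error if they are unacceptable, else return the fact for the result."))

(defmethod check-facts ((function-name (eql '+)) arg-facts)
  (let ((names (mapcar #'simple-type-name arg-facts)))
    (unless (every (lambda (name) (eq name :int)) names)
      (error "+ expected only :int arguments but got ~a" names))
    (make-instance 'simple-type :name :int)))

(simple-type-name
 (check-facts '+ (list (make-instance 'simple-type :name :int)
                       (make-instance 'simple-type :name :int))))
;; => :INT
```

A different type system would just be a different subclass of `fact` plus its own set of `check-facts` methods.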

A while back I ported an implementation of the Hindley (Damas) Milner checking algorithm to lisp so my goal is to make something where I plug this in and get classic ML style type checking on lisp code.

Wish me luck!

Next?

I’m not sure, my next year is going to contain a lot of study so I hope I can keep on top of these projects as well. The last few weeks have certainly reminded me to trust my instincts on what I should be working on, and it’s good to feel ‘out of the woods’ on that issue.

Peace

[0] Although a bunch of implementations do provide one. I made a library to wrap those so technically I do have something that could work

Let it lie

I missed a week (SSSHHAAAME) because I didn’t get much of note done. I got mapping over tables working, along with destructuring of flat record data but the output -v- how much time I was sitting in front of the machine simply didn’t add up.

I’ve decided to stop fighting my brain and just put the project down for a bit. This sucks as it means I fail my November goal but I just have to accept that I either need to force myself to do something my brain isn’t enjoying (which isn’t the best way to wind down after work) or do something else. At the very least I get to confirm things I have been learning about how I learn/work, so that is some kind of positive I can scrape from this.

With this accepted I started looking at first-class functions in my shader compiler (Varjo).

It’s been a month since I touched this, so I spent a little time re-familiarizing myself with the problem and then I got to work.

First order of business was to get funcall working with variables of function type that are in scope. Something like this:

(let ((x #'foo))
  (let ((y x))
    (funcall y 10)))

I got the logic working for the above and then I spent a few hours making some parts of my compiler more strict about types. Some areas were just too forgiving about whether you had to provide a type object or let you pass a type signature instead. This made some other code more complicated than it needed to be. This was a relic from a much older version of the compiler.

I then spent some time thinking about how to handle passing functions to functions. I can use my flow analyzer and multiple passes but I don’t want to use that hammer if things can be easier.

For example let’s take this:

(labels ((foo ((f (function (:int) :int))) ;; take a func from :int -> :int and call it with 10
           (funcall f 10))
         (bar ((x :int)) ;; some func from :int -> :int
           (* x 2)))
  ;; do it
  (funcall #'foo #'bar))

I can replace the (funcall #'foo #'bar) with this:

(labels ((foo ()
           (let ((f #'bar))
             (funcall f 10))))
  (funcall #'foo))

which will get turned into

(labels ((foo ()
           (bar 10)))
  (funcall #'foo))

This means I generate a new local function for each call-site of a function that takes functions. The compiler will then remove any duplicate definitions.

At this point it’s worth pointing out one of the design goals of this feature. Predictability. This code is valid lisp:

(defun pick-func ()
  (if (< some-var 10)
      #'func-a
      #'func-b))

(defun do-stuff ()
  (funcall (pick-func) 10))

But at runtime we can’t pass functions around, so the best we could do for the above is to return an int and switch based on that.

int pick_func() {
    return (some_var < 10) ? 0 : 1;
}

void do_stuff () {
    switch (pick_func()) {
    case 0:
        func_a(10);
        break;
    case 1:
        func_b(10);
        break;
    }
}

This would work but this pattern can be slow if used too much. For now Varjo instead chooses to disallow this and make you implement it yourself. This means there are fewer cases where you are guessing what the compiler is up to if your code is slow. The compiler will be able to generate very precise error messages to explain what is happening in those cases.

That’s all for now. I’ve also got a bunch of ideas for this that are still very nebulous, I’ll write more as they become concrete.

Ciao

Trudge

This week I have been kind of working on the data-structure thing I mentioned last week.

The reason that it is ‘kind of’ is that I am having a big problem focusing on actually coding it. Over the course of the weekend I procrastinated my way into watching 3 movies rather than coding.

It is an odd one as I love the idea of the project, I want it to exist and (at least in the abstract) I am really interested in the implementation. However, for whatever reason, I am just struggling to stay focused when coding the damn thing. I haven’t pinned down what the issue is, but if I do I’ll report it here.

OK so what did I do?

- A bunch of small benchmarks to prove the premise to myself

- Defined the base types

- Worked out how the live redefining of types will work.

- Started work on record types (which will be the types of the columns of the tables)

The third one took the most time as I want both the old and new type to exist simultaneously. This will allow me to create the type and then sweep through the existing tables to try and upgrade them to the new type. If this fails for some reason (or the user halts it) then we can still keep working with both the old and new types. I had to prove to myself that I could do this in a way that wouldn’t just pollute the namespaces with crazy numbers of types & functions.

Another great side effect of this is that we can compile the types and functions with optimization set to high, this gives us the most accurate picture of how our code will behave when we ship it. We can do this as only the tables implementation calls these functions or uses these types directly, so there is almost no place that this will cause the user issues (unless they go out of their way to do that).

Sadly that’s all for this week. Let’s hope next week goes a little better

My plan for November

I’ve been writing up what I’ve been up to every week on a site called everyweeks.com. It was started by some friends and I’ve been trying to keep myself to it. However it has meant I’ve been bad at posting here.

Before now I’ve felt it rude to just dump a link here each week. I thought I should be writing fresh content for each site. But fuck it, if the choice is a weekly link to an article or a dead blog..I choose the link.

So here is this week’s one. It’s a plan of something I want to make this month.

Much reading but less code

TLDR: If you add powerful features to your language you must be sure to balance them with equally powerful introspection

I haven’t got much done this week. I have some folks coming from the UK soon, so I’ve been cleaning up and getting ready for that. I have decided to take on more projects though :D

Both revolve around shipping code, which was the theme last week as well. At first I was looking at some basic system calls and it seemed that, if there wasn’t a good library for this already, then I should make one. Turns out that was mostly me not knowing what I’m doing. I hadn’t appreciated that the posix api specifies itself based on certain types and that those types can have different sizes/layouts/etc on different platforms, which means that we can’t just define lisp equivalents without knowing those details. To get around this we need to use cffi’s groveler, but this requires you to have a C compiler set up and ready to go. This, in my opinion, sucks as the user now has to think about more than the language they are trying to work in. Also, because all libraries are delivered through the package manager, you tend not to know about the C dependencies until you get an error during build, which makes for a pretty poor user experience.

To mitigate this what we can do is cache the output of the C program along with info on what platforms it is valid for. That second part is a little more fiddly as the specification that is given to the groveler is slightly different for different platforms and those differences are generally expressed with read-time conditionals. If people used reader conditionals in the specification then the cache is only valid if the results of the reader conditionals match. The problem is that the code that doesn’t match the condition is never even read, so there is nothing to introspect.
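The 'never even read' part is easy to see with the standard reader. In this small sketch the feature name is made up and assumed to be absent from *features*, and read-spec-fragment is just a hypothetical wrapper for the demo:

```lisp
;; :feature-that-is-surely-absent is a made-up feature name, assumed not
;; to be in *features* on any implementation.
(defun read-spec-fragment ()
  "Read a form containing a reader conditional whose test fails."
  (read-from-string
   "(:always #+feature-that-is-surely-absent :sometimes :also-always)"))

;; (read-spec-fragment) gives back a list holding only :always and
;; :also-always. Neither :sometimes nor the condition that guarded it
;; survives into the result, so code working on the read form cannot
;; tell a conditional was ever there.
```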

One solution would be to tell people not to use reader-conditionals and use some other way of specifying the features required. We would make this a standard and we would have to educate people on how to use it.

But #+ #- are just reader macros so we could replace them with our own implementation which would work exactly the same except that it would also record the conditions and the results of the conditions.

This turned out to be REALLY easy!

The mapping between a character pattern like #+ and the function it calls is kept in an object called a readtable. We don’t want to screw up the global readtable so we need our own copy.

    (let ((*readtable* (copy-readtable)))
      ...)

*readtable* is the global variable where the readtable lives, so now any use of the lisp function read inside the scope of the let will be using our readtable (this effect is thread local).

Next we replace the #+ & #- reader macros with our own function my-new-reader-cond-func:

    (set-dispatch-macro-character #\# #\+ my-new-reader-cond-func *readtable*)
    (set-dispatch-macro-character #\# #\- my-new-reader-cond-func *readtable*)

And that’s it! my-new-reader-cond-func is actually a closure over an object that the conditions/results are cached into but that’s just boring details.
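To make those 'boring details' a bit more concrete, here is a minimal sketch of how such a recording reader conditional could be put together. The names feature-true-p, read-recording-conditionals and recording-cond are made up for this example, and this is not the code from the library; it only relies on standard Common Lisp reader functions.

```lisp
;; Sketch only: hypothetical names, not the library's implementation.

(defun feature-true-p (expr)
  "Evaluate a feature expression such as :sbcl or (:and :unix (:not :darwin))."
  (etypecase expr
    (symbol (and (member expr *features*) t))
    (cons (ecase (first expr)
            (:and (every #'feature-true-p (rest expr)))
            (:or (and (some #'feature-true-p (rest expr)) t))
            (:not (not (feature-true-p (second expr))))))))

(defun read-recording-conditionals (string)
  "Read one form from STRING. Returns the form plus a list with one
entry per #+ or #- seen: (sub-char feature-expression feature-present-p)."
  (let ((seen '())
        (*readtable* (copy-readtable))) ; leave the global readtable alone
    (flet ((recording-cond (stream sub-char numarg)
             (declare (ignore numarg))
             ;; Feature expressions are read in the keyword package.
             (let* ((expr (let ((*package* (find-package :keyword))
                                (*read-suppress* nil))
                            (read stream t nil t)))
                    (present (feature-true-p expr))
                    (keep (if (char= sub-char #\+) present (not present))))
               (push (list sub-char expr present) seen)
               (if keep
                   (read stream t nil t)
                   (let ((*read-suppress* t))
                     (read stream t nil t) ; skip over the guarded form
                     (values))))))         ; contribute nothing to the result
      (set-dispatch-macro-character #\# #\+ #'recording-cond)
      (set-dispatch-macro-character #\# #\- #'recording-cond)
      (values (read-from-string string) (reverse seen)))))
```

Reading "(:a #+(or) :b #-(or) :c)" with this should return the list (:a :c) as usual, plus a second value recording that (:or) was tested twice and was false both times. That second value is the kind of information a cache validity check needs.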

The point is we can now introspect the reader conditions and know for certain what features were required in the spec file, and we do this without having to add any new practices for library writers.

This is the reason for the TLDR at the top:

If you add powerful features to your language you must be sure to balance them with equally powerful introspection

Or at least trust programmers with some of the internals so they can get things done. You can totally shoot your foot off with these features, but that ship sailed with macros anyway.

I wrapped all this up in a library you can find here: https://github.com/cbaggers/with-cached-reader-conditionals

Other Stuff

Aside from this I:

- Pushed a whole bunch of code from master to release for CEPL, Varjo, rtg-math & CEPL.SDL2

- Requested that the new with-cached-reader-conditionals library and two others (for loading images) are added to quicklisp (the lisp package manager)

So more code shipping! yay!

Enough for now

Like I said, next week I’ll have people over so I won’t get much done, however my next goals are:

- Add groveler caching to the FFI

- Add functionality to the build system to copy dynamic libraries to the build directory of a compiled lisp program. This will need to handle the odd stuff OSX does with frameworks

Seeya folks, have a great week